Replacing Keen.io functionalities with Azure Functions and Cosmos DB

One of my customers asked me how to reduce what they were paying to receive SendGrid notifications about the state of the emails they sent. After thinking about a possible solution in Azure, I came up with an idea that let them cut their costs from USD 500 to less than USD 50 a month. Keep reading to find out how.

SendGrid is a very good email service that lets you send millions of emails through its platform. One of the mechanisms it offers to tell you what happened to the emails you sent (opened, reported as spam, deleted without being read, etc.) is an HTTP POST to a URL you provide. The body of that POST is JSON with the details of each result.

You might want to store this information in a repository that you can query, for example to find out which messages were reported as spam during the last three days.

My customer was using a service called Keen.io, which provided the webhook URL for SendGrid to post to, plus a repository and a platform to query the data gathered by the webhook.

But after a couple of months of using this service, the costs rose noticeably, hitting USD 500 for just a few thousand notifications received.

So they consulted me and described the situation, and I immediately imagined a solution based on Azure that would obviously be much cheaper.

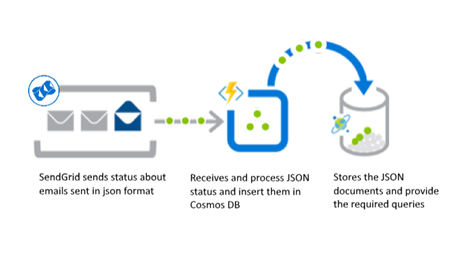

The main components would be the almighty Azure Functions on the receiving URL side, and Azure Cosmos DB as a repository.

Azure Functions can be deployed on the consumption plan, i.e. you are charged only for the time your functions are actually running. Not to mention that Azure gives you a million Azure Functions executions per month for free!

On the other hand, Cosmos DB stores your JSON documents natively and runs advanced, fast queries over them without much effort. And since all the information can be stored in just one collection, you can keep the costs very low: the price for one collection at 400 RU/s (you won't need more for a use case like this) is $21.92 per month.

The other considerable cost associated with Cosmos DB is the storage itself, at $0.25 per GB per month. Let's exaggerate and say you receive one GB of notifications per month. In the first month, your total cost will be about $22 + $0.25 = $22.25. In the second, you'll pay $22, plus $0.25 for what you were already storing, plus $0.25 for another GB: a total of $22.50. And so on.
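The arithmetic above can be sketched as a small helper. This is only an illustration under the article's own figures ($21.92 per month for throughput, $0.25 per GB per month for storage); the class and method names are hypothetical, and it assumes data is never deleted. Because it uses the exact $21.92 rather than the rounded $22, its results come out a few cents lower than the rounded numbers in the text.

```csharp
using System;

public static class CosmosCostEstimate
{
    // Figures quoted in the article (USD). Real Azure prices vary by region and over time.
    private const decimal ThroughputPerMonth = 21.92m; // one collection at 400 RU/s
    private const decimal StoragePerGbMonth = 0.25m;

    // Estimated bill for a given month (1-based), assuming gbPerMonth of new
    // notifications arrive every month and nothing is ever deleted.
    public static decimal MonthlyCost(int month, decimal gbPerMonth = 1m)
    {
        if (month < 1) throw new ArgumentOutOfRangeException(nameof(month));
        decimal storedGb = gbPerMonth * month;
        return ThroughputPerMonth + StoragePerGbMonth * storedGb;
    }
}
```

With one GB per month, `CosmosCostEstimate.MonthlyCost(1)` gives 22.17 and `CosmosCostEstimate.MonthlyCost(12)` gives 24.92, so even after a year of that exaggerated volume the bill stays well under USD 50.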

All in all, Keen.io is a very good service that keeps you away from the implementation details, but you can do it yourself on your own Azure subscription and keep the costs below one-tenth of what the off-the-shelf service was costing.

Let's get down to work

The following diagram depicts the solution for this case:

First, create a Cosmos DB account in your subscription, or get a free trial Cosmos DB. The solution is flexible enough that you could even use the Cosmos DB emulator.

Once you have created your Cosmos DB, you will have its endpoint and connection key (required to make the connection - like the connection string for a SQL Database). Keep them at hand as we are going to configure our function with them.

Now, using Visual Studio, create an HTTP-triggered Azure Function. A new function will be created from a predefined template. Remove the get method from the function header, as we only need the post method. It is good practice to reduce the attack surface available to a possible intruder.

As of November 2018, the template is a little outdated (the TraceWriter used for logging is being deprecated and replaced by ILogger). Not a problem: the code I'm showing you is already adjusted to be on the cutting edge (for now). Another thing to update is how we access the configuration settings. Notice that I have added an ExecutionContext parameter to the function signature. It helps us set up the config object to read those variables the way it is done in the .NET Core world.

So, in the development environment, app settings are loaded from local.settings.json; in production, they are loaded from the Application settings tab of the App Service.

The data sent from the client (SendGrid or another source) to Azure Functions is supposed to come in raw JSON format inside the body of the post message as an array of JSON documents. That's why I am using the JArray object to store these documents.
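As a rough illustration of that input, here is a minimal sketch that parses a body shaped like a SendGrid event POST with JArray from the Newtonsoft.Json package. The sample values are made up, and the class and method names are hypothetical; only the field names (email, timestamp, event) follow what SendGrid sends.

```csharp
using System;
using Newtonsoft.Json.Linq;

public static class SendGridPayloadExample
{
    // Illustrative body: two events in one POST. The values are invented for this sketch.
    public const string SampleBody = @"[
      { ""email"": ""alice@example.com"", ""timestamp"": 1543449600, ""event"": ""delivered"" },
      { ""email"": ""bob@example.com"", ""timestamp"": 1543449660, ""event"": ""spamreport"" }
    ]";

    // Number of events in the body. JArray.Parse throws if the body
    // is empty or is not a JSON array.
    public static int CountEvents(string body)
    {
        JArray events = JArray.Parse(body);
        return events.Count;
    }

    // Value of the "event" field of the entry at the given position.
    public static string EventTypeAt(string body, int index)
    {
        JArray events = JArray.Parse(body);
        return (string)events[index]["event"];
    }
}
```

Each element of the array is a self-contained JSON document, which is why it can later be inserted into the collection one entry at a time.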

Once you have the documents in memory, it is time to connect to Cosmos DB and insert them into the required collection. Apart from the Cosmos DB URI and key, we are going to need the names of the database and the collection in which to store the data. All these values should be provided as configuration variables, so you can change the repository whenever you want without changing your code.

The DocumentClient object does the magic for us. Once it is configured, a simple foreach traverses the JArray and inserts each entry (a JObject) into Cosmos DB with CreateDocumentAsync.

If inserting a document fails, execution continues with the remaining documents, so the insertion is not transactional. To make it transactional you would have to create a Cosmos DB stored procedure containing the transaction logic since, right now, transactions cannot be controlled from Cosmos DB clients, only inside the engine. Failed insertions are reported in the log, and the response at the end of the execution notes that not all the documents were inserted. This can help administrators check what went wrong.

This is the code that summarizes my previous instructions:

    using System;
    using System.IO;
    using System.Threading.Tasks;
    using System.Web.Http; // ExceptionResult
    using Microsoft.AspNetCore.Http;
    using Microsoft.AspNetCore.Mvc;
    using Microsoft.Azure.Documents;
    using Microsoft.Azure.Documents.Client;
    using Microsoft.Azure.WebJobs;
    using Microsoft.Azure.WebJobs.Extensions.Http;
    using Microsoft.Extensions.Configuration;
    using Microsoft.Extensions.Logging;
    using Newtonsoft.Json.Linq;

    public static class JsonInserter
    {
        [FunctionName("JsonInserter")]
        public static async Task<IActionResult> Run(
            [HttpTrigger(AuthorizationLevel.Function, "post", Route = null)] HttpRequest req,
            ILogger log,
            ExecutionContext context)
        {
            // This is the proper .NET Core way to get the config object to access configuration variables.
            // In the development environment, app settings are loaded from local.settings.json.
            // In production, they are loaded from the app settings of the App Service.
            var config = new ConfigurationBuilder()
                .SetBasePath(context.FunctionAppDirectory)
                .AddJsonFile("local.settings.json", optional: true, reloadOnChange: true)
                .AddEnvironmentVariables()
                .Build();

            // Getting the configuration variables (names are self-explanatory)
            var cosmosDBEndpointUri = config["cosmosDBEndpointUri"];
            var cosmosDBPrimaryKey = config["cosmosDBPrimaryKey"];
            var cosmosDBName = config["cosmosDBName"];
            var cosmosDBCollectionName = config["cosmosDBCollectionName"];

            // The data sent from the client to Azure Functions is supposed to come
            // in raw JSON format inside the body of the POST message,
            // specifically as an array of JSON documents.
            // That's why we use a JArray to store these documents.
            JArray data;
            string requestBody = await new StreamReader(req.Body).ReadToEndAsync();
            try
            {
                // JArray.Parse throws on an empty body or on anything that is not a JSON array
                data = JArray.Parse(requestBody);
            }
            catch (Exception exc)
            {
                // We rely on the JSON being parsed correctly, so if parsing fails
                // we must finish the execution.
                // Exceptions in this function are handled by writing a message to the log,
                // so we can trace in which part of the function they originated,
                // and by returning the exception text as the function response.
                log.LogError($"Problem with JSON input: {exc.Message}");

                // Bad request, since the format of the message is not as expected
                return new BadRequestObjectResult(exc.Message);
            }

            // This is the object that allows us to communicate with Cosmos DB.
            // It comes in the Microsoft.Azure.DocumentDB.Core package, so be sure to include it.
            DocumentClient client;
            try
            {
                // The client must be initialized with the Cosmos DB URI and key
                client = new DocumentClient(new Uri(cosmosDBEndpointUri), cosmosDBPrimaryKey);

                // If the desired database doesn't exist, it is created
                await client.CreateDatabaseIfNotExistsAsync(new Database { Id = cosmosDBName });

                // If the desired collection doesn't exist, it is created
                await client.CreateDocumentCollectionIfNotExistsAsync(
                    UriFactory.CreateDatabaseUri(cosmosDBName),
                    new DocumentCollection { Id = cosmosDBCollectionName });
            }
            catch (Exception exc)
            {
                log.LogError($"Problem communicating with CosmosDB: {exc.Message}");
                return new ExceptionResult(exc, true);
            }

            // Now that we have the db context we can insert the JSON documents in data
            int successfulInsertions = 0;
            foreach (var jobj in data)
            {
                try
                {
                    await client.CreateDocumentAsync(
                        UriFactory.CreateDocumentCollectionUri(cosmosDBName, cosmosDBCollectionName),
                        jobj);
                    successfulInsertions++;
                }
                catch (Exception exc)
                {
                    // We don't finish the execution here. If there are errors, the execution
                    // continues with the other documents, so this insertion is not transactional.
                    // To make it transactional you must create a Cosmos DB stored procedure
                    // with the transaction logic, as right now there are no means to control
                    // transactions from Cosmos DB clients, only inside the engine.
                    // Failed insertions are reported in the log, and the response at the end
                    // notes that not all the documents were inserted.
                    // This can help administrators check what went wrong.
                    log.LogError($"Problem inserting document: {exc.Message}\n" +
                                 $"Content: {jobj}");
                }
            }

            // A short report is returned as the response: how many documents were inserted
            // out of the total passed to the function to be processed
            var endingMessage = $"{successfulInsertions} / {data.Count} " +
                                "documents inserted in CosmosDB";
            log.LogInformation(endingMessage);
            return new OkObjectResult(endingMessage);
        }
    }
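Once the notifications are stored, the question from the beginning of the article (which messages were reported as spam during the last three days) becomes a query over the collection. The following is only a sketch: it assumes the documents keep the `event` and `timestamp` fields exactly as SendGrid sends them (with `timestamp` in Unix epoch seconds and `spamreport` as the event type), and the class and member names are hypothetical.

```csharp
using System;

public static class SpamReportQuery
{
    // Cosmos DB SQL text. Bracket notation is used for the property names
    // to avoid any clash with SQL keywords.
    public const string Text =
        "SELECT * FROM c WHERE c[\"event\"] = 'spamreport' AND c[\"timestamp\"] >= @cutoff";

    // The cutoff is expressed in Unix epoch seconds so it can be compared
    // directly with the timestamp stored in each document.
    public static long CutoffSeconds(DateTimeOffset now, int days = 3)
    {
        return now.AddDays(-days).ToUnixTimeSeconds();
    }
}
```

With the DocumentClient used in the function, the text and the `@cutoff` value could be wrapped in a `SqlQuerySpec` and passed to `CreateDocumentQuery` together with the collection URI. The same query can also be pasted into the Data Explorer of the Azure portal, replacing `@cutoff` with the computed number.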

You can also find this project on GitHub and collaborate if you want to add more features or improvements to it:

UPDATE

After the first deployment, my customer told me that sometimes they required the information from SendGrid to be stored on a MongoDB database they had outside Azure.

The code for receiving the data in the Azure Function is basically the same. So I decided to make the function configurable, letting you choose whether to store the received data in Cosmos DB using the native SQL model or using the MongoDB model. With the MongoDB model, the data can be stored in any MongoDB, i.e. not just Cosmos DB. The configuration file for the Azure Function then looks like this:

    {
      "IsEncrypted": false,
      "Values": {
        "FUNCTIONS_WORKER_RUNTIME": "dotnet",
        "AzureWebJobsStorage": "UseDevelopmentStorage=true",
        "AzureWebJobsDashboard": "UseDevelopmentStorage=true",
        "cosmosDbEndpointUri": "https://…",
        "cosmosDbPrimaryKey": "…==",
        "mongoDbConnectionString": "mongodb://….documents.azure.com:10255/?ssl=true&replicaSet=globaldb",
        "dbName": "…",
        "dbCollectionName": "sendgrid",
        "useMongoDb": "true"
      }
    }

The key variable here is useMongoDb. If it is set to true, the function stores the data in MongoDB (or Cosmos DB for MongoDB), and the values of the Cosmos DB variables are ignored.
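The way such a switch could be read is sketched below. This is not the code from the repository: the enum, the class and the fallback to the native SQL model when the flag is missing or unparsable are all assumptions made for illustration. Only the setting names come from the configuration file above.

```csharp
using System;
using System.Collections.Generic;

public enum StorageBackend { CosmosSql, MongoDb }

public static class BackendSelector
{
    // Reads the useMongoDb flag. A missing or unparsable value falls back to
    // the native SQL model (an assumption of this sketch).
    public static StorageBackend Choose(IReadOnlyDictionary<string, string> settings)
    {
        settings.TryGetValue("useMongoDb", out string raw);
        return bool.TryParse(raw, out bool useMongo) && useMongo
            ? StorageBackend.MongoDb
            : StorageBackend.CosmosSql;
    }

    // Settings each backend would need; the other backend's values are never read.
    public static string[] RequiredSettings(StorageBackend backend)
    {
        return backend == StorageBackend.MongoDb
            ? new[] { "mongoDbConnectionString", "dbName", "dbCollectionName" }
            : new[] { "cosmosDbEndpointUri", "cosmosDbPrimaryKey", "dbName", "dbCollectionName" };
    }
}
```

Keeping the decision in one place means the rest of the function only needs to ask which backend is active before choosing the client to insert with.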

The C# code also changed, especially to include the instructions that store the data in MongoDB. For this, you have to include the MongoDB.Driver NuGet package. The updated code is published in the GitHub repository, and you can use it to compare the experience of working with the native SQL mode and the MongoDB mode in Cosmos DB.

Comments

- Anonymous

November 29, 2018

I would say going with Table Storage can slash the cost even further.

- Anonymous

November 29, 2018

Absolutely right, kamlan. It would just require writing some code inside the function that parses the JSON and converts it to columns. And it would be really cheap. In this case the customer stuck with Mongo because they were already using a reporting tool based on that repository.
