How to: Mine the ULS logs for query latency

Tracking query latencies can be made easier through the use of the products ULS logs. Below you will find information on how to enable the specific ULS traces as well as information for how to parse the logs. The primary usage of this information is to monitor the ongoing health of your system. It is one tool in the toolbox to make sure that the system is running in a viable state. It is also necessary when you are making small changes to your environment so you can measure the benefits or detriments of the changes made. Another key usage of the query latency ULS logs is the ability to where the larger portions of time is being spent in the query. For example you can see the time spent in SQL improve after doing index defrags.

ULS logging

Making changes to ULS log settings can impact performance and cause more disk space to be consumed when. However, the category and level changes mentioned below are what SearchBeta is running with and the cost of this is negligible given the benefit it provides. Just make sure your logs files are not on a drive that is tight on disk space.

You will need to change the following ULS settings to get the events that we need traced.

From "Central Admin.Operations.Diagnostic Logging" set the following;

Category: "MS Search Query Processor"

Least critical event to report to the trace log: "High"

LogParser

There are a number of interesting traces that you get with the above setting. To really look at this data you will need to use some kind of log parsing utility to strip out the interesting traces and perform some additional post processing. I recommend that you use logparser.exe to do this parsing. Below I give examples of Log Parser queries to get at the data. Additionally you should provide the following input parameters to logparser.exe since the ULS log files are Unicode, tab separated text files.

- -i:TSV -iCodepage:-1 -fixedSep:ON

Traces

With the above ULS trace settings you will get the following messages in the log (location of these log files can be found in the above UI for changing the logging level):

- Completed query execution with timings: v1 v2 v3 v4 v5 v6

- The 5 numbers v1,v2,v3,v4,v5, and v6 are timemeasurements in milliseconds

- v6 = Cumulated time spent in various calls to SQL except the property fetching

- v5 = Time spent waiting for the full-text query results from the query server (TimeSpentInIndex)

- v4 = Latency of the query measured after the joining of index results with the SQL part of the query. This includes v5 and the time spent in SQL for resolving the SQL part of advanced queries (e.g. queries sorted by date or queries including property based restrictions like AND size > 1000).

- v4-v5 = Join tim

- v3 = Latency of the query measured after security trimming. It includes V4 plus retrieval of descriptors form SQL and access check.

- v3-v4 = Security Trimming tim

- v2 = Latency of the query measured after the duplicate detection.

- v3-v2 = Duplicate detection tim

- v1 = Total time spent in QP. (TotalQPTime)

- v1 -v2= Time spent retrieving properties and hit highlighting . (FetchTime)

- The 5 numbers v1,v2,v3,v4,v5, and v6 are timemeasurements in milliseconds

- Join retryv1 v2 v3

- Retry caused because there were not enough results from SQL that matched the results returned from the full-text index.

- Security trimming retry v1 v2 v3

- Caused by the user executing a query the returns a number of results that they do not have permission to read. The query is retried until the enough results are available to display the first page of results.

- Near duplicate removal retry v1 v2 v3

- There were so many virtually identical documents that were trimmed out that the query processor did not have an adequate number of documents to display

- The 3 numbers v1,v2 and v3 are counts of documents. If you see one of these messages in the log it means that the query processor was unable to satisfy the requested number of results on the first attempt and had to execute the SQL portion of the query a second++ time with a larger number of requested results. The numbers here are not excessively useful and most of the analysis you will do is around the existence of this trace. This and the relative frequency of each of the retries allows you to determine why so much time is being spent in a given phase of the query.

- v1 is the current upper bound on the number of documents to work with (this will go up on subsequent retries)

- v2 is the number of documents before the operation that caused the retry

- v3 is the number of documents after the operation that caused the retry.

Where is all of the time being spent for the queries executed in the system?

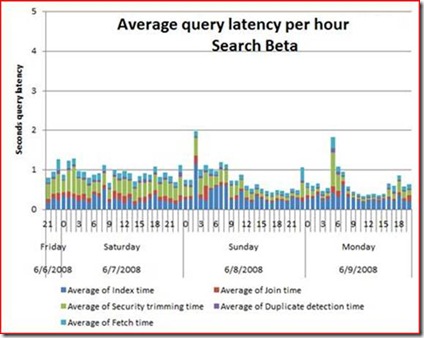

The answer to this question is primarily within the "Completed query execution…" trace. The number of retries help explain why the time spent in any one location is so high. Given all of the timing information thatyou can get from a single query and the fact that this data is available for each and every query executed, the problem becomes more of an exercise in figuring out how to store the data and provide a mechanism to summarize or chart it. Without doing this there is just too much data to try and interpret. The solution we have on SearchBeta is to collect the data on a regular basis (hourly) and import it into a SQL reporting server that is segregated from the SQL machine hosting the Search farm.

Once the data is in SQL we have created a number of Excel spreadsheets that query the data directly from SQL and chart it using Excel Pivot Tables/Charts. We have also gone further to provide a set of dashboards within a MOSS system that use Excel Server to provide up to date reports on the health of the system that are available for anyone to look at.

Once you have the basics of this system set-up there are a multitude of other reports and health monitoring that are possible; from collecting performance counters to mining IIS logs. The IIS logs provide a key piece of information about query latencies that is missing from the ULS trace. Primarily answering the question of how much additional time is spent rendering the UI.

A sample of one of the charts that we are able to produce with the ULS log data is below:

The log parser query that we use to mine the ULS logs is below. Note there are number of output options for LogParser, I am using a simple CSV file below. But you can also import the data directly into SQL.

*remember the numbers in the log are in milliseconds, the query below translates the time into seconds.

Select Timestamp

, TO_INT(Extract_token(Message,7, ' ')) as TotalQPTime

, TO_INT(Extract_token(Message,8, ' ')) as v2

, TO_INT(Extract_token(Message,9, ' ')) as v3

, TO_INT(Extract_token(Message,10, ' ')) as v4

, TO_INT(Extract_token(Message,11, ' ')) as TimeSpentInIndex

, TO_INT(Extract_token(Message,12, ' ')) as v6

, SUB(v4, TimeSpentInIndex) as JoinTime

, SUB(v3, v4) as SecurityTrimmingTime

, CASE v2

WHEN 0 THEN 0

ELSE SUB(v2, v3)

End as DuplicateDetectionTime

, SUB(TotalQPTime, v2) as FetchTime

INTO QTiming

FROM \\%wfeHost%\ULSlogs\%wfeHost%*.log<br>WHERE Category = 'MS Search Query Processor'

AND Message LIKE '%Completed query execution with timings:%'

*FYI -- Prior to the MSS release and Infrastructure Update updating MOSS with the MSS changes, the first two "tokens" (QueryID: XXX.) at the beginning of the trace did not exist. So you will need to subtract 2 from the second parameter of each "Extract_token" predicate in the above SQL command.

What is the percentage of retries that the system has?

To get an idea for how many "retries" are occurring you need to correlate the number of retries with the number of queries executed and calculate a % of total retry values for each type of retry. The timing data above does include time spent in a retry.

Log Parser queries:

- Total number of queries executed

SELECT count (Message) FROM *.log WHERE Category = 'MS Search Query Processor' and Message like '%Completed query execution%'

- Total number of retries due to Security trimming

SELECT count (Message) FROM *.log WHERE Category = 'MS Search Query Processor' and Message like '%Security trimming retry%'

- Total number of retries due to Join retries

SELECT count (Message) FROM *.log WHERE Category = 'MS Search Query Processor' and Message like '%Join retry%'

- Total number of retries due to Duplicate Removal

SELECT count (Message) FROM *.log WHERE Category = 'MS Search Query Processor' and Message like '%Near duplicate removal retry%'

Thank you for your time and as always I welcome any feedback or questions

Dan Blood

Senior Test Engineer

Microsoft Corp

Comments

Anonymous

March 10, 2009

The comment has been removedAnonymous

June 09, 2009

I was reading a blog posting from Dan Blood, Sr. Test Engineer at Microsoft, on how to mine the ULS logsAnonymous

November 29, 2010

Excellent Article, thanks for posting this Dan!