Load Testing with Agents running on Windows Azure – part 1

** Important note – Please read before reading further **

The content in this post was first written in 2011, exploring the earlier versions of Windows Azure. Since then, Windows Azure has evolved quite a lot, as have other services in the Microsoft portfolio.

We now have an official solution to run load tests on Azure - load testing is part of Team Foundation Service and a preview is available. If you haven't tried it already, I'd encourage you to try it.

Find out more at https://aka.ms/loadtfs.

I’ve been following the cloud computing scene with some interest, as I find it to be quite an interesting approach to IT for many companies. There are a few characteristics of cloud computing that I find very useful (and why not, exciting!), in particular elasticity, where you scale to meet demand without worrying about hardware constraints, and the pay-as-you-go model, where you are only charged when you actually deploy and use the system.

Of course, a big part of getting value from cloud computing is identifying the most suitable workloads to take to the cloud, so after some thinking (and based on some needs from a customer) I decided to try to build a Load Testing rig where the Agents run on Windows Azure. This scenario has some aspects that make it “cloudable”:

- Running the Agents as Windows Azure instances allows me to quickly create more of them and, thus, generate as much load as I can;

- A load test rig is not something that is used 24x7, so it’d be nice to just destroy the rig and not have it around when it is not needed.

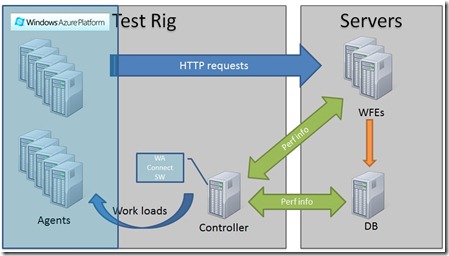

So, my first step was to decide how to design this and where to place each component (cloud or on-premises) to test a more or less typical application. Based on some decisions I’ll explain later in the post, I came up with this design:

The major components are:

- Agents – As intended, they should be running on Windows Azure so I could make the most of the cloud’s elasticity;

- Controller – I took some time deciding where the Controller should be located: cloud or on-premises. The Controller plays an important part in the testing process, as it must communicate with both the agents, to send them work and collect their performance data, and the system being tested, to collect its performance data. Because of this, some sort of cloud/on-premises communication would be needed. I decided to keep the Controller on-premises, mostly because the other option would mean either opening the firewalls to allow connections between a Controller in the cloud and, for example, a database server, or deploying the Windows Azure Connect plugin to the database server itself. For the customer I had in mind, this was not feasible.

- Windows Azure Connect – The Windows Azure Connect endpoint software must be active on all Azure instances and on the Controller machine as well. This provides IP connectivity between them and, provided that the firewall is properly configured, allows the Controller to send workloads to the agents. In parallel, over the LAN, the Controller collects the performance data from the stressed systems, using the traditional WMI mechanisms.
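To make the placement trade-off above a bit more concrete, here is a minimal Python sketch of it. It is only a toy model, not part of the actual rig: the names `Node`, `link_type` and `plan`, the three agents, and the single database server are all assumptions made up for illustration. It treats two machines in the same location as reachable directly, machines in different locations as reachable only if both run the Windows Azure Connect endpoint, and everything else as blocked.

```python
from dataclasses import dataclass
from enum import Enum


class Location(Enum):
    CLOUD = "cloud"
    ON_PREMISES = "on-premises"


@dataclass(frozen=True)
class Node:
    """A machine in the rig (hypothetical model, for illustration only)."""
    name: str
    location: Location
    has_connect_endpoint: bool = False  # Windows Azure Connect endpoint installed?


def link_type(a: Node, b: Node) -> str:
    """Return how two nodes can reach each other in this simplified model."""
    if a.location == b.location:
        return "same network"
    if a.has_connect_endpoint and b.has_connect_endpoint:
        return "Windows Azure Connect"
    # Crossing the cloud boundary without Connect on both ends would
    # require opening firewalls, which this model treats as not allowed.
    return "blocked"


def plan(controller_location: Location) -> dict:
    """Map each node the Controller must talk to onto the link it would use."""
    controller = Node("controller", controller_location, has_connect_endpoint=True)
    # Assumption: three agents, all on Azure with the Connect endpoint active.
    agents = [Node(f"agent-{i}", Location.CLOUD, True) for i in range(1, 4)]
    # Assumption: the system under test is an on-premises database server
    # where installing the Connect endpoint is not an option.
    database = Node("database", Location.ON_PREMISES)
    return {n.name: link_type(controller, n) for n in agents + [database]}


if __name__ == "__main__":
    for location in Location:
        print(location.value, plan(location))
```

In this model, an on-premises Controller reaches every agent through Windows Azure Connect and the database over the local network, while a Controller in the cloud reaches the agents but finds the database blocked, which is the reason given above for keeping the Controller on-premises.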

Once I knew what I wanted to design, my next step was to start working hands-on on the scripts that would enable this to be built. My ultimate goal was to have something fully automated, with as little manual work as possible. I also knew I had to make a decision about the type of role to be used for the Agent instances: web, worker or VM.

I’ll explore these topics in the next parts of this post.

Comments

- Anonymous

August 02, 2012

I'd love to see this revisited to include using the Windows Azure VPN preview feature and the potential for Windows Azure Virtual Machines to host the controller without risking the loss of data that could happen with a Cloud Services/Worker Role hosted controller. All in all, love the approach and great work on the code, MSDN articles, and these blog posts.