Exchange 2013 – Performance counters and their thresholds

The official TechNet list of the most important performance counters to monitor for Exchange 2013 is now available!

The good news is that since Exchange 2013 is now more or less a single box (the separable CAS role is merely a protocol forwarder), the list of counters to monitor is simplified down to 71 counters:

| Counter category | With Threshold | Without Threshold (Load Indicator) |
|---|---|---|
| Client Access Server Counters | | 12 |
| Database Counters | 5 | 4 |
| HTTP Proxy Counters | | 7 |
| RPC Client Access Counters | 2 | 4 |
| ASP.NET | 4 | 2 |
| Processor and Process Counters | 4 | 1 |
| Netlogon Counters | | 5 |
| Information Store Counters | 3 | 2 |
| Exchange Domain Controller Connectivity Counters | 4 | |
| Network counters | 3 | 1 |
| Workload Management Counters | | 3 |
| .NET Framework Counters | 2 | 1 |
| Memory counters | 2 | |
| Grand Total | 29 | 42 |

Remember the process for analyzing an Exchange performance concern (once it’s determined that the latency is server-side and not client-side or network-related):

1. Check the RPC requests as well as the RPC latency counters

and then:

2. Determine the likely cause by checking:

2.1. The Database counters, to see whether some databases have read or write latency. If so, check the underlying disk latency counters to see whether something is wrong with the disk subsystem (it can be the queue depth, or simply a LUN that cannot deliver enough I/Os).

2.1.1. The disk counters to monitor to rule out performance issues caused by the disk subsystem are:

- LogicalDisk \ Avg. Disk sec/Read: this one matters for end-user performance. Bad performance on this counter usually shows up as spikes over 50ms, which are reflected in the RPC Average Latency of MSExchangeIS, then in the RPC Averaged Latency of MSExchange RpcClientAccess, and eventually in Outlook end-user performance.

- LogicalDisk \ Avg. Disk sec/Write: this one is important as well, because slow writes delay the flush of data from memory to the EDB database on disk. The database cache in memory then fills up, you get database transaction stalls, and transactions have to wait until they can be placed in the database cache, which in the end also results in RPC Average Latency. You’ll see issues on Database transaction stalls first, though, or on Log Records stalls if write performance is bad on the log drives.

- LogicalDisk \ Disk Transfers/sec: this one reflects the IOPS that the Exchange server demands from the disk subsystem. Your sizing tells you how many IOPS Exchange may ask for at peak load.

- NOTE: disk-related performance issues can be caused not only by too few disks in a LUN or by a hardware issue, but also by a configuration issue (such as the queue depth on the server's HBA card, the queue depth configuration on the SAN switch, outdated HBA drivers on the server, etc.).

2.1.2. Check my older blog post on the MSPFE TechNet Blog for the complete performance assessment methodology; the general method remains the same for all Exchange versions.

2.2. The CPU and Memory counters, to see whether there is too much pressure on these two basic resources.

2.3. The RPC Operations/sec, active user count and active connection count counters, to see whether an unusual load is straining the server’s resources (CPU and Memory usually suffer first in that case; database and disk latency can also be the cause if the sizing hasn’t been done properly).

2.4. If none of the above 2.x counters shows unusual values, also check your Antivirus counters, as antivirus software can sometimes block RPC processing (seen on a customer’s site: RPC requests growing and hardly decreasing, RPC latency quite high, but all other counters like CPU, Database latency, etc. were below the error thresholds).
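The steps above can be sketched as a small triage function. This is only an illustration under stated assumptions, not an official tool: the dictionary keys are made-up short names for values you would read from Perfmon, the limits are the ones quoted in this post, and the "twice the baseline" rule for unusual load is an arbitrary placeholder to adapt to your own sizing.

```python
from typing import Dict, List

# Limits quoted in this post (averages unless stated otherwise).
RPC_LATENCY_MS = 250.0      # MSExchange RpcClientAccess\RPC Averaged Latency
RPC_REQUESTS = 40.0         # MSExchange RpcClientAccess\RPC Requests
DB_READ_MS = 20.0           # I/O Database Reads (Attached) Average Latency
DB_WRITE_MS = 50.0          # I/O Database Writes (Attached) Average Latency
LOG_WRITE_MS = 10.0         # I/O Log Writes Average Latency
DISK_READ_SPIKE_MS = 50.0   # spikes on Avg. Disk sec/Read
CPU_PCT = 75.0              # Processor(_Total)\% Processor Time
COMMITTED_PCT = 80.0        # Memory\% Committed Bytes In Use
LOAD_FACTOR = 2.0           # ASSUMPTION: "unusual load" = 2x your own baseline


def triage(s: Dict[str, float]) -> List[str]:
    """Walk steps 1 to 2.4 and return findings. Keys of `s` are hypothetical names."""
    # Step 1: RPC requests and RPC latency.
    if s["rpc_latency_ms"] <= RPC_LATENCY_MS and s["rpc_requests"] <= RPC_REQUESTS:
        return ["RPC counters are within thresholds"]

    findings: List[str] = []
    # Step 2.1: database latency, then the underlying disk counters.
    if (s["db_read_ms"] > DB_READ_MS
            or s["db_write_ms"] > DB_WRITE_MS
            or s["log_write_ms"] > LOG_WRITE_MS):
        findings.append("database latency is high: check the disk counters")
        if s["disk_read_max_ms"] > DISK_READ_SPIKE_MS:
            findings.append("disk read latency spikes above 50 ms")
    # Step 2.2: CPU and memory pressure.
    if s["cpu_pct"] > CPU_PCT or s["committed_pct"] > COMMITTED_PCT:
        findings.append("CPU or memory pressure")
    # Step 2.3: unusual load compared with a known baseline.
    if s["rpc_ops_per_sec"] > LOAD_FACTOR * s["baseline_rpc_ops_per_sec"]:
        findings.append("unusual load (RPC Operations/sec well above baseline)")
    # Step 2.4: nothing else stands out, so look at the antivirus counters.
    if not findings:
        findings.append("no usual suspect: check the antivirus counters")
    return findings


if __name__ == "__main__":
    sample = {
        "rpc_latency_ms": 300, "rpc_requests": 55,
        "db_read_ms": 35, "db_write_ms": 20, "log_write_ms": 5,
        "disk_read_max_ms": 80, "cpu_pct": 40, "committed_pct": 60,
        "rpc_ops_per_sec": 100, "baseline_rpc_ops_per_sec": 90,
    }
    for finding in triage(sample):
        print(finding)
```

The function returns every finding rather than stopping at the first one, because several causes can overlap (for example an undersized disk subsystem that only shows under unusual load).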

Check out all the counters and their descriptions below:

Exchange 2013 Performance Counters

https://technet.microsoft.com/en-us/library/dn904093(v=exchg.150).aspx

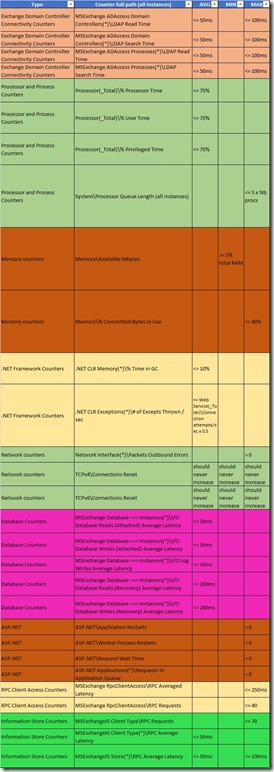

For a summarized view, below is a sub-list of the above TechNet Exchange counters showing only those which have thresholds (29 of the 71 TechNet counters have thresholds); the TechNet article above has the entire list and descriptions. The picture uses colors for readability between categories, and the table is a simple text table so that you can copy and paste the counter paths, which makes it a bit easier to integrate them in SCOM or Perfmon custom alerts, for example.

| Type | Counter full path (all instances) | AVG | MIN | MAX |
|---|---|---|---|---|
| Exchange Domain Controller Connectivity Counters | MSExchange ADAccess Domain Controllers(*)\LDAP Read Time | <= 50ms | | <= 100ms |
| Exchange Domain Controller Connectivity Counters | MSExchange ADAccess Domain Controllers(*)\LDAP Search Time | <= 50ms | | <= 100ms |
| Exchange Domain Controller Connectivity Counters | MSExchange ADAccess Processes(*)\LDAP Read Time | <= 50ms | | <= 100ms |
| Exchange Domain Controller Connectivity Counters | MSExchange ADAccess Processes(*)\LDAP Search Time | <= 50ms | | <= 100ms |
| Processor and Process Counters | Processor(_Total)\% Processor Time | <= 75% | | |
| Processor and Process Counters | Processor(_Total)\% User Time | <= 75% | | |
| Processor and Process Counters | Processor(_Total)\% Privileged Time | <= 75% | | |
| Processor and Process Counters | System\Processor Queue Length (all instances) | <= 5 x number of processors | | |
| Memory counters | Memory\Available MBytes | >= 5% total RAM | | |
| Memory counters | Memory\% Committed Bytes In Use | <= 80% | | |
| .NET Framework Counters | .NET CLR Memory(*)\% Time in GC | <= 10% | | |
| .NET Framework Counters | .NET CLR Exceptions(*)\# of Exceps Thrown / sec | <= Web Service(_Total)\Connection attempts/sec x 0.5 | | |
| Network counters | Network Interface(*)\Packets Outbound Errors | = 0 | | |
| Network counters | TCPv4\Connections Reset | should never increase | should never increase | should never increase |
| Network counters | TCPv6\Connections Reset | should never increase | should never increase | should never increase |
| Database Counters | MSExchange Database ==> Instances(*)\I/O Database Reads (Attached) Average Latency | <= 20ms | | |
| Database Counters | MSExchange Database ==> Instances(*)\I/O Database Writes (Attached) Average Latency | <= 50ms | | |
| Database Counters | MSExchange Database ==> Instances(*)\I/O Log Writes Average Latency | <= 10ms | | |
| Database Counters | MSExchange Database ==> Instances(*)\I/O Database Reads (Recovery) Average Latency | <= 200ms | | |
| Database Counters | MSExchange Database ==> Instances(*)\I/O Database Writes (Recovery) Average Latency | <= 200ms | | |
| ASP.NET | ASP.NET\Application Restarts | = 0 | | |
| ASP.NET | ASP.NET\Worker Process Restarts | = 0 | | |
| ASP.NET | ASP.NET\Request Wait Time | = 0 | | |
| ASP.NET | ASP.NET Applications(*)\Requests In Application Queue | = 0 | | |
| RPC Client Access Counters | MSExchange RpcClientAccess\RPC Averaged Latency | <= 250ms | | |
| RPC Client Access Counters | MSExchange RpcClientAccess\RPC Requests | <= 40 | | |
| Information Store Counters | MSExchangeIS Client Type\RPC Requests | <= 70 | | |
| Information Store Counters | MSExchangeIS Client Type(*)\RPC Average Latency | <= 50ms | | |
| Information Store Counters | MSExchangeIS Store(*)\RPC Average Latency | <= 50ms | | <= 100ms |
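As a rough illustration of how these thresholds could be turned into custom alerts, here is a minimal Python sketch. It is not how SCOM or Perfmon evaluates alerts: `Rule`, `check` and `derived_limits` are hypothetical names, only a few rows of the table are included, and the 100 ms figures are treated as limits on spikes (maximum values), which is an assumption about how to read the table.

```python
from typing import Dict, List, NamedTuple, Optional


class Rule(NamedTuple):
    """One row of the threshold table (hypothetical structure)."""
    counter: str
    avg_limit: Optional[float]          # limit on the average, None if not defined
    max_limit: Optional[float] = None   # limit on spikes, None if not defined


# A few rows from the table above; values in ms or percent.
RULES = [
    Rule(r"MSExchange ADAccess Domain Controllers(*)\LDAP Read Time", 50, 100),
    Rule(r"Processor(_Total)\% Processor Time", 75),
    Rule(r"MSExchange Database ==> Instances(*)\I/O Log Writes Average Latency", 10),
    Rule(r"MSExchange RpcClientAccess\RPC Averaged Latency", 250),
]


def derived_limits(processors: int, total_ram_mb: float,
                   conn_attempts_per_sec: float) -> Dict[str, float]:
    """Thresholds that depend on the server instead of being fixed numbers."""
    return {
        # System\Processor Queue Length: <= 5 x number of processors
        "processor_queue_length_max": 5 * processors,
        # Memory\Available MBytes: >= 5% of total RAM
        "available_mbytes_min": 0.05 * total_ram_mb,
        # # of Exceps Thrown / sec: <= Connection attempts/sec x 0.5
        "exceptions_per_sec_max": 0.5 * conn_attempts_per_sec,
    }


def check(rule: Rule, samples: List[float]) -> List[str]:
    """Compare the average and the maximum of `samples` with the rule's limits."""
    problems: List[str] = []
    if not samples:
        return problems
    avg = sum(samples) / len(samples)
    peak = max(samples)
    if rule.avg_limit is not None and avg > rule.avg_limit:
        problems.append(f"{rule.counter}: average {avg:.1f} > {rule.avg_limit}")
    if rule.max_limit is not None and peak > rule.max_limit:
        problems.append(f"{rule.counter}: maximum {peak:.1f} > {rule.max_limit}")
    return problems


if __name__ == "__main__":
    ldap_samples = [40.0, 45.0, 120.0]   # made-up values, in ms
    for line in check(RULES[0], ldap_samples):
        print(line)
    print(derived_limits(processors=8, total_ram_mb=65536, conn_attempts_per_sec=200))
```

Counters marked "= 0" or "should never increase" are not covered here; they would need a comparison between two consecutive samples rather than a fixed limit.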

Comments

- Anonymous

January 27, 2015

Thanks

- Anonymous

January 27, 2015

Thanks!

- Anonymous

June 16, 2015

Sammy,

I am pretty sure that the value for "MSExchange RpcClientAccess\RPC Averaged Latency" should be below 50 or 30 ms. Otherwise things will get slow for the client. Could you double-check the 250 ms specified?

The counter does not show the cause of the problem, but it seems to be perfect for indicating whether end users will call you soon. So it is important.

- Anonymous

June 06, 2016

Hey - I'm pretty sure that "Information Store Counters: MSExchangeIS Client Type\RPC Requests" is not a threshold-type counter, so the value <= 70 cannot be used. This is a cumulative-type counter.

- Anonymous

August 10, 2016

Hmm, I have to triple-check this... If so, we'll have to correct our original TechNet article. Thanks for the heads-up!