SQL Server 2012 AlwaysOn – Part 3 – SAP configuration with two Secondary Replicas

[Edit] You can find the summary of the complete series here.

After having learned how AlwaysOn handles quorum detection and how a Standard SAP/SQL Server WSFC configuration can be used w/o large changes to the WSFC configuration increase availability of SQL the SAP database(s) by using non-shared disks, we want to look at another typical configuration. A configuration where we include three server nodes of which one is placed in a DR site. Again as in the former part, we will only take a look at the underlying WSFC configuration. The actual detailed steps creating an Availability Group will be described as soon as we are through with our reference architectures. Let’s just work on this larger theme in the order of a deployment. Let’s get all the infrastructure right with our datacenter folks and then as SAP Basis team or DBA turn our head to the SQL Server and SAP part.

Move from Database Mirroring and Log-Shipping to AlwaysOn

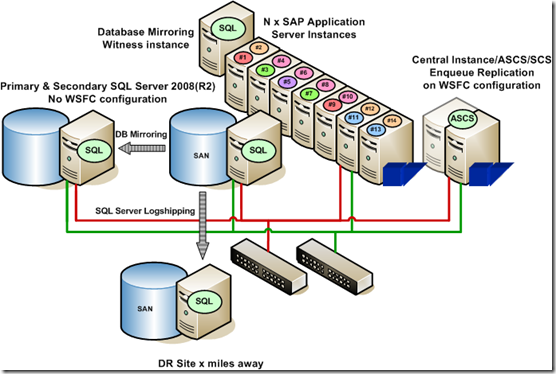

This is a configuration which is used by several SAP customers including Microsoft for their SAP ERP system. Local HA for the SAP databases is done by synchronous Database Mirroring with automatic failover. An additional SQL Server instance is providing the functionality of the witness or quorum to enable automatic failover capabilities. With SAP customers, this configuration proved to allow platform uptimes (measured for unplanned failures) of 99.999% and usually is used for the most critical SAP systems. The SAP single point of failure components are installed on a separate WSFC configuration which eventually might be shared with ASCS/SCS/CI of other SAP systems (multi-SID clustering - https://blogs.msdn.com/b/saponsqlserver/archive/2007/05/30/sap-multi-instance-clustering.aspx ). In order to supply the DR site which is many miles away, customers used SQL Server Log-Shipping. Dependent on the period of Transaction log backups, RPO (Recovery point Objective) could be kept to around 60-120sec for the DR site. The local synchronous database mirroring did provide a RPO of 0sec for committed transactions. Usually the DR site is in an own subnet. You might or might not have a dedicated network bandwidth for Log-shipping, dependent on how the datacenter in the DR site is being used by the rest of the company.

The goal to achieve is to replace Database Mirroring and Log-Shipping with new AlwaysOn technology. Advantages would be that one could lower the RPO for the DR site and the usage of one single functionality instead of 2 functionalities to cover local HA requirement and remote DR requirements. One would have one framework to setup, administrate and monitor the complete HA/DR functionality whereas in the configuration shown above, two frameworks needed to be used. To get to the new configuration one needs to:

- Move the 3 server nodes which are participating right now into one WSFC configuration

- Deciding on the quorum model/configuration based on different circumstances and preferences

Let’s discuss some of the options on what to do here:

- Our assumption is that we desire local failover and manual failover to the DR site. Usually in such cases, the SAP landscape would need to be failed over manually as well since we need to assume the main datacenter being off duty. So assumption is that we got a setup of SAP in the DR site. Usually this would be the test system of the related SAP production system. That test system would have production instances pre-installed which are shut down.

- Absolute priority is to keep the system running in the main datacenter. Clear goal is to get to 5 nines (99.999) in regards to unplanned downtimes in the main site.

- Therefore no incidents happening in the DR site or in network traffic between different datacenters should be able to jeopardize availability of the main datacenter site.

- This directs to keep all the quorum votes within the main datacenter site.

- The node in the DR site should therefore not have a vote in the quorum at all. Therefore a node majority quorum will not be the choice

- We also don’t want to create a shared disk for the 2 server nodes running in the main datacenter just to create a quorum for those two nodes (despite the fact that it is possible to create a cluster with asymmetric storage these days – we’ll see whether we will describe that one)

- We also don’t want to place a file share quorum into any other datacenter since it might be vulnerable to network issues

As a conclusion one of the best infrastructure configurations would look like:

- Create a new WSFC configuration over the 3 server nodes involved

- Assign a vote of 0 to the server node in the DR site (see next section)

- Find a third quorum vote in the main datacenter. Since the SAP single point of failure components already require a cluster, an easy way to get to a file share is to create a clustered file share on the existing WSFC configuration for the SAP single point of failure.

- Hinder the ‘Cluster Group’ to run from the 3rd node in the DR site

The so called ‘Cluster Group’ resource which usually represents the name of the Cluster still would able to run on every one of the 3 nodes. This ‘Cluster Group’ is nothing else than another group but not shown under services. To find out more about it, a good source to read is: https://blogs.technet.com/askcore/archive/2011/08/12/how-to-failover-the-cluster-group-and-available-storage-cluster-groups.aspx . In order to keep anything happening in the DR site away from the WSFC configuration of our AlwaysOn configuration, we need to restrict the ‘Cluster Group’ from running on the node of our DR site. Please see the last section for details.

This leads us to a WSFC configuration which will build a quorum with the 2 nodes in the main datacenter plus a file share which in order to be highly available is clustered. All quorum configurations are running in the main datacenter. In case of a disaster failover of the complete SAP landscape to the DR site, the third cluster node would be required to be started with the forcequorum option.

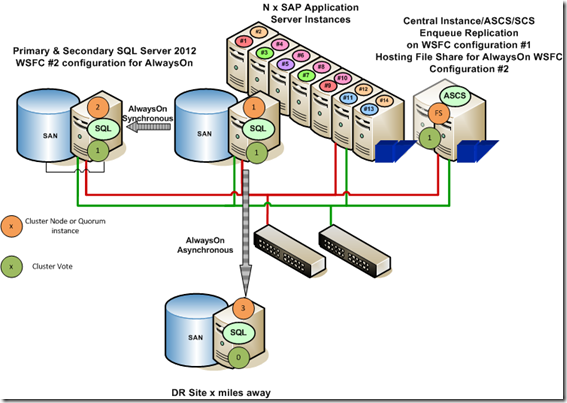

So at the end the scenario as configured with AlwaysOn looks like:

Let’s go again through the most important characteristics of the configuration:

- On the right side, the WSFC configuration for the SAP ASCS/SCS/CI remains untouched. Same quorum components, etc. Only difference is that there is a clustered share now for the second Cluster configuration.

- On the left side we look at a cluster which contains 3 nodes with the two database nodes in the main data center and one in the DR site

- The DR site node does not vote into the quorum

- The node running the DBM witness fell away

The configuration above represents the configuration Microsoft chose to deploy their productive SAP ERP system on. This system is productive on pre-released SQL Server 2012 since November 2012 and uses AlwaysOn in the configuration shown above for 3 months so far very successfully. All the different considerations as described above were debated and discussed in order to get to a solution which reflects the priorities of Microsoft’s business. Also past history about incidents of outages and their root cause got analyzed to come up with the requirements listed. As demonstrated these requirements first needed to be mapped into an appropriate WSFC configuration before one now can make the move to actually create an AlwaysOn Availability Group

REMARK: if you chose to run the Cluster Validation Wizard, the result will tell you that it is recommended to run a node majority quorum configuration. This is caused by the fact that the cluster validation doesn’t realize that that one node doesn’t have any quorum vote. In Windows Server 2008 SP1 it doesn’t check it. Therefore you can ignore the warning in the cluster validation wizard.

De-Assigning quorum vote from a WSFC node

Up to March 2011 there was not even a possibility to choose whether a server node which is part of a WSFC configuration has a vote in the quorum or not. It usually had one by default (unless you chose disk-only majority – not recommendable for the configurations we are debating here). Hence our configuration out of 3 server nodes would have automatically made a nice node majority cluster. However our demand of not having any quorum vote in the DR site could not have been satisfied. However in March 2011, Windows Development delivered a Windows QFE with https://support.microsoft.com/kb/2494036 . This QFE needs to be installed on ALL the three server nodes which will make our WSFC configuration. Then you create the WSFC configuration amongst the three nodes. Best Practices as documented in Microsoft Whitepaper and Technet articles do apply for such a WSFC configuration as well. E.g. dedicated NICs for the cluster heartbeat are advisable as documented in those papers.

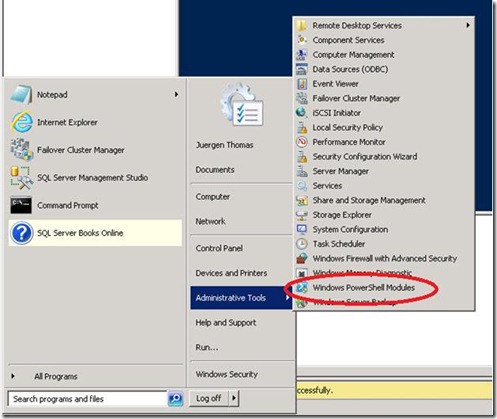

Next step is to start a PowerShell Command Window on one of the server nodes of the WSFC configuration as shown here:

Now execute the following command in the PowerShell command window:

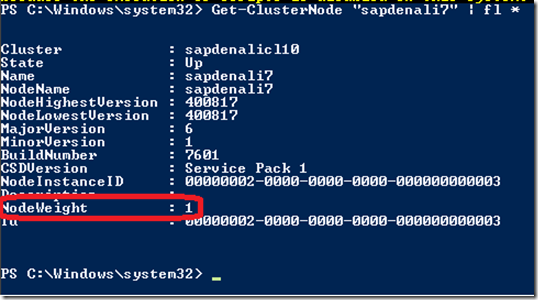

Get-ClusterNode “<NodeName>” | fl *

Where <NodeName> can be one of the three server nodes in the WSFC configuration. A typical result would look like:

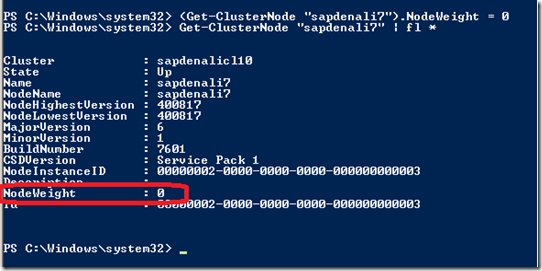

As seen above the server node does one quorum vote. In order to change this vote to 0, execute the following PowerShell command:

(Get-ClusterNode “<NodeName>”).NodeWeight = 0

<NodeName> being the server node name of the server you want to de-assign the quorum vote. To check whether the command was executed successfully, you run the first PowerShell command again. The result now should look like:

Now the server node does not have any influence in the quorum vote anymore. Performing these steps on the server node in the DR site will eliminate that server from voting for quorum. Means the voting is restricted to the two server nodes in the main datacenter and the third vote which we suggested to be a file share on the WSFC configuration of the SAP application layer. The steps can be executed on every one of the nodes in the WSFC configuration. The configuration of votes is persisted and hence does survive reboots or a restart of the cluster.

Moving the ‘Cluster Group’

In the scenario we are faced with the overriding goal that anything happening in the DR site should not impact the functionality running in the man datacenter . As a result of this we also need to avoid that important functionality for the WSFC framework would run in the DR site. If the so called ‘Cluster group’ is not running, then the cluster would not work. Means there would be no quorum and as a result the SQL server Availability Group will shut down and the databases within the AG would go into a non-accessible state.

Therefore we want to run the ‘Cluster Group’ out of the main site only. This is best done by using this sequence of PowerShell commands:

Set-ClusterOwnerNode -Group "Cluster Group" -Owners <first main site node name>, <second main site node name>

Where nodes names are written w/o any single or double quotes. The third node in the DR site is not listed. In this case the cluster configuration would try to run the ‘Cluster Group’ always from the first node in the list.

As a second command, we want to set the failback option with the following PowerShell command:

(Get-ClusterGroup "Cluster Group").AutoFailbackType = 1

In this case, the ‘cluster group’ would fall back to the first preferred owner as soon as the first node in the list is coming up again.

One also could move the ‘Cluster Group’ manually with the following PowerShell command:

Move-ClusterGroup "Cluster Group"

Like shown here:

That is about it with our second reference configuration for SAP and SQL Server 2012 AlwaysOn.Let's check out a third one next week and then start to create a first Availability Group on our SAP ERP system.

Comments

Anonymous

April 19, 2012

Thank you so much for the great articles on Always On. I do have few questions. On this article, is DR site SQL Server located on different datacenter as well as different subnet?Anonymous

April 19, 2012

Yes, in this case the DR site is a different subnet. You see more details on how to setup the configuration in Part 6. Thanks.Anonymous

August 19, 2012

This article is what I need. I'd appreciate it. Let me make sure 2 points.

- Can WSFC on the right side (CI, ASCS, SCS and ERS) and the other on the left side (AlwaysOn DB sync) be implemented on a same WSFC 2 nodes cluster? It means, can that architecture be built on 2 nodes in the primary site? (except SAP App. servers)

- For DR of CI, ASCS, SCS and ERS, do we need to build same WSFC 2 nodes cluster in the DR site? I guess it's not just showed in the figure.