GPT-4o Realtime API for speech and audio (Preview)

Note

This feature is currently in public preview. This preview is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

Azure OpenAI GPT-4o Realtime API for speech and audio is part of the GPT-4o model family and supports low-latency, "speech in, speech out" conversational interactions. It's a good fit for use cases involving live interactions between a user and a model, such as customer support agents, voice assistants, and real-time translators.

Most users of the Realtime API need to send audio to and receive audio from an end user in real time, including applications that use WebRTC or a telephony system. The Realtime API isn't designed to connect directly to end-user devices and relies on client integrations to terminate end-user audio streams.

Supported models

Currently, only the gpt-4o-realtime-preview model, version 2024-10-01-preview, supports real-time audio.

The gpt-4o-realtime-preview model is available for global deployments in the East US 2 and Sweden Central regions.

Important

The system stores your prompts and completions as described in the "Data Use and Access for Abuse Monitoring" section of the service-specific Product Terms for Azure OpenAI Service, except that the Limited Exception does not apply. Abuse monitoring will be turned on for use of the gpt-4o-realtime-preview API even for customers who otherwise are approved for modified abuse monitoring.

API support

Support for the Realtime API was first added in API version 2024-10-01-preview.

Note

For more information about the API and architecture, see the Azure OpenAI GPT-4o real-time audio repository on GitHub.
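As an illustration of where the API version fits in, the sketch below builds a session URL from a resource endpoint and a deployment name. It assumes that the Realtime API is reached over a secure WebSocket at the /openai/realtime path with api-version and deployment query parameters. The helper name buildRealtimeUrl is hypothetical; check the API reference for the authoritative URL shape.

```javascript
// Sketch only: buildRealtimeUrl is a hypothetical helper, not part of any SDK.
// Assumption: the service accepts WebSocket connections at /openai/realtime
// and reads `api-version` and `deployment` from the query string.
function buildRealtimeUrl(endpoint, deployment, apiVersion = "2024-10-01-preview") {
  // Keep only the host of the resource endpoint; any path on it is ignored.
  const host = new URL(endpoint).host;
  const url = new URL(`wss://${host}/openai/realtime`);
  url.searchParams.set("api-version", apiVersion);
  url.searchParams.set("deployment", deployment);
  return url.toString();
}

// Example with placeholder values:
console.log(buildRealtimeUrl("https://my-azure-openai-resource-from-portal.openai.azure.com", "my-deployment"));
```

Because only the host of the endpoint is kept, the caller can pass the endpoint exactly as it appears in the portal.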

Prerequisites

- An Azure subscription. Create one for free.

- An Azure OpenAI resource created in a supported region. For more information, see Create a resource and deploy a model with Azure OpenAI.

Deploy a model for real-time audio

Before you can use GPT-4o real-time audio, you need a deployment of the gpt-4o-realtime-preview model in a supported region as described in the supported models section.

- Go to the Azure AI Foundry home page and make sure you're signed in with the Azure subscription that has your Azure OpenAI Service resource (with or without model deployments).

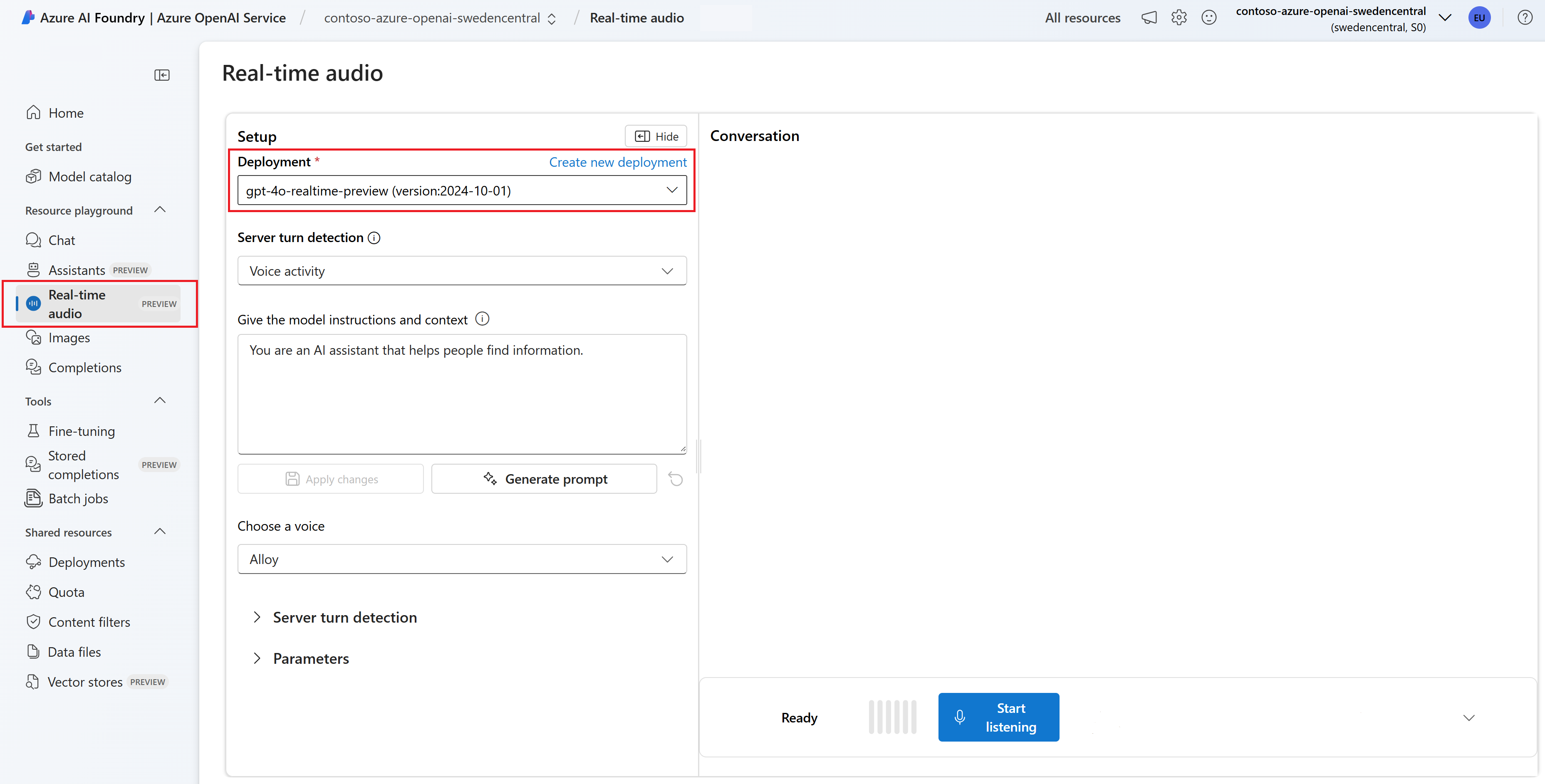

- Select the Real-time audio playground from under Resource playground in the left pane.

- Select + Create a deployment to open the deployment window.

- Search for and select the gpt-4o-realtime-preview model and then select Confirm.

- In the deployment wizard, make sure to select the 2024-10-01 model version.

- Follow the wizard to deploy the model.

Now that you have a deployment of the gpt-4o-realtime-preview model, you can interact with it in real time in the Azure AI Foundry portal Real-time audio playground or through the Realtime API.

Use the GPT-4o real-time audio

Tip

Right now, the fastest way to get started with development on the GPT-4o Realtime API is to download the sample code from the Azure OpenAI GPT-4o real-time audio repository on GitHub.

To chat with your deployed gpt-4o-realtime-preview model in the Azure AI Foundry Real-time audio playground, follow these steps:

- Go to the Azure OpenAI Service page in Azure AI Foundry portal. Make sure you're signed in with the Azure subscription that has your Azure OpenAI Service resource and the deployed gpt-4o-realtime-preview model.

- Select the Real-time audio playground from under Resource playground in the left pane.

- Select your deployed gpt-4o-realtime-preview model from the Deployment dropdown.

- Select Enable microphone to allow the browser to access your microphone. If you already granted permission, you can skip this step.

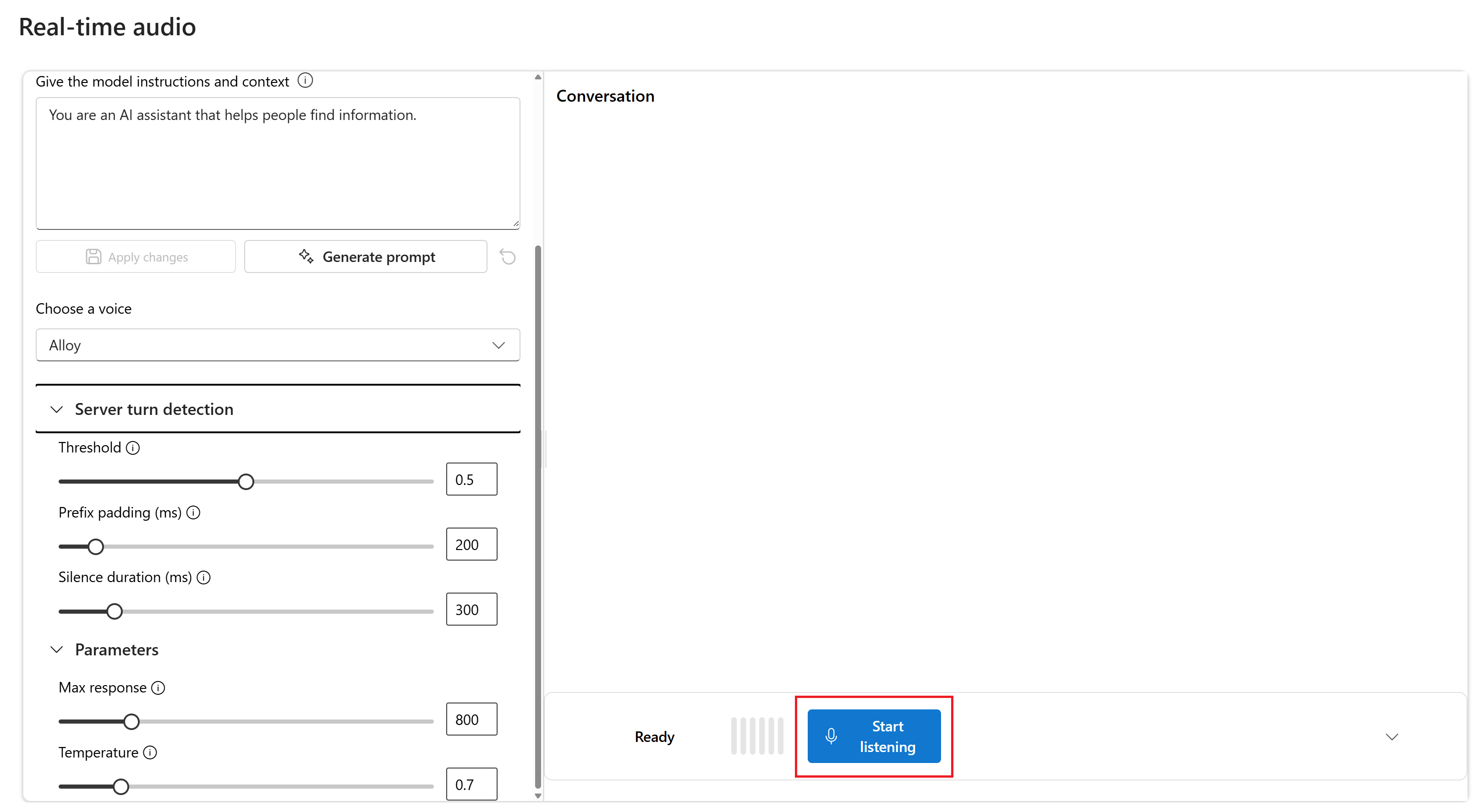

- Optionally, edit the contents of the Give the model instructions and context text box. Give the model instructions about how it should behave and any context it should reference when generating a response. You can describe the assistant's personality, tell it what it should and shouldn't answer, and tell it how to format responses.

- Optionally, change settings such as threshold, prefix padding, and silence duration.

- Select Start listening to start the session. You can speak into the microphone to start a chat.

- You can interrupt the chat at any time by speaking. You can end the chat by selecting the Stop listening button.
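The instructions and the threshold, prefix padding, and silence duration settings in the playground have counterparts when you call the Realtime API yourself. The following sketch assumes that a client configures them with a session.update event whose turn_detection object uses server-side voice activity detection. The helper name buildSessionUpdate and the default values are illustrative placeholders, not recommended settings.

```javascript
// Sketch only: buildSessionUpdate is a hypothetical helper.
// Assumption: the session is configured by sending a "session.update" event,
// and turn detection uses the "server_vad" type with these field names.
function buildSessionUpdate({
  instructions,
  threshold = 0.5,          // illustrative value: voice activity sensitivity, 0 to 1
  prefixPaddingMs = 300,    // illustrative value: audio kept before detected speech
  silenceDurationMs = 200,  // illustrative value: silence that ends the user's turn
} = {}) {
  if (threshold < 0 || threshold > 1) {
    throw new RangeError("threshold must be between 0 and 1");
  }
  const session = {
    turn_detection: {
      type: "server_vad",
      threshold,
      prefix_padding_ms: prefixPaddingMs,
      silence_duration_ms: silenceDurationMs,
    },
  };
  // Only include instructions when the caller provides them.
  if (instructions) {
    session.instructions = instructions;
  }
  return { type: "session.update", session };
}

// The resulting object would be serialized with JSON.stringify and sent
// over the open session connection.
const update = buildSessionUpdate({ instructions: "Answer briefly.", silenceDurationMs: 500 });
console.log(JSON.stringify(update, null, 2));
```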

The JavaScript web sample demonstrates how to use the GPT-4o Realtime API to interact with the model in real time. The sample code includes a simple web interface that captures audio from the user's microphone and sends it to the model for processing. The model responds with text and audio, which the sample code renders in the web interface.
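To make the "captures audio and sends it to the model" step more concrete, here is a minimal sketch of how one chunk of microphone samples could be packaged for sending. It assumes that the session expects 16-bit little-endian PCM audio, base64-encoded inside an input_audio_buffer.append event. The function names are hypothetical, and the sample in the repository may structure this differently.

```javascript
// Sketch only: these helpers are hypothetical and not taken from the sample.
// Assumption: input audio is 16-bit PCM, base64-encoded, and sent in an
// "input_audio_buffer.append" event.

// Convert Web Audio style float samples (-1..1) to 16-bit signed integers.
function floatTo16BitPcm(samples) {
  const pcm = new Int16Array(samples.length);
  for (let i = 0; i < samples.length; i++) {
    const s = Math.max(-1, Math.min(1, samples[i])); // clamp out-of-range input
    pcm[i] = s < 0 ? s * 0x8000 : s * 0x7fff;
  }
  return pcm;
}

// Base64-encode the raw bytes. Int16Array uses the platform byte order,
// which is little-endian on common hardware.
function toBase64(pcm) {
  const bytes = new Uint8Array(pcm.buffer, pcm.byteOffset, pcm.byteLength);
  let binary = "";
  for (const b of bytes) {
    binary += String.fromCharCode(b);
  }
  return btoa(binary);
}

// Wrap one chunk of samples in the event that carries audio to the model.
function buildAudioAppend(samples) {
  return {
    type: "input_audio_buffer.append",
    audio: toBase64(floatTo16BitPcm(samples)),
  };
}

console.log(buildAudioAppend(new Float32Array([0, 0.5, -0.5])));
```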

You can run the sample code locally on your machine by following these steps. Refer to the repository on GitHub for the most up-to-date instructions.

- If you don't have Node.js installed, download and install the LTS version of Node.js.

- Clone the repository to your local machine: git clone https://github.com/Azure-Samples/aoai-realtime-audio-sdk.git

- Go to the javascript/samples/web folder in your preferred code editor. cd ./javascript/samples

- Run download-pkg.ps1 or download-pkg.sh to download the required packages.

- Go to the web folder from the ./javascript/samples folder. cd ./web

- Run npm install to install package dependencies.

- Run npm run dev to start the web server, navigating any firewall permissions prompts as needed.

- Go to any of the provided URIs from the console output (such as http://localhost:5173/) in a browser.

- Enter the following information in the web interface:

  - Endpoint: The resource endpoint of an Azure OpenAI resource. You don't need to append the /realtime path. An example structure might be https://my-azure-openai-resource-from-portal.openai.azure.com.

  - API Key: A corresponding API key for the Azure OpenAI resource.

  - Deployment: The name of the gpt-4o-realtime-preview model that you deployed in the previous section.

  - System Message: Optionally, you can provide a system message such as "You always talk like a friendly pirate."

  - Temperature: Optionally, you can provide a custom temperature.

  - Voice: Optionally, you can select a voice.

- Select the Record button to start the session. Accept permissions to use your microphone if prompted.

- You should see a << Session Started >> message in the main output. Then you can speak into the microphone to start a chat.

- You can interrupt the chat at any time by speaking. You can end the chat by selecting the Stop button.
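The behavior described in the last steps (a session-started message, streamed text and audio, and interruption by speaking) can be pictured as a small dispatcher over server events. The sketch below is not the sample's actual code. It assumes the server emits events named session.created, input_audio_buffer.speech_started, response.audio_transcript.delta, and response.audio.delta, and the ui object with its methods is hypothetical.

```javascript
// Sketch only: handleServerEvent and the `ui` methods are hypothetical.
// Assumption: each server message is a JSON object with a `type` field,
// using the event names in the cases below.
function handleServerEvent(event, ui) {
  switch (event.type) {
    case "session.created":
      // The session is ready; this is where a sample might print its banner.
      ui.log("<< Session Started >>");
      break;
    case "input_audio_buffer.speech_started":
      // The user started talking: stop any model audio that is still playing.
      ui.stopPlayback();
      break;
    case "response.audio_transcript.delta":
      // Incremental text for what the model is saying.
      ui.appendText(event.delta);
      break;
    case "response.audio.delta":
      // Incremental base64 audio to queue for playback.
      ui.enqueueAudio(event.delta);
      break;
    default:
      // Ignore event types this sketch doesn't handle.
      break;
  }
}

// Usage with a console-backed stand-in for the user interface:
const consoleUi = {
  log: (msg) => console.log(msg),
  stopPlayback: () => console.log("[playback stopped]"),
  appendText: (text) => console.log("[text]", text),
  enqueueAudio: (chunk) => console.log("[audio chunk]", chunk.length, "base64 chars"),
};
handleServerEvent({ type: "session.created" }, consoleUi);
```

Stopping playback as soon as speech is detected is what makes the interruption feel immediate to the user.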

Related content

- Learn more about how to use the Realtime API

- See the Realtime API reference

- Learn more about Azure OpenAI quotas and limits