Use the Azure AI model inference endpoint

The Azure AI model inference service in Azure AI Services lets you consume the most powerful models from flagship model providers using a single endpoint and a single set of credentials. This means that you can switch between models and consume them from your application without changing a single line of code.

This article explains how models are organized in the service and how to use the inference endpoint to invoke them.

Deployments

The Azure AI model inference service makes models available through deployments. A deployment gives a name to a model under a specific configuration. You can then invoke that model configuration by specifying the deployment name in your requests.

Deployments capture:

- A model name
- A model version
- A provisioning/capacity type¹
- A content filtering configuration¹
- A rate limiting configuration¹

¹ Configurations may vary depending on the model you have selected.

An Azure AI Services resource can have as many model deployments as needed, and they don't incur cost unless inference is performed for those models. Deployments are Azure resources and are therefore subject to Azure policies.

To learn more about how to create deployments, see Add and configure model deployments.

Azure AI inference endpoint

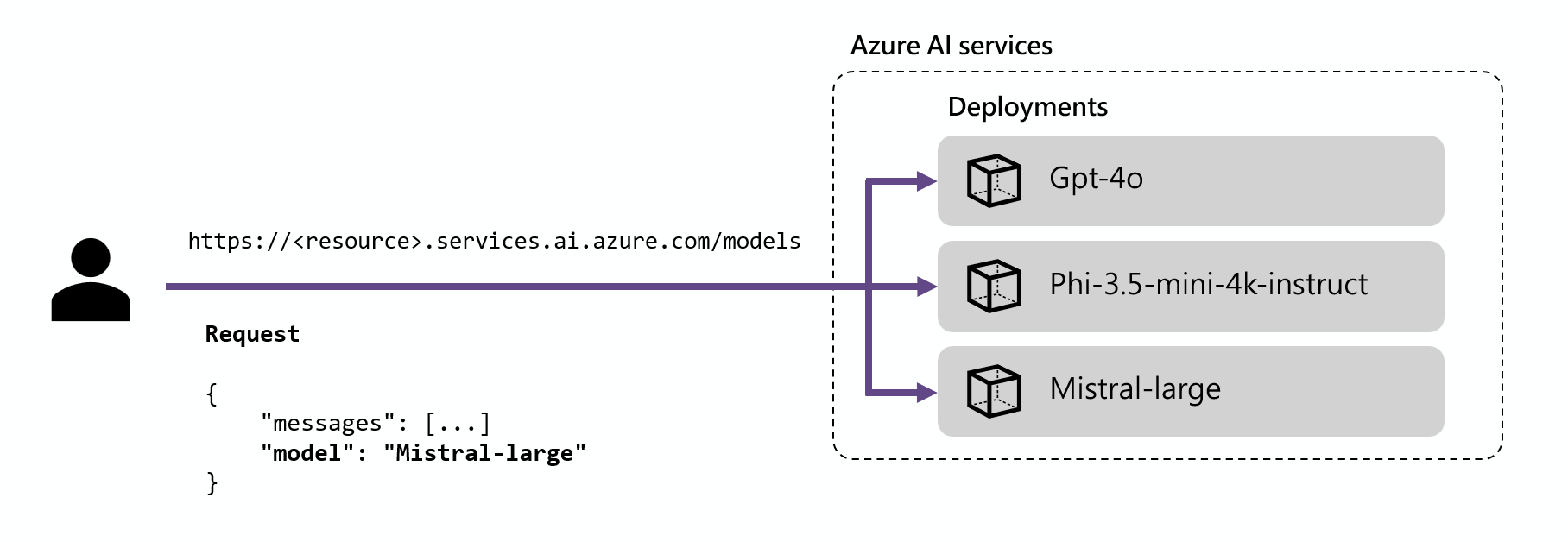

The Azure AI inference endpoint lets you use a single endpoint, with the same authentication and schema, to run inference against any of the models deployed in the resource. This endpoint follows the Azure AI model inference API, which is supported by all the models in the Azure AI model inference service.

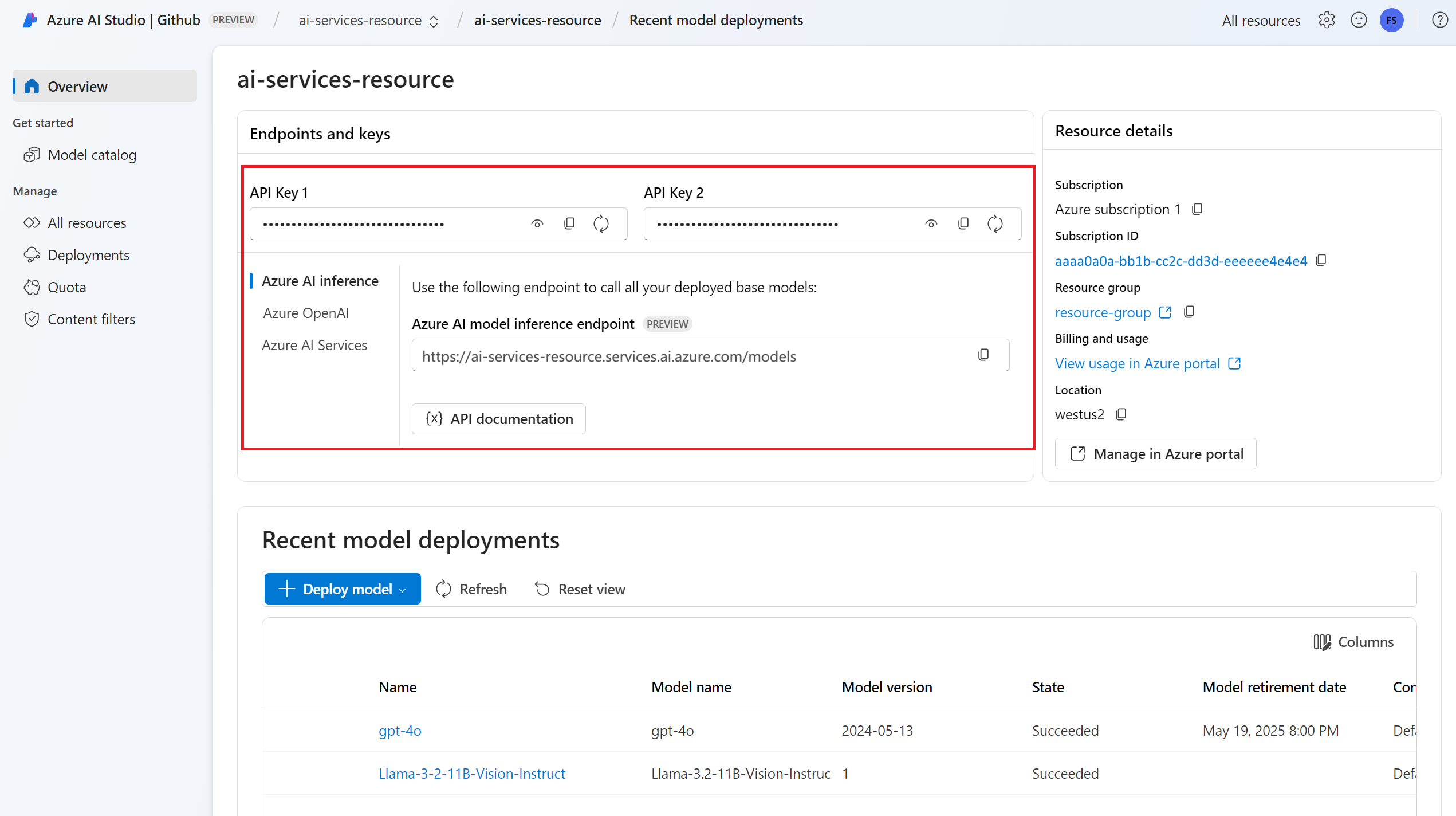

You can see the endpoint URL and credentials in the Overview section. The endpoint usually has the form https://<resource-name>.services.ai.azure.com/models.

You can connect to the endpoint using the Azure AI Inference SDK.

Install the azure-ai-inference package using your package manager, such as pip. Quote the requirement so that the shell doesn't treat >= as an output redirection:

pip install "azure-ai-inference>=1.0.0b5"

Warning

Azure AI Services resources require azure-ai-inference version 1.0.0b5 or later for Python.

Then, you can use the package to consume the model. The following example shows how to create a client to consume chat completions:

import os

from azure.ai.inference import ChatCompletionsClient
from azure.core.credentials import AzureKeyCredential

client = ChatCompletionsClient(
    endpoint=os.environ["AZUREAI_ENDPOINT_URL"],
    credential=AzureKeyCredential(os.environ["AZUREAI_ENDPOINT_KEY"]),
)

Explore our samples and read the API reference documentation to get started.

See Supported languages and SDKs for more code examples and resources.

Routing

The inference endpoint routes each request to a deployment by matching the model parameter in the request to the name of the deployment. A deployment therefore works as an alias for a given model under a specific configuration. This flexibility allows you to deploy the same model multiple times in the service, each time under a different configuration.

For example, if you create a deployment named Mistral-large, you can invoke that deployment as follows:

from azure.ai.inference.models import SystemMessage, UserMessage

response = client.complete(
    messages=[
        SystemMessage(content="You are a helpful assistant."),
        UserMessage(content="Explain Riemann's conjecture in 1 paragraph"),
    ],
    model="mistral-large",
)

print(response.choices[0].message.content)
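Because the deployment is selected only by the model argument, switching between deployments can be reduced to changing a string. The following is a minimal sketch of that idea, not part of the SDK: ask is a hypothetical helper, and it assumes that client is a ChatCompletionsClient like the one created earlier and that messages can be passed as plain dictionaries.

```python
def ask(client, deployment_name: str, question: str) -> str:
    """Send one user message to the named deployment and return the reply text.

    `client` is assumed to be a ChatCompletionsClient like the one created
    earlier. `ask` is a hypothetical helper, not an SDK function.
    """
    response = client.complete(
        # Messages are passed as plain dicts, so no model classes are imported.
        messages=[{"role": "user", "content": question}],
        # The deployment name is the only value that selects the model.
        model=deployment_name,
    )
    return response.choices[0].message.content
```

Calling ask(client, "mistral-large", "...") and then ask(client, "<another-deployment-name>", "...") runs the same code path against two different deployments.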

Tip

Deployment routing is not case sensitive.
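To make the alias idea concrete, here is a toy model of name-based routing. It is only an illustration of the behavior described above, not how the service is implemented: the Deployment fields, the DeploymentRouter class, and the version and filter values are all made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    # Hypothetical fields that mirror some of what a deployment captures.
    name: str
    model_name: str
    model_version: str
    content_filter: str


class DeploymentRouter:
    """Toy lookup table from deployment name to deployment, ignoring case."""

    def __init__(self, deployments):
        # casefold() makes the lookup key case-insensitive.
        self._by_name = {d.name.casefold(): d for d in deployments}

    def resolve(self, requested_name: str) -> Deployment:
        try:
            return self._by_name[requested_name.casefold()]
        except KeyError:
            raise LookupError(f"No deployment named {requested_name!r}") from None


# The same model deployed twice under different (made-up) configurations.
router = DeploymentRouter([
    Deployment("Mistral-large", "Mistral-large", "1", "default"),
    Deployment("mistral-large-strict", "Mistral-large", "1", "strict"),
])
```

In this sketch, router.resolve("mistral-large") and router.resolve("MISTRAL-LARGE") return the same deployment, while router.resolve("mistral-large-strict") returns the second configuration of the same underlying model.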

Supported languages and SDKs

All models deployed in Azure AI model inference service support the Azure AI model inference API and its associated family of SDKs, which are available in the following languages:

| Language | Documentation | Package | Examples |
|---|---|---|---|
| C# | Reference | azure-ai-inference (NuGet) | C# examples |
| Java | Reference | azure-ai-inference (Maven) | Java examples |
| JavaScript | Reference | @azure/ai-inference (npm) | JavaScript examples |
| Python | Reference | azure-ai-inference (PyPI) | Python examples |

Azure OpenAI inference endpoint

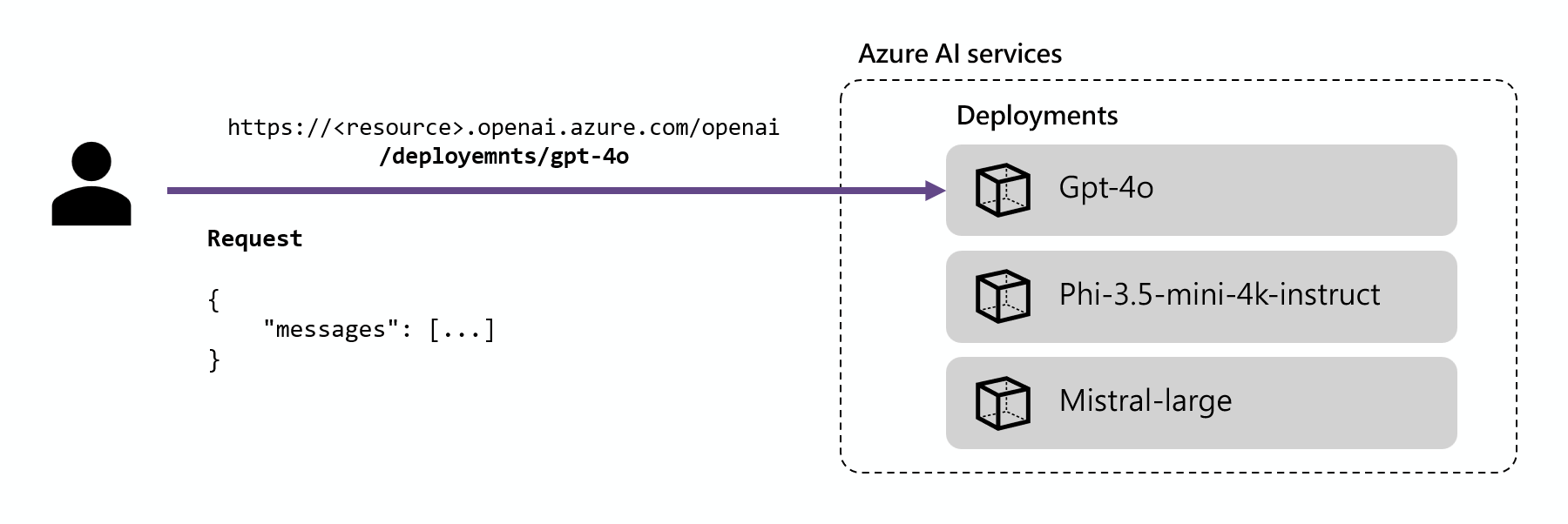

Azure OpenAI models also support the Azure OpenAI API. This API exposes the full capabilities of OpenAI models and supports additional features like assistants, threads, files, and batch inference.

Each OpenAI model deployment has its own URL under the Azure OpenAI inference endpoint, but all deployments in the resource can be consumed with the same authentication mechanism. A deployment's URL is the concatenation of the Azure OpenAI base URL and the route /deployments/<model-deployment-name>, so URLs usually have the form https://<resource-name>.openai.azure.com/openai/deployments/<model-deployment-name>. Learn more in the reference page for the Azure OpenAI API.

Important

There is no routing mechanism for the Azure OpenAI endpoint, as each URL is exclusive for each model deployment.
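The difference between the two endpoints can be sketched by building both URL shapes from the forms given in this article. The function names and the resource and deployment names are hypothetical, and the article only says that URLs usually have these forms, so read the actual values from the Overview section of your resource.

```python
from urllib.parse import quote


def model_inference_endpoint(resource_name: str) -> str:
    """One shared URL per resource; the deployment is chosen in the request."""
    return f"https://{resource_name}.services.ai.azure.com/models"


def azure_openai_deployment_url(resource_name: str, deployment_name: str) -> str:
    """One URL per deployment: base URL plus /deployments/<name>."""
    base_url = f"https://{resource_name}.openai.azure.com/openai"
    # Percent-encode the name so it is always a single, valid path segment.
    return f"{base_url}/deployments/{quote(deployment_name, safe='')}"
```

With a hypothetical resource named my-resource, the first function returns the same URL for every deployment, while the second returns a different URL for each deployment name.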

Supported languages and SDKs

The Azure OpenAI endpoint is supported by the OpenAI SDK (AzureOpenAI class) and Azure OpenAI SDKs, which are available in multiple languages:

| Language | Source code | Package | Examples |
|---|---|---|---|
| C# | Source code | Azure.AI.OpenAI (NuGet) | C# examples |
| Go | Source code | azopenai (Go) | Go examples |
| Java | Source code | azure-ai-openai (Maven) | Java examples |
| JavaScript | Source code | @azure/openai (npm) | JavaScript examples |
| Python | Source code | openai (PyPI) | Python examples |