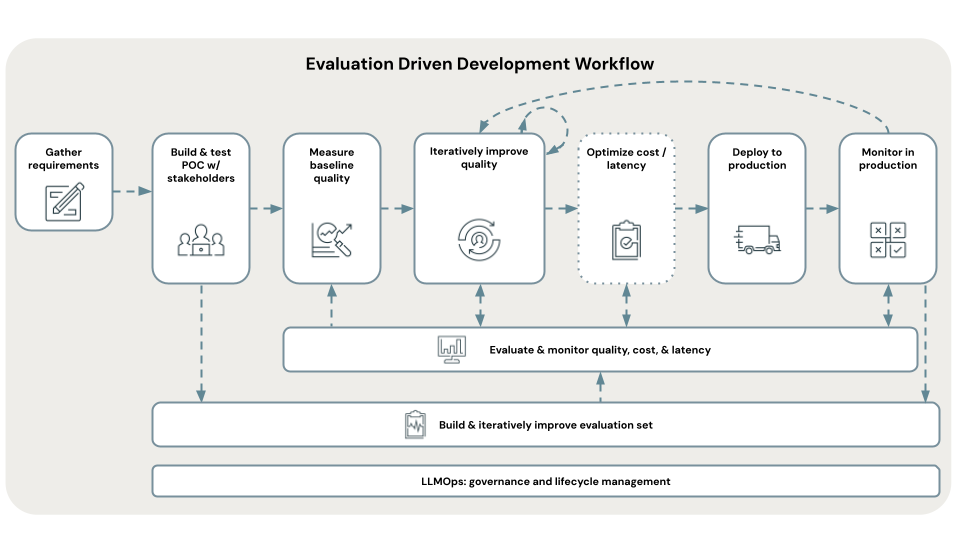

Evaluation-driven development workflow

This section walks you through evaluation-driven development, the workflow Databricks recommends for building, testing, and deploying a high-quality RAG application. The workflow is based on the Mosaic Research team’s best practices for building and evaluating RAG applications and consists of the following steps:

- Define the requirements.

- Collect stakeholder feedback on a rapid proof of concept (POC).

- Evaluate the POC’s quality.

- Iteratively diagnose and fix quality issues.

- Deploy to production.

- Monitor in production.

There are two core concepts in evaluation-driven development:

Metrics: Defining what high quality means.

Just as you set business goals each year, you need to define what high quality means for your use case. Mosaic AI Agent Evaluation provides a suggested set of metrics, the most important of which is answer accuracy, or correctness: is the RAG application providing the right answer?

Evaluation set: Objectively measuring the metrics.

To objectively measure quality, you need an evaluation set, which contains questions with known-good answers validated by humans. This guide walks you through the process of developing and iteratively refining this evaluation set.
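As a rough illustration of how an evaluation set and a correctness metric fit together, the sketch below scores an application against a few questions with known-good answers. It does not use the Mosaic AI Agent Evaluation API. The names `EvalExample`, `is_correct`, `correctness`, and `toy_app` are hypothetical, the questions are invented sample data, and the substring check is only a placeholder for a real correctness judge.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class EvalExample:
    """One evaluation-set entry (hypothetical structure)."""
    question: str
    expected_answer: str  # known-good answer validated by a human


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences are ignored."""
    return " ".join(text.lower().split())


def is_correct(actual: str, expected: str) -> bool:
    # Placeholder judge (assumption): the response counts as correct when it
    # contains the expected answer. A real judge would be far more robust.
    return normalize(expected) in normalize(actual)


def correctness(app: Callable[[str], str], eval_set: Sequence[EvalExample]) -> float:
    """Return the fraction of evaluation questions the application answers correctly."""
    if not eval_set:
        raise ValueError("evaluation set must not be empty")
    hits = sum(is_correct(app(ex.question), ex.expected_answer) for ex in eval_set)
    return hits / len(eval_set)


# Invented sample data standing in for a human-validated evaluation set.
EVAL_SET = [
    EvalExample("What is the capital of France?", "Paris"),
    EvalExample("How many days are in a leap year?", "366"),
]


def toy_app(question: str) -> str:
    """Stand-in for a RAG application; it only knows one answer."""
    canned = {"What is the capital of France?": "The capital of France is Paris."}
    return canned.get(question, "I don't know.")


score = correctness(toy_app, EVAL_SET)
print(f"correctness: {score:.2f}")
```

Because the evaluation set is fixed and the expected answers are validated up front, the same score can be recomputed after every change to the application.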

Anchoring against metrics and an evaluation set provides the following benefits:

- You can refine your application’s quality iteratively and with confidence during development, because you no longer have to guess whether a change resulted in an improvement.

- Aligning with business stakeholders on the application’s readiness for production becomes more straightforward when you can confidently state, “We know our application answers the questions most critical to our business correctly and doesn’t hallucinate.”
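The first benefit can be made concrete with a small comparison of two versions of an application on the same evaluation set. The sketch below is a minimal example and not part of any Databricks API. The function `compare_runs`, the question IDs, and the pass/fail values are all hypothetical illustration data.

```python
def compare_runs(baseline: dict[str, bool], candidate: dict[str, bool]) -> dict:
    """Compare per-question pass/fail results from two versions of an application.

    Both dicts map a question ID to whether that version answered correctly.
    """
    if not baseline:
        raise ValueError("results must not be empty")
    if baseline.keys() != candidate.keys():
        raise ValueError("both runs must cover the same evaluation set")

    total = len(baseline)
    return {
        "baseline_accuracy": sum(baseline.values()) / total,
        "candidate_accuracy": sum(candidate.values()) / total,
        # Questions the change fixed, and questions it broke.
        "fixed": sorted(q for q in baseline if not baseline[q] and candidate[q]),
        "regressed": sorted(q for q in baseline if baseline[q] and not candidate[q]),
    }


# Invented results for four evaluation questions.
baseline_results = {"q1": True, "q2": False, "q3": False, "q4": True}
candidate_results = {"q1": True, "q2": True, "q3": True, "q4": False}

report = compare_runs(baseline_results, candidate_results)
print(report)
```

Reporting regressions alongside the overall score matters because a change can raise accuracy overall while breaking a question that is critical to the business.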

For a step-by-step walkthrough illustrating the evaluation-driven workflow, start with Prerequisite: Gather requirements.