Scale Azure OpenAI for JavaScript with Azure API Management

Learn how to add enterprise-grade load balancing to your application to extend the chat app beyond the Azure OpenAI Service token and model quota limits. This approach uses Azure API Management to intelligently direct traffic between three Azure OpenAI resources.

This article requires you to deploy two separate samples:

- Chat app:

  - If you haven't deployed the chat app yet, wait until after the load balancer sample is deployed.

  - If you already deployed the chat app once, change the environment variable to support a custom endpoint for the load balancer and redeploy it.

- Load balancer with Azure API Management.

Note

This article uses one or more AI app templates as the basis for the examples and guidance in the article. AI app templates provide you with well-maintained reference implementations that are easy to deploy. They help to ensure a high-quality starting point for your AI apps.

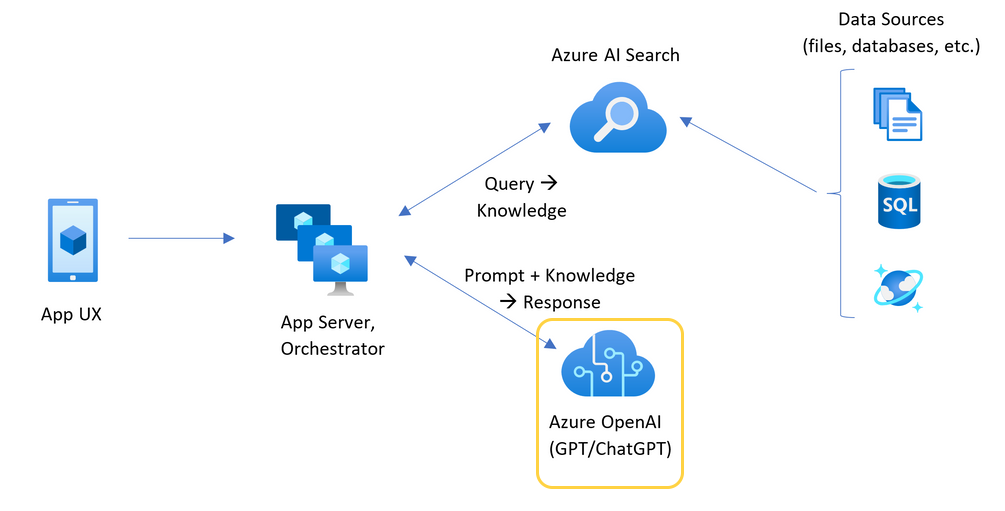

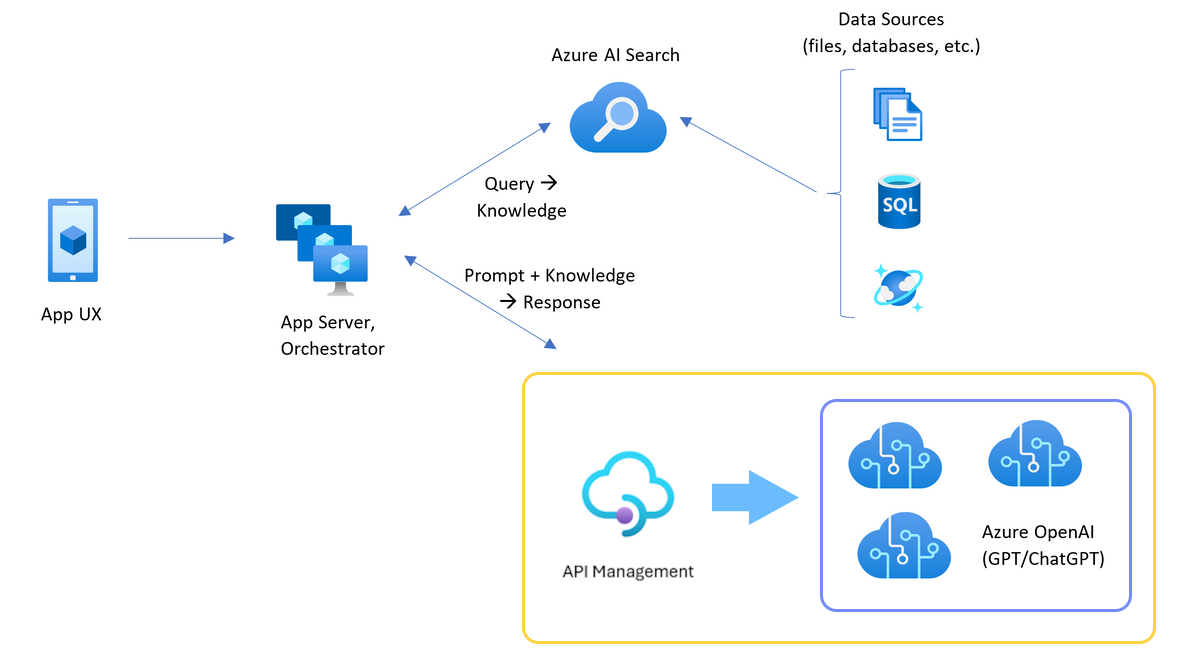

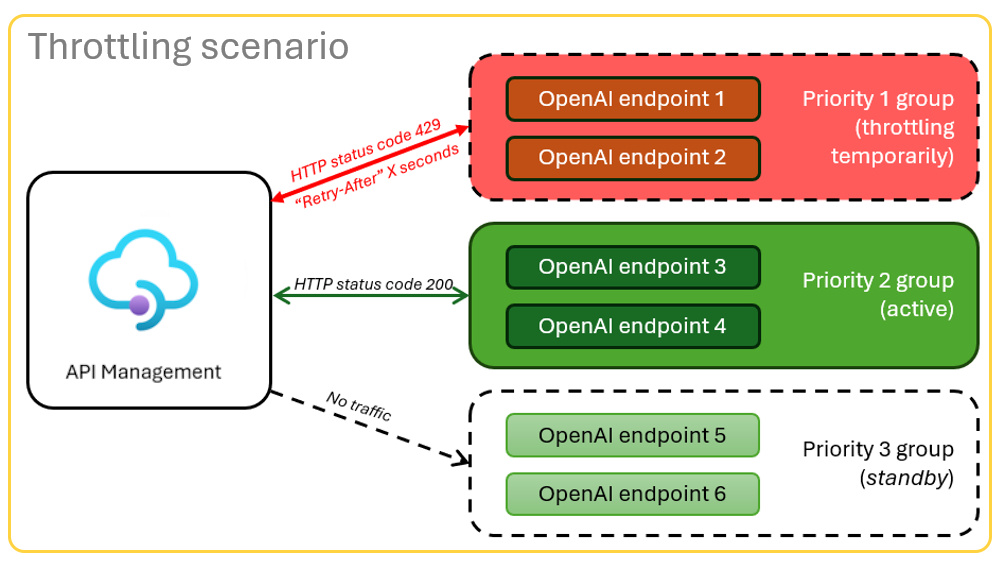

Architecture for load balancing Azure OpenAI with Azure API Management

Because an Azure OpenAI resource has specific token and model quota limits, a chat app that uses a single Azure OpenAI resource is prone to conversation failures caused by those limits.

To use the chat app without hitting those limits, use a load-balanced solution with API Management. This solution seamlessly exposes a single endpoint from API Management to your chat app server.

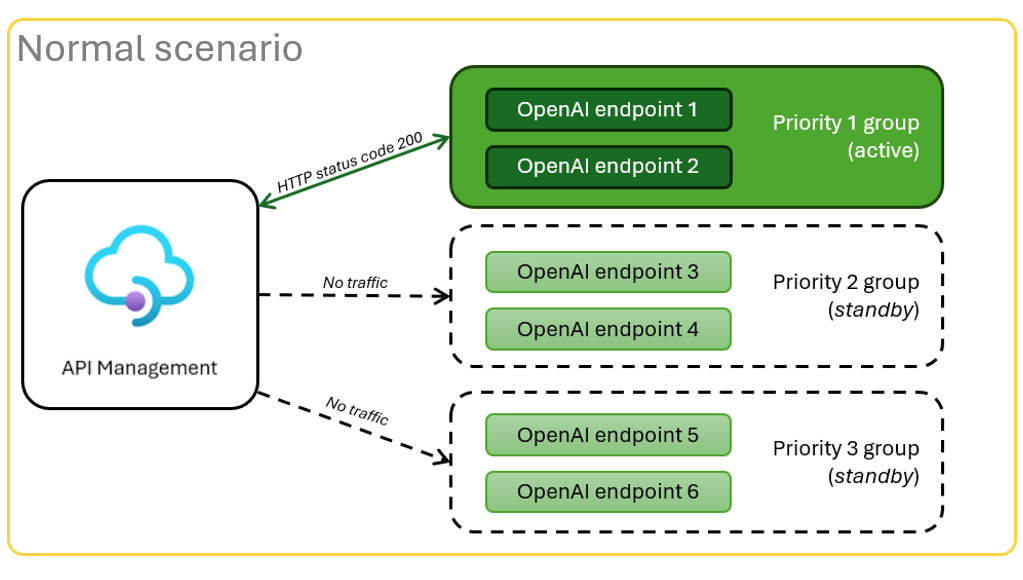

The API Management resource, as an API layer, sits in front of a set of Azure OpenAI resources. The API layer handles two scenarios: normal and throttled. In the normal scenario, where token and model quota is available, the Azure OpenAI resource returns a 200 response back through the API layer and the backend app server.

When a resource is throttled because of quota limits, the API layer can retry a different Azure OpenAI resource immediately to fulfill the original chat app request.
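The throttled scenario can be illustrated with a small in-memory simulation. This is only a sketch of the failover idea, not the policy that the sample deploys to API Management: the backend names, the `createBackend` and `routeRequest` helpers, and the response shape are all hypothetical, and a 429 status is assumed to represent a throttled resource.

```javascript
// Toy simulation of the failover behavior described above.
// Backend names and the response shape are hypothetical.

// Each simulated backend returns 429 once its remaining quota is used up.
function createBackend(name, remainingRequests) {
  return {
    name,
    handle() {
      if (remainingRequests <= 0) {
        return { status: 429, backend: name };
      }
      remainingRequests -= 1;
      return { status: 200, backend: name };
    },
  };
}

// Try each backend in order and move on immediately when one is throttled.
function routeRequest(backends) {
  for (const backend of backends) {
    const response = backend.handle();
    if (response.status !== 429) {
      return response;
    }
  }
  // Every backend is throttled, so the caller sees the throttling response.
  return { status: 429, backend: null };
}

const backends = [
  createBackend("openai-1", 1),
  createBackend("openai-2", 1),
  createBackend("openai-3", 0),
];

const results = [
  routeRequest(backends),
  routeRequest(backends),
  routeRequest(backends),
];
console.log(results);
```

In this run, the first request succeeds on the first backend, the second request fails over to the second backend, and the third request finds every backend throttled. The chat app only ever calls `routeRequest`, which mirrors how it sees a single endpoint.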

Prerequisites

An Azure subscription. Create one for free.

Dev containers are available for both samples, with all dependencies required to complete this article. You can run the dev containers in GitHub Codespaces (in a browser) or locally using Visual Studio Code.

- Only a GitHub account is required to use Codespaces.

Open the Azure API Management load balancer sample app

GitHub Codespaces runs a development container managed by GitHub with Visual Studio Code for the Web as the user interface. For the most straightforward development environment, use GitHub Codespaces so that you have the correct developer tools and dependencies preinstalled to complete this article.

Important

All GitHub accounts can use GitHub Codespaces for up to 60 hours free each month with two core instances. For more information, see GitHub Codespaces monthly included storage and core hours.

Deploy the Azure API Management load balancer

To deploy the load balancer to Azure, sign in to the Azure Developer CLI (AZD):

azd auth login

Finish the sign-in instructions.

Deploy the load balancer app:

azd up

Select a subscription and region for the deployment. They don't have to be the same subscription and region as the chat app.

Wait for the deployment to finish before you continue. This process might take up to 30 minutes.

Get the load balancer endpoint

Run the following Bash command to see the environment variables from the deployment. You need this information later.

azd env get-values | grep APIM_GATEWAY_URL
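If you want to read the gateway URL from a script rather than copy it by hand, the output can be parsed. The following sketch assumes the command prints one `KEY="value"` pair per line. The `parseEnvValues` helper and the sample output, including the placeholder URL, are hypothetical.

```javascript
// Parse KEY="value" lines, the format assumed for `azd env get-values` output.
function parseEnvValues(text) {
  const values = {};
  for (const line of text.split(/\r?\n/)) {
    const match = line.match(/^([A-Za-z_][A-Za-z0-9_]*)=(.*)$/);
    if (!match) continue; // Skip blank or unrecognized lines.
    // Strip one pair of surrounding double quotes, if present.
    values[match[1]] = match[2].replace(/^"(.*)"$/, "$1");
  }
  return values;
}

// Hypothetical sample output; the URL is a placeholder, not a real gateway.
const sampleOutput = [
  'AZURE_ENV_NAME="apim-lb"',
  'APIM_GATEWAY_URL="https://example-apim.azure-api.net"',
].join("\n");

const gatewayUrl = parseEnvValues(sampleOutput).APIM_GATEWAY_URL;
console.log(gatewayUrl);
```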

Redeploy the chat app with the load balancer endpoint

These examples are completed on the chat app sample.

Open the chat app sample's dev container by using one of the following choices.

The chat app sample is available for .NET, JavaScript, and Python. Each version can be opened in GitHub Codespaces or Visual Studio Code.

Sign in to the Azure Developer CLI (AZD):

azd auth login

Finish the sign-in instructions.

Create an AZD environment with a name such as chat-app:

azd env new <name>

Add the following environment variable, which tells the chat app's backend to use a custom URL for the Azure OpenAI requests:

azd env set OPENAI_HOST azure_custom

Add the following environment variable, which tells the chat app's backend the value of the custom URL for the Azure OpenAI requests:

azd env set AZURE_OPENAI_CUSTOM_URL <APIM_GATEWAY_URL>

Deploy the chat app:

azd up
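To make the role of these two variables concrete, here is a minimal sketch of how a backend might choose its Azure OpenAI base URL. It isn't the chat app's actual code. The `resolveOpenAiEndpoint` helper is hypothetical, and the `AZURE_OPENAI_SERVICE` variable used in the fallback branch is an assumption made for illustration.

```javascript
// Hypothetical helper: pick the Azure OpenAI base URL from environment values.
function resolveOpenAiEndpoint(env) {
  if (env.OPENAI_HOST === "azure_custom") {
    if (!env.AZURE_OPENAI_CUSTOM_URL) {
      throw new Error(
        "AZURE_OPENAI_CUSTOM_URL must be set when OPENAI_HOST is azure_custom"
      );
    }
    // Remove trailing slashes so request paths can be appended consistently.
    return env.AZURE_OPENAI_CUSTOM_URL.replace(/\/+$/, "");
  }
  // Assumption: otherwise the app targets a single resource by name.
  return `https://${env.AZURE_OPENAI_SERVICE}.openai.azure.com`;
}

// Placeholder values for illustration only.
const endpoint = resolveOpenAiEndpoint({
  OPENAI_HOST: "azure_custom",
  AZURE_OPENAI_CUSTOM_URL: "https://example-apim.azure-api.net/",
});
console.log(endpoint);
```

With the custom host selected, every request goes to the API Management gateway, so the chat app needs no knowledge of the individual Azure OpenAI resources behind it.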

Configure the TPM quota

By default, each of the Azure OpenAI instances in the load balancer is deployed with a capacity of 30,000 tokens per minute (TPM). You can use the chat app with the confidence that it's built to scale across many users without running out of quota. Change this value when:

- You get deployment capacity errors: Lower the value.

- You need higher capacity: Raise the value.

Use the following command to change the value:

azd env set OPENAI_CAPACITY 50

Redeploy the load balancer:

azd up
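As a rough guide to choosing a value, the combined quota behind the load balancer can be estimated with simple arithmetic. The sketch below assumes that OPENAI_CAPACITY is expressed in thousands of tokens per minute and that the load balancer fronts three Azure OpenAI instances, as described at the start of this article. The `totalTokensPerMinute` helper is hypothetical.

```javascript
// Assumption: OPENAI_CAPACITY is expressed in thousands of tokens per minute.
const TOKENS_PER_CAPACITY_UNIT = 1000;

// Combined TPM across all instances behind the load balancer.
function totalTokensPerMinute(capacityPerInstance, instanceCount) {
  return capacityPerInstance * TOKENS_PER_CAPACITY_UNIT * instanceCount;
}

const defaultTotal = totalTokensPerMinute(30, 3); // 30,000 TPM on each of 3 instances
const raisedTotal = totalTokensPerMinute(50, 3); // After setting OPENAI_CAPACITY to 50

console.log(defaultTotal); // 90000
console.log(raisedTotal); // 150000
```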

Clean up resources

When you're finished with the chat app and the load balancer, clean up the resources. The Azure resources created in this article are billed to your Azure subscription. If you don't expect to need these resources in the future, delete them to avoid incurring more charges.

Clean up the chat app resources

Return to the chat app article to clean up those resources.

Clean up the load balancer resources

Run the following Azure Developer CLI command to delete the Azure resources:

azd down --purge --force

The switches provide:

- purge: Deleted resources are immediately purged. You can reuse the Azure OpenAI tokens per minute.

- force: The deletion happens silently, without requiring user consent.

Clean up GitHub Codespaces

Deleting the GitHub Codespaces environment ensures that you can maximize the amount of free per-core hours entitlement that you get for your account.

Important

For more information about your GitHub account's entitlements, see GitHub Codespaces monthly included storage and core hours.

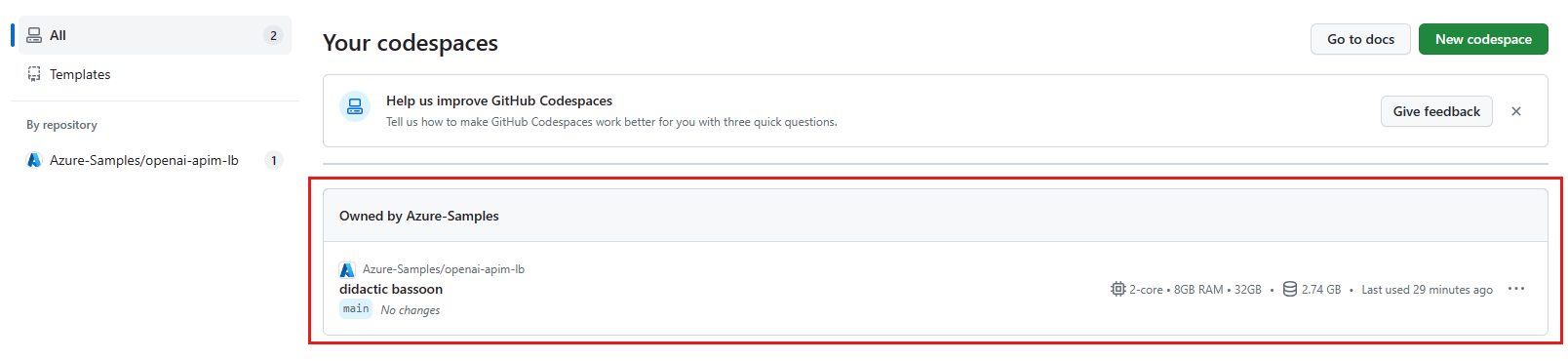

Sign in to the GitHub Codespaces dashboard.

Locate your currently running codespaces that are sourced from the azure-samples/openai-apim-lb GitHub repository.

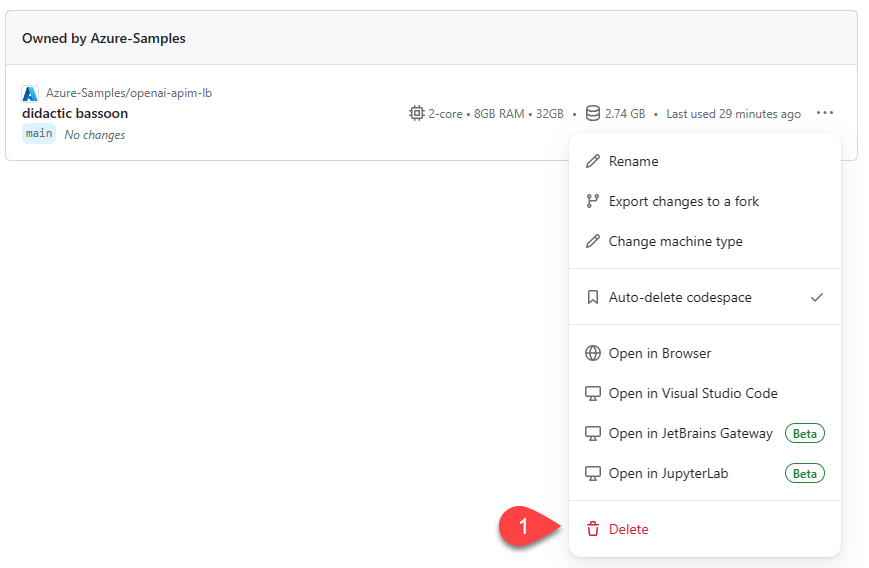

Open the context menu for the GitHub Codespaces item, and then select Delete.

Get help

If you have trouble deploying the Azure API Management load balancer, add your issue to the repository's Issues webpage.

Sample code

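Samples used in this article include the chat app sample and the load balancer sample in the azure-samples/openai-apim-lb GitHub repository.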

Next steps

- View Azure API Management diagnostic data in Azure Monitor

- Use Azure Load Testing to load test your chat app.