Configure dataflow endpoints for Azure Data Lake Storage Gen2

Important

Azure IoT Operations Preview – enabled by Azure Arc is currently in preview. You shouldn't use this preview software in production environments.

You'll need to deploy a new Azure IoT Operations installation when a generally available release becomes available. You won't be able to upgrade a preview installation.

For legal terms that apply to Azure features that are in beta, in preview, or otherwise not yet released into general availability, see the Supplemental Terms of Use for Microsoft Azure Previews.

To send data to Azure Data Lake Storage Gen2 in Azure IoT Operations Preview, you can configure a dataflow endpoint. This configuration lets you specify the destination endpoint, the authentication method, and other settings such as batching.

Prerequisites

- An instance of Azure IoT Operations Preview

- A configured dataflow profile

- An Azure Data Lake Storage Gen2 account

- A pre-created storage container in the storage account

Create an Azure Data Lake Storage Gen2 dataflow endpoint

To configure a dataflow endpoint for Azure Data Lake Storage Gen2, we suggest using the managed identity of the Azure Arc-enabled Kubernetes cluster. This approach is secure and eliminates the need for secret management. Alternatively, you can authenticate with the storage account by using an access token. In that case, you need to create a Kubernetes secret that contains a shared access signature (SAS) token.

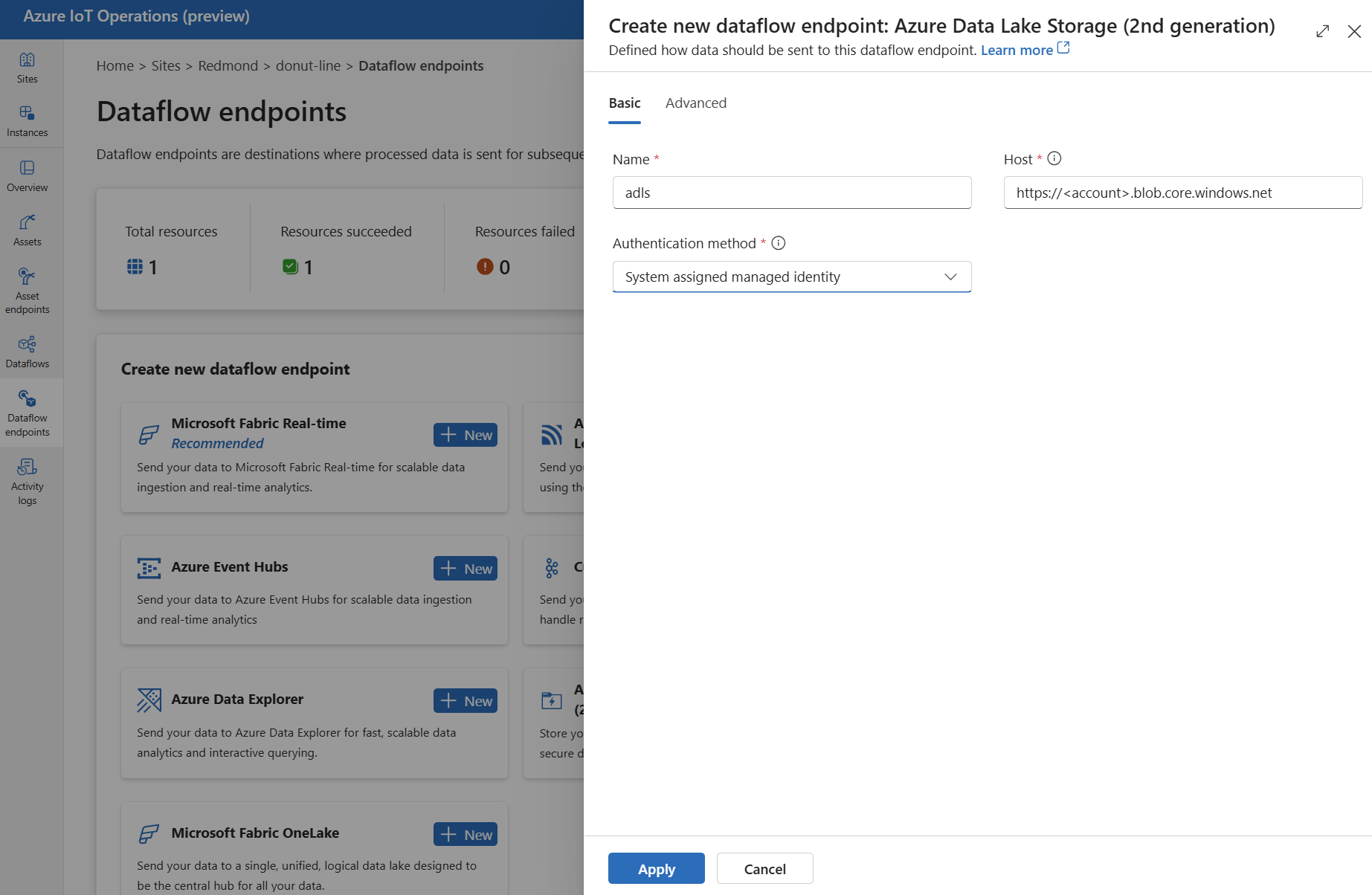

In the operations experience, select the Dataflow endpoints tab.

Under Create new dataflow endpoint, select Azure Data Lake Storage (2nd generation) > New.

Enter the following settings for the endpoint:

| Setting | Description |
|---|---|
| Name | The name of the dataflow endpoint. |
| Host | The hostname of the Azure Data Lake Storage Gen2 endpoint in the format <account>.blob.core.windows.net. Replace <account> with your storage account name. |
| Authentication method | The method used for authentication. Choose System assigned managed identity, User assigned managed identity, or Access token. |
| Client ID | The client ID of the user-assigned managed identity. Required if using User assigned managed identity. |
| Tenant ID | The tenant ID of the user-assigned managed identity. Required if using User assigned managed identity. |
| Access token secret name | The name of the Kubernetes secret containing the SAS token. Required if using Access token. |

Select Apply to provision the endpoint.
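The Host value is derived from the storage account name. The following is a minimal Python sketch of a hypothetical helper, adls_host, that checks the name against the general Azure storage account naming rule (3 to 24 characters, lowercase letters and digits only) and builds the hostname in the format described for the Host setting. It isn't part of any Azure SDK, and "contosodatalake" is a placeholder account name.

```python
import re

# General Azure storage account naming rule: 3-24 characters,
# lowercase letters and digits only.
_ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")


def is_valid_account_name(account: str) -> bool:
    """Return True if `account` satisfies the storage account naming rule."""
    return _ACCOUNT_NAME.fullmatch(account) is not None


def adls_host(account: str) -> str:
    """Build the Host setting in the <account>.blob.core.windows.net format.

    Hypothetical helper for illustration; not part of an Azure SDK.
    """
    if not is_valid_account_name(account):
        raise ValueError(f"invalid storage account name: {account!r}")
    return f"{account}.blob.core.windows.net"


if __name__ == "__main__":
    # "contosodatalake" is a placeholder account name.
    print(adls_host("contosodatalake"))  # contosodatalake.blob.core.windows.net
```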

If you need to override the system-assigned managed identity audience, see the System-assigned managed identity section.

Use access token authentication

Follow the steps in the access token section to get a SAS token for the storage account and store it in a Kubernetes secret.

Then, create the DataflowEndpoint resource with the access token authentication method, and specify the name of the secret that contains the SAS token.

In the operations experience, select the Dataflow endpoints tab.

Under Create new dataflow endpoint, select Azure Data Lake Storage (2nd generation) > New.

Enter the following settings for the endpoint:

| Setting | Description |
|---|---|
| Name | The name of the dataflow endpoint. |
| Host | The hostname of the Azure Data Lake Storage Gen2 endpoint in the format <account>.blob.core.windows.net. Replace <account> with your storage account name. |
| Authentication method | The method used for authentication. Choose Access token. |
| Synced secret name | The name of the Kubernetes secret that is synchronized with the ADLSv2 endpoint. |
| Access token secret name | The name of the Kubernetes secret containing the SAS token. |

Select Apply to provision the endpoint.
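If you manage resources as code rather than in the operations experience, the same access token endpoint can be described as a Kubernetes custom resource. The following Python sketch builds such a manifest as a dictionary and prints it as JSON, which kubectl apply -f accepts. The apiVersion, the namespace, and every field under spec (endpointType, dataLakeStorageSettings, authentication, accessTokenSettings, secretRef) are assumptions modeled on the preview DataflowEndpoint resource, so check them against the CRD installed in your cluster. The host follows the format in the settings table; your version might expect an https:// prefix. The names my-adls-endpoint, contosodatalake, and my-sas-secret are placeholders.

```python
import json

# ASSUMPTION: the apiVersion and the field names under "spec" mirror the
# preview DataflowEndpoint CRD; verify them against your cluster, for
# example with `kubectl explain dataflowendpoint.spec`.
API_VERSION = "connectivity.iotoperations.azure.com/v1beta1"


def access_token_endpoint(name: str, account: str, sas_secret_name: str,
                          namespace: str = "azure-iot-operations") -> dict:
    """Return a DataflowEndpoint manifest that authenticates with a SAS token."""
    return {
        "apiVersion": API_VERSION,
        "kind": "DataflowEndpoint",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "endpointType": "DataLakeStorage",
            "dataLakeStorageSettings": {
                # Host in the <account>.blob.core.windows.net format
                # (some versions might expect an https:// prefix).
                "host": f"{account}.blob.core.windows.net",
                "authentication": {
                    "method": "AccessToken",
                    # Reference the Kubernetes secret that holds the SAS token.
                    "accessTokenSettings": {"secretRef": sas_secret_name},
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = access_token_endpoint("my-adls-endpoint", "contosodatalake", "my-sas-secret")
    # Save the output to a file and apply it with: kubectl apply -f <file>
    print(json.dumps(manifest, indent=2))
```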

Available authentication methods

The following authentication methods are available for Azure Data Lake Storage Gen2 endpoints.

For more information about enabling secure settings by configuring an Azure Key Vault and enabling workload identities, see Enable secure settings in Azure IoT Operations Preview deployment.

System-assigned managed identity

Using the system-assigned managed identity is the recommended authentication method for Azure IoT Operations. Azure IoT Operations creates the managed identity automatically and assigns it to the Azure Arc-enabled Kubernetes cluster. It eliminates the need for secret management and allows for seamless authentication.

Before you create the dataflow endpoint, assign the managed identity a role that grants write permission to the storage account, such as Storage Blob Data Contributor. To learn more about assigning roles for blob access, see Authorize access to blobs using Microsoft Entra ID.

- In the Azure portal, go to your Azure IoT Operations instance and select Overview.

- Copy the name of the extension listed after Azure IoT Operations Arc extension. For example, azure-iot-operations-xxxx7.

- Search for the managed identity in the Azure portal by using the name of the extension. For example, search for azure-iot-operations-xxxx7.

- Assign a role to the Azure IoT Operations Arc extension managed identity that grants permission to write to the storage account, such as Storage Blob Data Contributor. To learn more, see Authorize access to blobs using Microsoft Entra ID.

- Create the DataflowEndpoint resource and specify the managed identity authentication method.
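The role assignment in the steps above can also be scripted with the Azure CLI command az role assignment create. The following Python sketch only assembles that command line so you can review it before running it; it doesn't call Azure. It assumes you already have the principal (object) ID of the Azure IoT Operations Arc extension's managed identity, which you can find by searching for the extension name as described above. The helper names are hypothetical, and the subscription ID, resource group, account name, and principal ID are placeholders.

```python
import shlex
from typing import List


def storage_account_scope(subscription_id: str, resource_group: str, account: str) -> str:
    """Return the Azure Resource Manager ID of a storage account (the role scope)."""
    return (f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
            f"/providers/Microsoft.Storage/storageAccounts/{account}")


def role_assignment_command(principal_id: str, scope: str,
                            role: str = "Storage Blob Data Contributor") -> List[str]:
    """Build an `az role assignment create` argument list for a managed identity."""
    return [
        "az", "role", "assignment", "create",
        "--assignee-object-id", principal_id,
        # Managed identities are service principals in Microsoft Entra ID.
        "--assignee-principal-type", "ServicePrincipal",
        "--role", role,
        "--scope", scope,
    ]


if __name__ == "__main__":
    scope = storage_account_scope("<SUBSCRIPTION_ID>", "<RESOURCE_GROUP>", "contosodatalake")
    cmd = role_assignment_command("<PRINCIPAL_ID>", scope)
    # Print a shell-quoted command; pass `cmd` to subprocess.run to execute it.
    print(shlex.join(cmd))
```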

On the dataflow endpoint settings page in the operations experience, select the Basic tab, and then choose Authentication method > System assigned managed identity.

In most cases, you don't need to specify a service audience. If you don't specify one, the managed identity uses the default audience, which is scoped to your storage account.

If you need to override the system-assigned managed identity audience, you can specify the audience setting.
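As a minimal sketch, the following Python function builds the authentication portion of a DataflowEndpoint manifest for the system-assigned managed identity and adds an audience only when you override it. The field names (method, systemAssignedManagedIdentitySettings, audience) are assumptions modeled on the preview resource schema, and the override value shown is a hypothetical example; verify both before use.

```python
from typing import Optional


def system_assigned_auth(audience: Optional[str] = None) -> dict:
    """Return the authentication block for the system-assigned managed identity.

    ASSUMPTION: field names mirror the preview DataflowEndpoint schema.
    """
    settings = {}
    # Omit the audience to use the default, which is scoped to the storage account.
    if audience is not None:
        settings["audience"] = audience
    return {
        "method": "SystemAssignedManagedIdentity",
        "systemAssignedManagedIdentitySettings": settings,
    }


if __name__ == "__main__":
    print(system_assigned_auth())
    # Hypothetical override value for illustration only.
    print(system_assigned_auth("https://contosodatalake.blob.core.windows.net"))
```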


Access token

Using an access token is an alternative authentication method. This method requires you to create a Kubernetes secret with the SAS token and reference the secret in the DataflowEndpoint resource.

Get a SAS token for an Azure Data Lake Storage Gen2 (ADLSv2) account. For example, use the Azure portal to browse to your storage account. On the left menu, choose Security + networking > Shared access signature. Use the following table to set the required permissions.

| Parameter | Enabled setting |
|---|---|
| Allowed services | Blob |
| Allowed resource types | Object, Container |
| Allowed permissions | Read, Write, Delete, List, Create |
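Before you store the token, you can check that it grants what the table lists. The following Python sketch parses an account SAS token with the standard library and reports any missing service (ss), resource type (srt), or permission (sp) letters, using the account SAS letter codes: b for Blob; c and o for Container and Object; and r, w, d, l, and c for Read, Write, Delete, List, and Create. It doesn't verify the signature or expiry, and it doesn't apply to container-scoped service SAS tokens, which use different parameters. The function name and the sample token are hypothetical.

```python
from typing import Dict
from urllib.parse import parse_qs

# Letters an account SAS must include for the settings in the table.
REQUIRED = {
    "ss": set("b"),       # Allowed services: Blob
    "srt": set("co"),     # Allowed resource types: Container, Object
    "sp": set("rwdlc"),   # Allowed permissions: Read, Write, Delete, List, Create
}


def missing_sas_grants(token: str) -> Dict[str, str]:
    """Return {parameter: missing letters}; an empty dict means all grants are present."""
    # Tolerate a leading "?" as it appears in some copies of the token.
    params = parse_qs(token.lstrip("?"))
    missing = {}
    for key, required in REQUIRED.items():
        granted = set(params.get(key, [""])[0])
        gap = required - granted
        if gap:
            missing[key] = "".join(sorted(gap))
    return missing


if __name__ == "__main__":
    # Placeholder token; the signature is not a real value.
    token = "?sv=2022-11-02&ss=b&srt=co&sp=rl&se=2030-01-01T00:00:00Z&spr=https&sig=placeholder"
    print(missing_sas_grants(token))  # {'sp': 'cdw'}
```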

To enhance security and follow the principle of least privilege, you can generate a SAS token for a specific container. To prevent authentication errors, ensure that the container specified in the SAS token matches the dataflow destination setting in the configuration.

On the dataflow endpoint settings page in the operations experience, select the Basic tab, and then choose Authentication method > Access token.

In the Access token secret name field, enter the name of the secret you created.

To learn more about secrets, see Create and manage secrets in Azure IoT Operations Preview.
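If you create the secret directly in Kubernetes rather than syncing it from Azure Key Vault, one option is to apply a standard Kubernetes Secret manifest. The following Python sketch builds one with the SAS token base64-encoded under data, as Kubernetes requires for that field. The data key accessToken and the azure-iot-operations namespace are assumptions; use the key and namespace your dataflow endpoint expects. The function name, secret name, and token are hypothetical. A kubectl create secret generic command with --from-literal achieves the same result.

```python
import base64
import json


def sas_secret_manifest(name: str, sas_token: str,
                        namespace: str = "azure-iot-operations",
                        key: str = "accessToken") -> dict:
    """Return a Kubernetes Secret manifest that stores a SAS token.

    ASSUMPTION: the data key and namespace match what the endpoint expects.
    """
    # Values under "data" must be base64-encoded strings.
    encoded = base64.b64encode(sas_token.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {key: encoded},
    }


if __name__ == "__main__":
    # Placeholder token; never commit a real SAS token to source control.
    manifest = sas_secret_manifest("my-sas-secret", "sv=2022-11-02&ss=b&srt=co&sp=rwdlc&sig=placeholder")
    print(json.dumps(manifest, indent=2))
```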

User-assigned managed identity

To use a user-assigned managed identity for authentication, you must first deploy Azure IoT Operations with secure settings enabled. To learn more, see Enable secure settings in Azure IoT Operations Preview deployment.

Then, specify the user-assigned managed identity authentication method along with the client ID, tenant ID, and scope of the managed identity.

On the dataflow endpoint settings page in the operations experience, select the Basic tab, and then choose Authentication method > User assigned managed identity.

Enter the client ID and tenant ID of the user-assigned managed identity in the corresponding fields.

The scope is optional and defaults to https://storage.azure.com/.default. To override the default scope, set the scope in the Bicep file or Kubernetes manifest.
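The following Python sketch shows the authentication portion of a manifest for a user-assigned managed identity, including the scope only when it differs from the default https://storage.azure.com/.default. The field names (method, userAssignedManagedIdentitySettings, clientId, tenantId, scope) are assumptions modeled on the preview schema, the function name is hypothetical, and the IDs are placeholders.

```python
from typing import Optional

DEFAULT_SCOPE = "https://storage.azure.com/.default"


def user_assigned_auth(client_id: str, tenant_id: str,
                       scope: Optional[str] = None) -> dict:
    """Return the authentication block for a user-assigned managed identity.

    ASSUMPTION: field names mirror the preview DataflowEndpoint schema.
    """
    settings = {"clientId": client_id, "tenantId": tenant_id}
    # The scope is optional; include it only to override the default.
    if scope is not None and scope != DEFAULT_SCOPE:
        settings["scope"] = scope
    return {
        "method": "UserAssignedManagedIdentity",
        "userAssignedManagedIdentitySettings": settings,
    }


if __name__ == "__main__":
    print(user_assigned_auth("<CLIENT_ID>", "<TENANT_ID>"))
```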

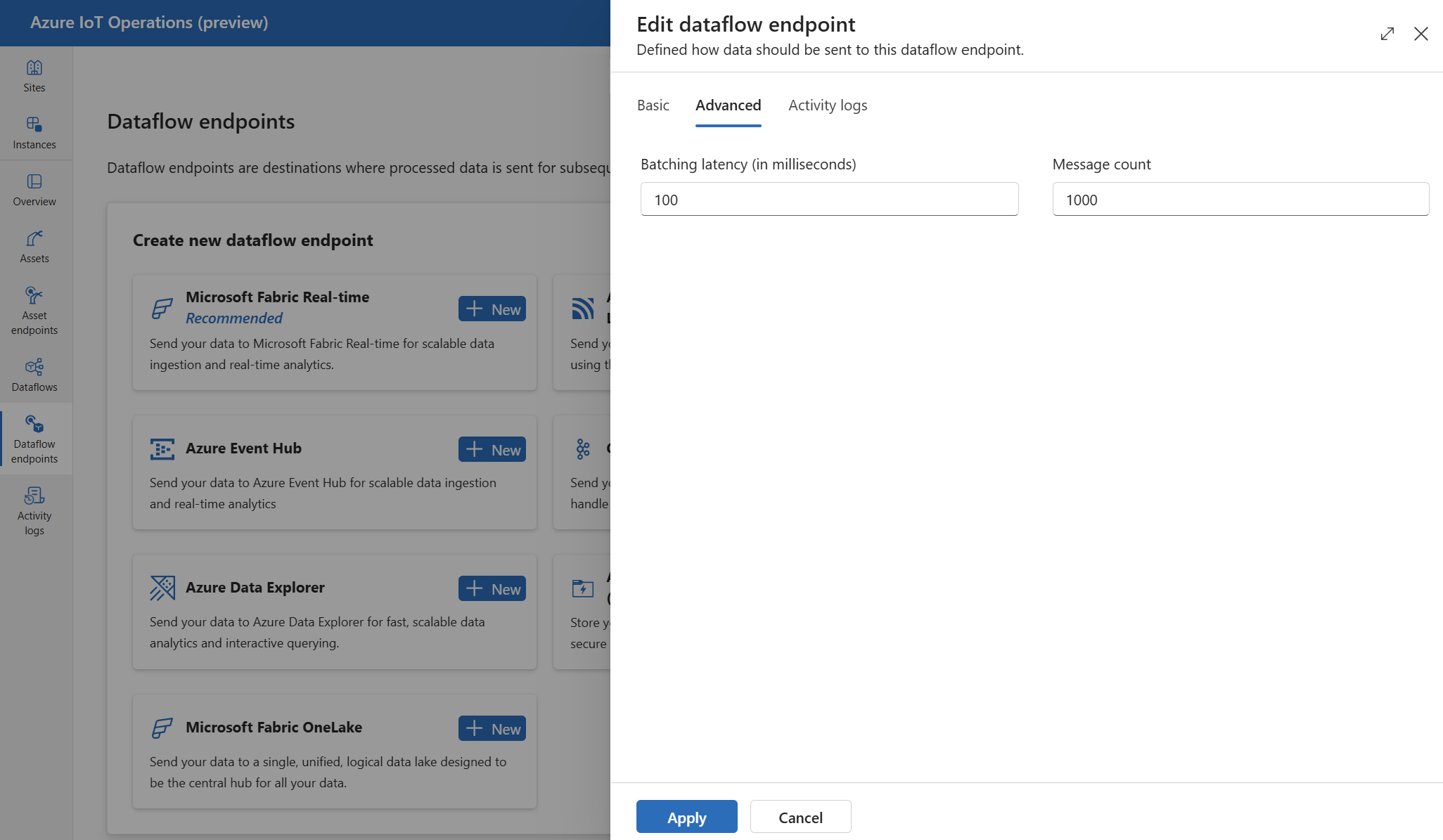

Advanced settings

You can set advanced settings for the Azure Data Lake Storage Gen2 endpoint, such as the batching latency and message count.

Use the batching settings to configure the maximum number of messages and the maximum latency before the messages are sent to the destination. This setting is useful when you want to optimize for network bandwidth and reduce the number of requests to the destination.

| Field | Description | Required |
|---|---|---|
| latencySeconds | The maximum number of seconds to wait before sending the messages to the destination. The default value is 60 seconds. | No |
| maxMessages | The maximum number of messages to send to the destination. The default value is 100000 messages. | No |
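To reason about how the two limits interact, the following Python sketch models a batch that is sent as soon as either limit is reached: maxMessages messages have accumulated, or the oldest pending message has waited latencySeconds. It's a simplified model for illustration, not the dataflow runtime's implementation. The Batcher class is hypothetical, and time is passed in explicitly so the behavior is easy to test.

```python
from dataclasses import dataclass, field
from typing import Any, List, Optional


@dataclass
class Batcher:
    """Simplified model of count- and latency-based batching (hypothetical)."""
    max_messages: int = 100_000      # mirrors the maxMessages default
    latency_seconds: float = 60.0    # mirrors the latencySeconds default
    _pending: List[Any] = field(default_factory=list, init=False)
    _oldest_at: Optional[float] = field(default=None, init=False)

    def add(self, message: Any, now: float) -> Optional[List[Any]]:
        """Queue a message at time `now`; return a full batch if the count limit is hit."""
        if not self._pending:
            self._oldest_at = now
        self._pending.append(message)
        if len(self._pending) >= self.max_messages:
            return self._flush()
        return None

    def poll(self, now: float) -> Optional[List[Any]]:
        """Return the pending batch if its oldest message has waited long enough."""
        if self._pending and now - self._oldest_at >= self.latency_seconds:
            return self._flush()
        return None

    def _flush(self) -> List[Any]:
        batch, self._pending, self._oldest_at = self._pending, [], None
        return batch


if __name__ == "__main__":
    b = Batcher(max_messages=3, latency_seconds=100)
    print(b.add("a", now=0), b.add("b", now=1), b.add("c", now=2))  # None None ['a', 'b', 'c']
    b.add("d", now=10)
    print(b.poll(now=50), b.poll(now=110))  # None ['d']
```

With a large maxMessages, the latency limit usually triggers first; with a small one, batches fill before the timer expires.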

For example, to set the maximum number of messages to 1000 and the maximum latency to 100 seconds, select the Advanced tab for the dataflow endpoint in the operations experience and enter those values.
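In a manifest, the same example would be expressed as batching fields on the endpoint. The following Python sketch builds that fragment for the values above. The latencySeconds and maxMessages names come from the table; the enclosing batching key and its placement under dataLakeStorageSettings are assumptions modeled on the preview schema, and the function name is hypothetical.

```python
import json


def batching_settings(latency_seconds: int = 60, max_messages: int = 100_000) -> dict:
    """Return a batching fragment; defaults match the documented defaults.

    ASSUMPTION: the fragment is placed under spec.dataLakeStorageSettings.
    """
    return {"batching": {"latencySeconds": latency_seconds, "maxMessages": max_messages}}


if __name__ == "__main__":
    # Send after at most 100 seconds or 1000 messages.
    print(json.dumps(batching_settings(latency_seconds=100, max_messages=1000)))
```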

Next steps

To learn more about dataflows, see Create a dataflow.