Artificial Intelligence overview

Which product offerings from Microsoft utilize AI?

Microsoft has a variety of AI offerings that can be broadly categorized into two groups: ready-to-use generative AI systems such as Copilot, and AI development platforms where you can build your own generative AI systems.

Azure AI serves as the common development platform, providing a foundation for responsible innovation with enterprise-grade privacy, security, and compliance.

For more information on Microsoft’s AI offerings, read the following product documentation:

How does the shared responsibility model apply to AI?

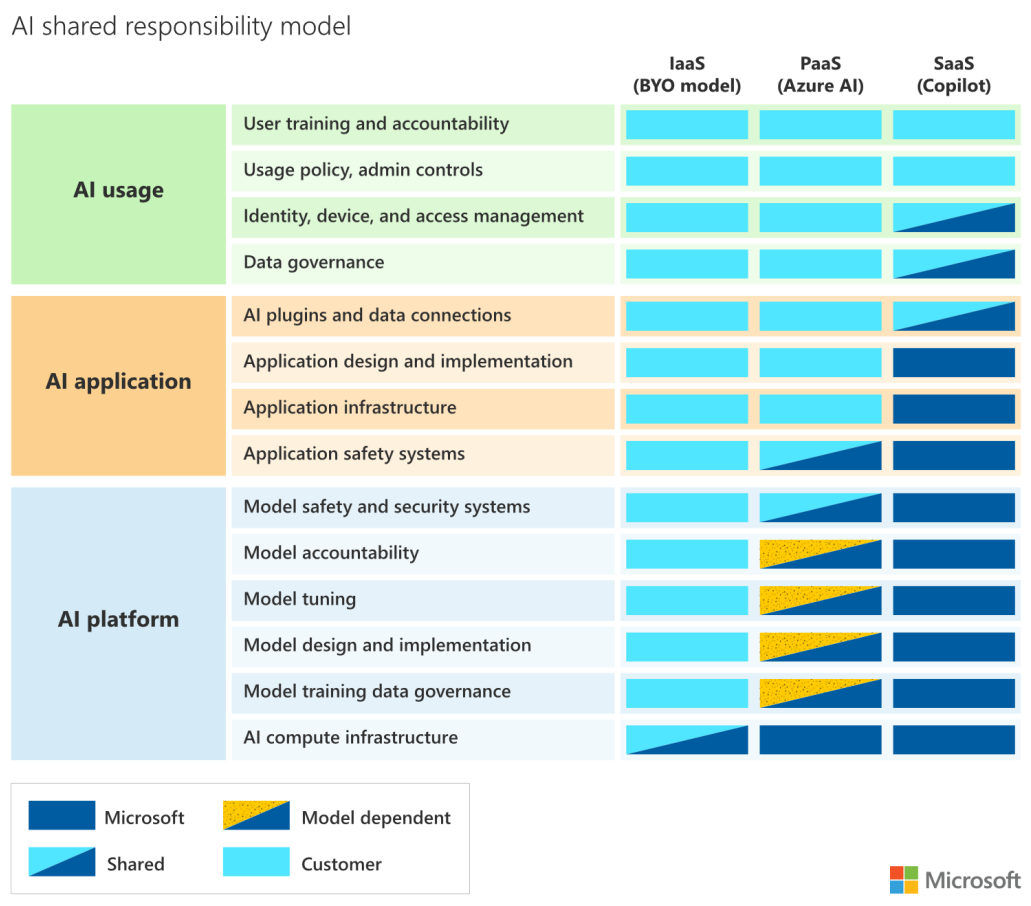

As with other cloud offerings, mitigation of AI risks is a shared responsibility between Microsoft and customers. Depending on the AI capabilities implemented within your organization, responsibilities shift between Microsoft and the customer.

The following diagram illustrates the areas of responsibility between Microsoft and our customers according to the type of deployment.

To learn more, read Artificial Intelligence (AI) shared responsibility model.

For information on how Microsoft supports customers in carrying out their portion of the shared responsibility model, please read How we support our customers in building AI responsibly.

How does Microsoft govern the development and management of AI systems?

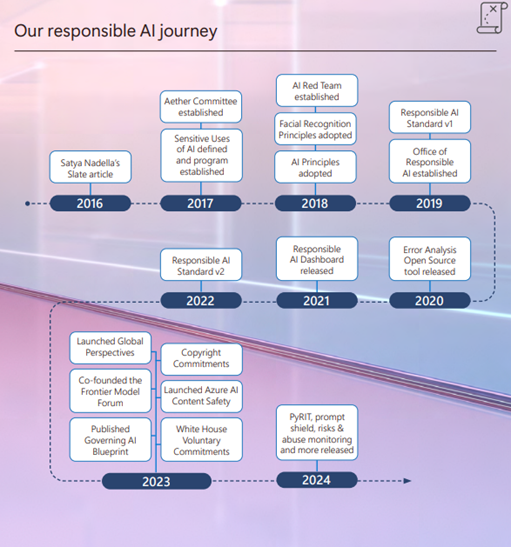

AI isn't new for Microsoft; we have been driving innovation in AI systems for years. Responsible AI has been at the core of our AI efforts. The initiative was kickstarted in 2016 by Satya Nadella's article in Slate, and it has led to where we are today in operationalizing responsible AI.

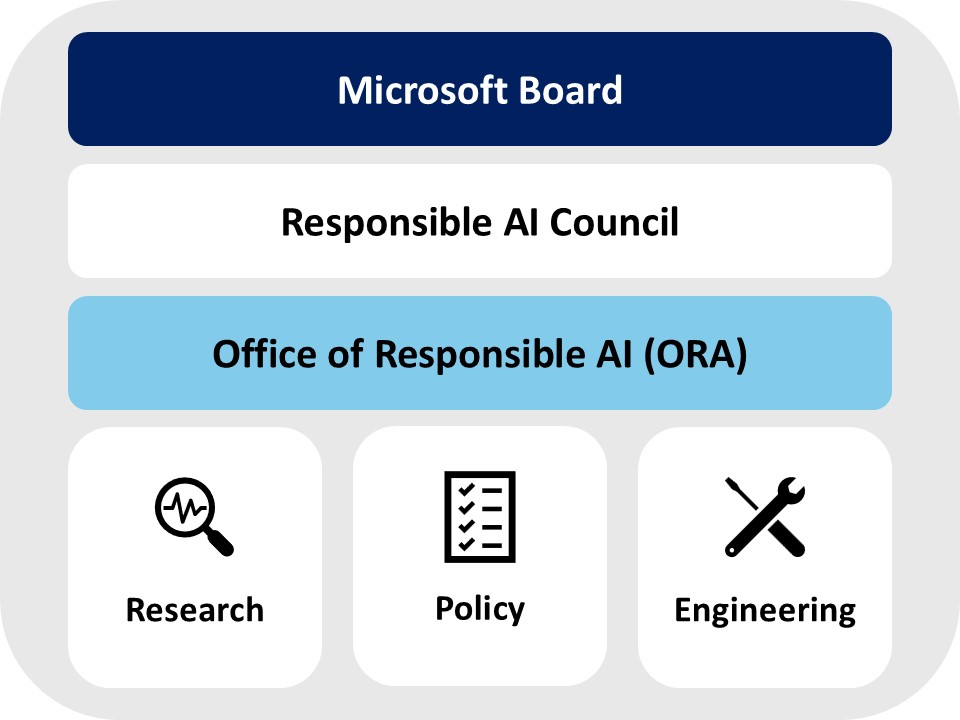

No one team or organization is solely responsible for driving the adoption of responsible AI practices. We combine a federated, bottom-up approach with strong top-down support and oversight by company leadership to fuel our policies, governance, and processes. Specialists in research, policy, and engineering combine their expertise and collaborate on cutting-edge practices to ensure we meet our own commitments while simultaneously supporting our customers and partners.

- Microsoft Board: Our management of responsible AI starts with CEO Satya Nadella and cascades across the senior leadership team and all of Microsoft. The Environmental, Social, and Public Policy Committee of the Board of Directors provides oversight and guidance on responsible AI policies and programs.

- Responsible AI Council: Co-led by Vice Chair and President Brad Smith and Chief Technology Officer Kevin Scott, the Responsible AI Council provides a forum for business leaders and representatives from research, policy, and engineering to regularly grapple with the biggest challenges surrounding AI and to drive progress in our responsible AI policies and processes.

- Office of Responsible AI (ORA): ORA is tasked with putting responsible AI principles into practice, which it accomplishes through five key functions: it sets company-wide internal policies, defines governance structures, provides resources to adopt AI practices, reviews sensitive use cases, and helps shape public policy around AI.

- Research: Researchers in the AI Ethics and Effects in Engineering and Research (Aether) Committee, Microsoft Research, and engineering teams keep the responsible AI program on the leading edge of issues through thought leadership.

- Policy: ORA collaborates with stakeholders and policy teams across the company to develop policies and practices to uphold our AI principles when building AI applications.

- Engineering: Engineering teams create AI platforms, applications, and tools. They provide feedback to ensure policies and practices are technically feasible, develop novel practices and new technologies, and scale responsible AI practices throughout the company.

What principles serve as the foundation for Microsoft's responsible AI (RAI) program?

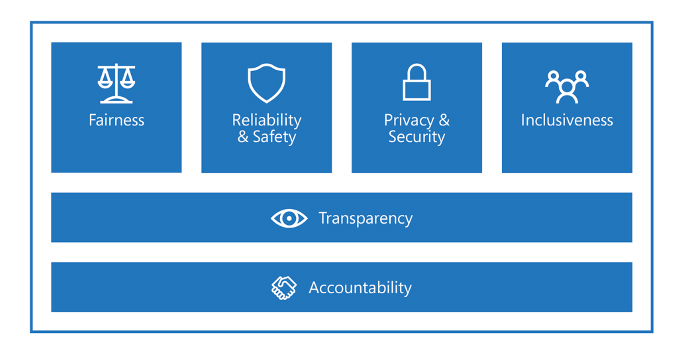

Microsoft developed six human-centered principles to help foster a culture of awareness, learning, and innovation on responsible AI topics: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. These six principles lay the foundation for Microsoft's RAI program and the policies ORA develops.

For more information on the Responsible AI principles, read Responsible AI.

How does Microsoft implement the policies defined by ORA?

The Responsible AI Standard (RAI Standard) is the result of a long-term effort to establish product development and risk management criteria for AI systems. It puts the RAI principles into action by defining goals, requirements, and practices for all AI systems developed by Microsoft.

- Goals: Define what it means to uphold our AI principles

- Requirements: Define how we must uphold our AI principles

- Practices: Provide the aids that help satisfy the requirements

The main driving force of the RAI Standard is the Impact Assessment, in which the development team must document outcomes for each goal's requirements. For the Privacy & Security and Inclusiveness goals, the RAI Standard requires compliance with Microsoft's existing privacy, security, and accessibility programs, along with any AI-specific guidance those programs provide.

The full RAI Standard can be found in the Microsoft Responsible AI Standard general requirements documentation.