Use LightGBM models with SynapseML in Microsoft Fabric

The LightGBM framework specializes in creating high-quality and GPU-enabled decision tree algorithms for ranking, classification, and many other machine learning tasks. In this article, you use LightGBM to build classification, regression, and ranking models.

LightGBM is an open-source, distributed, high-performance gradient boosting (GBDT, GBRT, GBM, or MART) framework. LightGBM is part of Microsoft's DMTK project. In SynapseML, you use LightGBM through three estimators: LightGBMClassifier, LightGBMRegressor, and LightGBMRanker. LightGBM on Spark can be incorporated into existing SparkML pipelines and used for batch, streaming, and serving workloads. It also offers a wide array of tunable parameters that you can use to customize your decision tree system, and it supports additional problem types such as quantile regression.
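To make the term "gradient boosting" concrete, here is a minimal sketch in plain Python. It assumes squared-error loss, a single feature, and one-split trees (stumps); the toy data and every function name are made up for illustration. LightGBM itself grows histogram-based, leaf-wise trees and is far more elaborate, so treat this only as an outline of the idea: each round fits a small tree to the current residuals and adds a damped copy of it to the ensemble.

```python
# Illustrative gradient boosting with decision stumps (not LightGBM's algorithm).

def fit_stump(xs, residuals):
    """Pick the threshold whose left/right means best fit the residuals."""
    best = None
    # Skip the largest value so the right side of the split is never empty.
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = sum((r - left_mean) ** 2 for r in left)
        error += sum((r - right_mean) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def fit_boosted(xs, ys, num_iterations=50, learning_rate=0.3):
    """Start from the mean, then repeatedly fit a stump to the residuals."""
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    stumps = []
    for _ in range(num_iterations):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
    return base, stumps, learning_rate


def predict_boosted(model, x):
    base, stumps, learning_rate = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


def training_mse(model, xs, ys):
    return sum((y - predict_boosted(model, x)) ** 2
               for x, y in zip(xs, ys)) / len(ys)


# Hypothetical toy data, roughly y = x with noise.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [1.2, 1.9, 3.1, 3.9, 5.2, 5.8]

for rounds in (0, 1, 50):
    model = fit_boosted(xs, ys, num_iterations=rounds)
    print(rounds, "rounds -> training MSE", training_mse(model, xs, ys))
```

The training error shrinks as rounds are added. The `learning_rate` and `num_iterations` arguments here play the same conceptual role as the `learningRate` and `numIterations` parameters used later in this article.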

Prerequisites

Get a Microsoft Fabric subscription. Or, sign up for a free Microsoft Fabric trial.

Sign in to Microsoft Fabric.

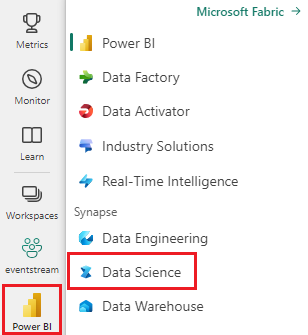

Use the experience switcher on the left side of your home page to switch to the Synapse Data Science experience.

- Go to the Data Science experience in Microsoft Fabric.

- Create a new notebook.

- Attach your notebook to a lakehouse. On the left side of your notebook, select Add to add an existing lakehouse or create a new one.

Use LightGBMClassifier to train a classification model

In this section, you use LightGBM to build a classification model for predicting bankruptcy.

Read the dataset.

    from pyspark.sql import SparkSession

    # Bootstrap Spark Session
    spark = SparkSession.builder.getOrCreate()

    from synapse.ml.core.platform import *

    df = (
        spark.read.format("csv")
        .option("header", True)
        .option("inferSchema", True)
        .load(
            "wasbs://publicwasb@mmlspark.blob.core.windows.net/company_bankruptcy_prediction_data.csv"
        )
    )

    # print dataset size
    print("records read: " + str(df.count()))
    print("Schema: ")
    df.printSchema()

    display(df)

Split the dataset into train and test sets.

    train, test = df.randomSplit([0.85, 0.15], seed=1)

Add a featurizer to convert features into vectors.

    from pyspark.ml.feature import VectorAssembler

    # Every column except the first (the label) is used as a feature
    feature_cols = df.columns[1:]
    featurizer = VectorAssembler(inputCols=feature_cols, outputCol="features")
    train_data = featurizer.transform(train)["Bankrupt?", "features"]
    test_data = featurizer.transform(test)["Bankrupt?", "features"]

Check if the data is unbalanced.

    display(train_data.groupBy("Bankrupt?").count())

Train the model using LightGBMClassifier.

    from synapse.ml.lightgbm import LightGBMClassifier

    model = LightGBMClassifier(
        objective="binary",
        featuresCol="features",
        labelCol="Bankrupt?",
        isUnbalance=True,
        dataTransferMode="bulk"
    )

    model = model.fit(train_data)

Visualize feature importance.

    import pandas as pd
    import matplotlib.pyplot as plt

    feature_importances = model.getFeatureImportances()
    fi = pd.Series(feature_importances, index=feature_cols)
    fi = fi.sort_values(ascending=True)
    f_index = fi.index
    f_values = fi.values

    # print feature importances
    print("f_index:", f_index)
    print("f_values:", f_values)

    # plot
    x_index = list(range(len(fi)))
    x_index = [x / len(fi) for x in x_index]
    plt.rcParams["figure.figsize"] = (20, 20)
    plt.barh(
        x_index, f_values, height=0.028, align="center", color="tan", tick_label=f_index
    )
    plt.xlabel("importances")
    plt.ylabel("features")
    plt.show()

Generate predictions with the model.

    predictions = model.transform(test_data)
    predictions.limit(10).toPandas()

    from synapse.ml.train import ComputeModelStatistics

    metrics = ComputeModelStatistics(
        evaluationMetric="classification",
        labelCol="Bankrupt?",
        scoredLabelsCol="prediction",
    ).transform(predictions)
    display(metrics)
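When you read the resulting metrics, keep the class imbalance in mind: on an unbalanced dataset, accuracy alone can look good even when the model misses most positive cases. The following plain-Python sketch illustrates the point with made-up labels (95 negatives, 5 positives). The helper name `classification_metrics` and the toy data are assumptions for illustration only; they are not part of SynapseML and do not reproduce the output of `ComputeModelStatistics`.

```python
def classification_metrics(labels, predictions):
    """Compute accuracy, precision, and recall for binary 0/1 labels."""
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        # Guard against division by zero when nothing is predicted positive.
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Hypothetical test set: 95 solvent companies (0) and 5 bankrupt ones (1).
labels = [0] * 95 + [1] * 5

# A useless model that always predicts "not bankrupt".
always_negative = [0] * 100

# A model that raises 6 false alarms but finds 4 of the 5 bankruptcies.
useful_model = [0] * 89 + [1] * 6 + [1] * 4 + [0] * 1

baseline = classification_metrics(labels, always_negative)
useful = classification_metrics(labels, useful_model)
print("always negative:", baseline)
print("useful model:   ", useful)
```

In this toy case, the always-negative model has the higher accuracy but a recall of zero, which is why precision and recall deserve attention alongside accuracy when the label distribution is skewed.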

Use LightGBMRegressor to train a quantile regression model

In this section, you use LightGBM to build a regression model for drug discovery.

Read the dataset.

    triazines = spark.read.format("libsvm").load(
        "wasbs://publicwasb@mmlspark.blob.core.windows.net/triazines.scale.svmlight"
    )

    # print some basic info
    print("records read: " + str(triazines.count()))
    print("Schema: ")
    triazines.printSchema()
    display(triazines.limit(10))

Split the dataset into train and test sets.

    train, test = triazines.randomSplit([0.85, 0.15], seed=1)

Train the model using LightGBMRegressor.

    from synapse.ml.lightgbm import LightGBMRegressor

    model = LightGBMRegressor(
        objective="quantile",
        alpha=0.2,
        learningRate=0.3,
        numLeaves=31,
        dataTransferMode="bulk"
    ).fit(train)

    print(model.getFeatureImportances())

Generate predictions with the model.

    scoredData = model.transform(test)
    display(scoredData)

    from synapse.ml.train import ComputeModelStatistics

    metrics = ComputeModelStatistics(
        evaluationMetric="regression",
        labelCol="label",
        scoresCol="prediction"
    ).transform(scoredData)
    display(metrics)
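Because the model above was trained with `objective="quantile"` and `alpha=0.2`, its predictions target the 20th percentile of the label rather than the mean, so they tend to sit below typical label values. Quantile regression is commonly defined through the pinball (quantile) loss; the plain-Python sketch below shows that loss and checks, on made-up numbers, which constant prediction minimizes it. The function names and the data are illustrative assumptions, not SynapseML APIs.

```python
def pinball_loss(y_true, y_pred, alpha):
    """Quantile loss: under-predictions cost alpha, over-predictions cost 1 - alpha."""
    diff = y_true - y_pred
    return alpha * diff if diff >= 0 else (alpha - 1) * diff


def mean_pinball_loss(values, prediction, alpha):
    return sum(pinball_loss(v, prediction, alpha) for v in values) / len(values)


def best_constant(values, alpha):
    """Return the observed value that minimizes the mean pinball loss."""
    return min(values, key=lambda c: mean_pinball_loss(values, c, alpha))


# Hypothetical labels: the integers 0 through 10.
values = list(range(11))

low_quantile = best_constant(values, alpha=0.2)
median = best_constant(values, alpha=0.5)
print("best constant for alpha=0.2:", low_quantile)
print("best constant for alpha=0.5:", median)
```

With `alpha=0.2`, over-predicting is penalized four times as heavily as under-predicting, which pulls the best constant down toward the lower end of the data. With `alpha=0.5`, the loss is symmetric and the minimizer is the median.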

Use LightGBMRanker to train a ranking model

In this section, you use LightGBM to build a ranking model.

Read the dataset.

    df = spark.read.format("parquet").load(
        "wasbs://publicwasb@mmlspark.blob.core.windows.net/lightGBMRanker_train.parquet"
    )

    # print some basic info
    print("records read: " + str(df.count()))
    print("Schema: ")
    df.printSchema()
    display(df.limit(10))

Train the ranking model using LightGBMRanker.

    from synapse.ml.lightgbm import LightGBMRanker

    features_col = "features"
    query_col = "query"
    label_col = "labels"

    lgbm_ranker = LightGBMRanker(
        labelCol=label_col,
        featuresCol=features_col,
        groupCol=query_col,
        predictionCol="preds",
        leafPredictionCol="leafPreds",
        featuresShapCol="importances",
        repartitionByGroupingColumn=True,
        numLeaves=32,
        numIterations=200,
        evalAt=[1, 3, 5],
        metric="ndcg",
        dataTransferMode="bulk"
    )

    lgbm_ranker_model = lgbm_ranker.fit(df)

Generate predictions with the model.

    dt = spark.read.format("parquet").load(
        "wasbs://publicwasb@mmlspark.blob.core.windows.net/lightGBMRanker_test.parquet"
    )

    predictions = lgbm_ranker_model.transform(dt)
    predictions.limit(10).toPandas()
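The ranker above is configured with `metric="ndcg"` and `evalAt=[1, 3, 5]`, meaning ranking quality is measured by normalized discounted cumulative gain over the top 1, 3, and 5 results of each query. The plain-Python sketch below shows one common formulation of NDCG@k for a single query, assuming an exponential gain of `2**label - 1` and a `log2` position discount. The function names, the toy labels and scores, and the choice to return 0.0 when a query has no relevant items are assumptions of this sketch, not a reproduction of LightGBM's internal implementation.

```python
import math


def dcg_at_k(ranked_labels, k):
    """Discounted cumulative gain over the first k items of a ranked list."""
    return sum(
        (2 ** label - 1) / math.log2(position + 2)
        for position, label in enumerate(ranked_labels[:k])
    )


def ndcg_at_k(labels, scores, k):
    """NDCG@k for one query: DCG of the predicted order over DCG of the ideal order."""
    # Order the true labels by descending predicted score.
    ranked = [
        label
        for _, label in sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    ]
    ideal_dcg = dcg_at_k(sorted(labels, reverse=True), k)
    # Sketch-level choice: a query with no relevant items scores 0.0.
    return dcg_at_k(ranked, k) / ideal_dcg if ideal_dcg > 0 else 0.0


# Hypothetical query with four documents and graded relevance labels.
labels = [2, 0, 1, 0]
poor_scores = [0.1, 0.9, 0.8, 0.3]      # the most relevant document is ranked last
perfect_scores = [4.0, 1.0, 3.0, 2.0]   # ordering matches the labels

for k in (1, 3, 5):
    print(
        f"NDCG@{k}: poor={ndcg_at_k(labels, poor_scores, k):.4f}",
        f"perfect={ndcg_at_k(labels, perfect_scores, k):.4f}",
    )
```

In a dataset with many queries, the per-query values are typically averaged, which is why the ranker needs a `groupCol` that identifies which rows belong to the same query.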