OpenAIMockResponsePlugin

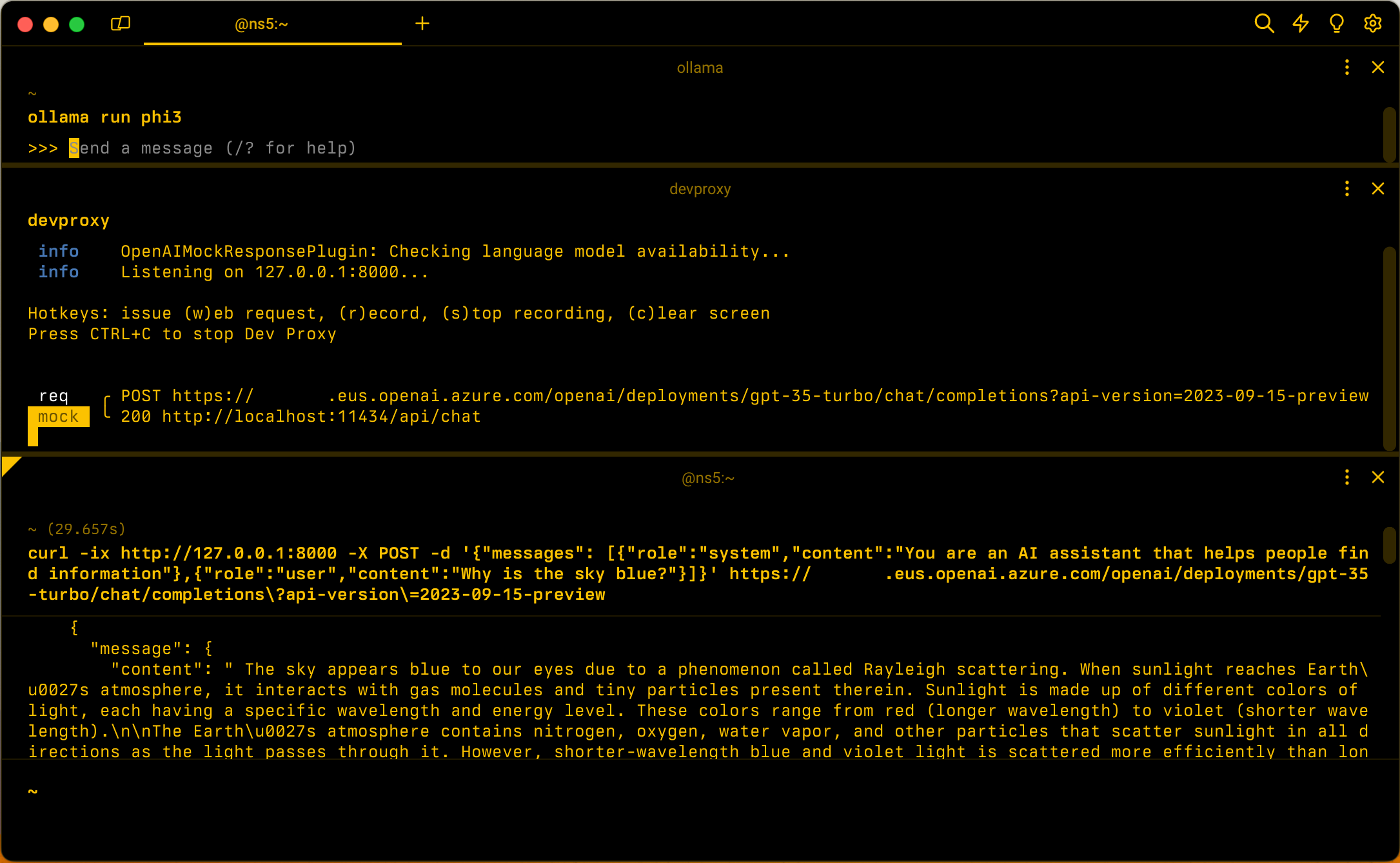

Simulates responses from Azure OpenAI and OpenAI using a local language model.

Plugin instance definition

{
  "name": "OpenAIMockResponsePlugin",
  "enabled": true,
  "pluginPath": "~appFolder/plugins/dev-proxy-plugins.dll"
}

Configuration example

None

Configuration properties

None

Command line options

None

Remarks

The OpenAIMockResponsePlugin plugin simulates responses from Azure OpenAI and OpenAI using a local language model. With this plugin, you can emulate a language model in your app without connecting to the OpenAI or Azure OpenAI service and without incurring usage costs.

The plugin uses the Dev Proxy language model configuration to communicate with a local language model. To use this plugin, configure Dev Proxy to use a local language model.
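As a minimal sketch, a devproxyrc.json that loads the plugin and enables a local language model might look like the following. It assumes a local Ollama instance listening on its default port (11434). The "model" and "url" values and both "urlsToWatch" patterns are assumptions, not required settings: replace them with the model you pulled locally and the endpoints your app actually calls, and check the Dev Proxy language model documentation for the settings it supports.

```json
{
  "plugins": [
    {
      "name": "OpenAIMockResponsePlugin",
      "enabled": true,
      "pluginPath": "~appFolder/plugins/dev-proxy-plugins.dll"
    }
  ],
  "urlsToWatch": [
    "https://api.openai.com/*",
    "https://*.openai.azure.com/*"
  ],
  "languageModel": {
    "enabled": true,
    "model": "llama3.2",
    "url": "http://localhost:11434"
  }
}
```

The plugin instance is the same definition shown earlier; "urlsToWatch" determines which requests Dev Proxy intercepts, and "languageModel" points Dev Proxy at the local model that generates the simulated responses.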

Important

The accuracy of the responses generated by the plugin depends on the local language model that you use. Before deploying your app to production, be sure to test it with the language model that you plan to use in production.

The OpenAIMockResponsePlugin plugin simulates the completions and chat completions APIs. The plugin doesn't support other OpenAI API endpoints.
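For illustration, here's the kind of request the plugin is meant to answer: a chat completions request body that an app might POST through Dev Proxy to https://api.openai.com/v1/chat/completions (for Azure OpenAI, the equivalent path is https://<resource>.openai.azure.com/openai/deployments/<deployment>/chat/completions?api-version=<version>). The model name and messages are placeholders. Based on the remarks above, the reply comes from the local language model configured in Dev Proxy, not from the model named in the request.

```json
{
  "model": "gpt-4o-mini",
  "messages": [
    { "role": "system", "content": "You are a helpful assistant." },
    { "role": "user", "content": "Summarize what Dev Proxy does in one sentence." }
  ]
}
```

A completions request differs mainly in sending a single "prompt" string to /v1/completions instead of a "messages" array. Requests to any other OpenAI endpoint aren't simulated by this plugin.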