Exercise - Deploy cross-platform DeepStream images to NVIDIA embedded devices with Azure IoT Edge

You've published a containerized DeepStream Graph Composer workload to your container registry and provisioned your NVIDIA Jetson embedded device with the IoT Edge runtime. Now, you're ready to create a deployment specification in your hub to run the workload as an IoT Edge module.

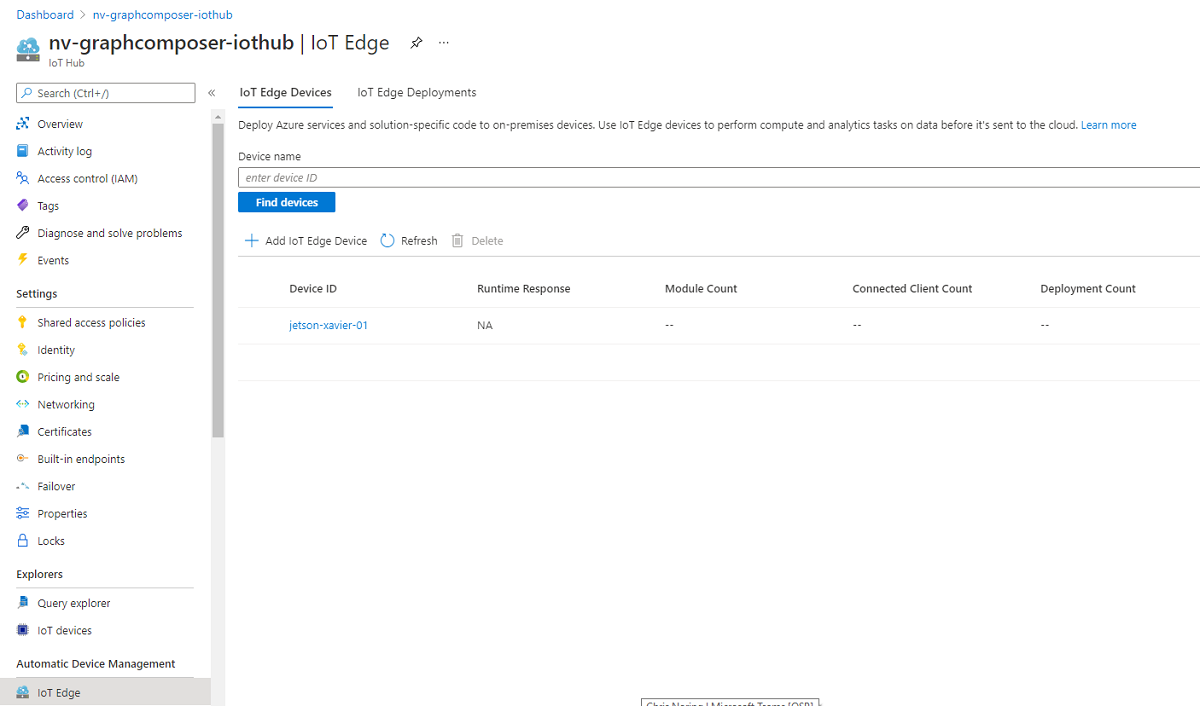

In the Azure portal, go to the IoT hub that you created at the beginning of this module. In the left menu, under Automatic Device Management, select IoT Edge. Look for your registered device.

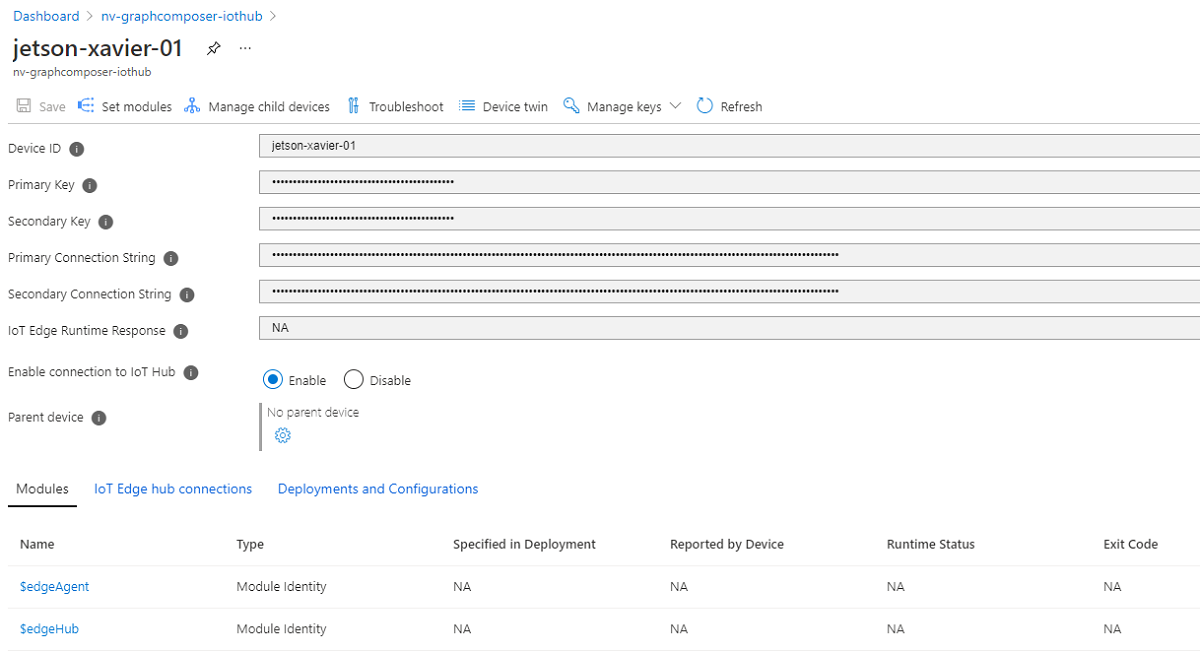

To see details about the current configuration, select the name of the device:

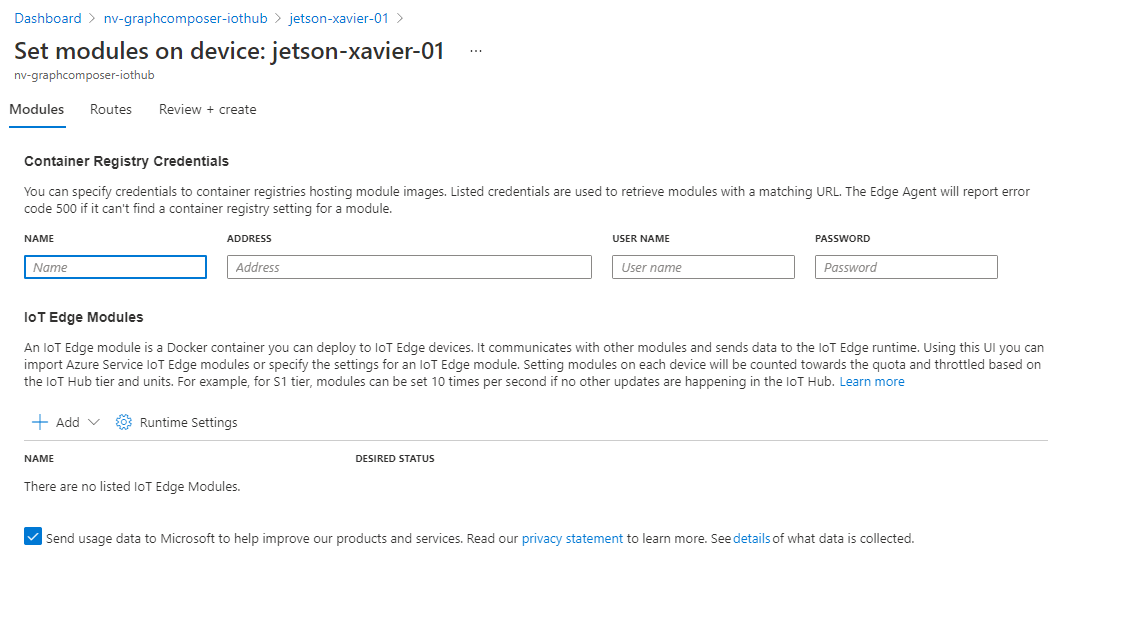

Select the Set modules tab to open the modules editor:

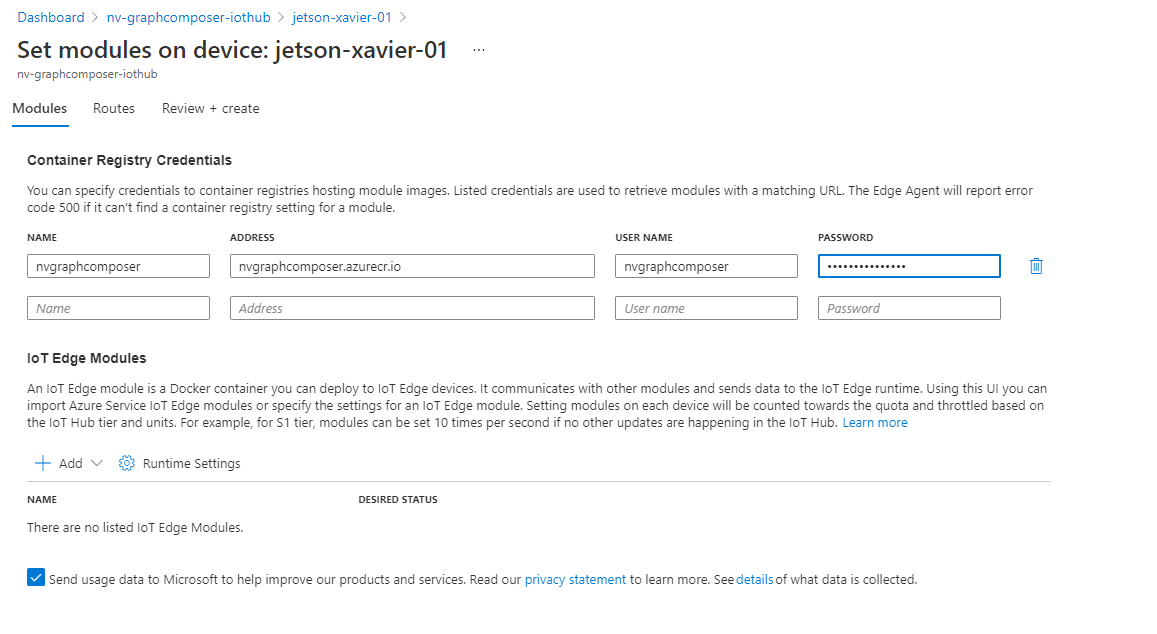

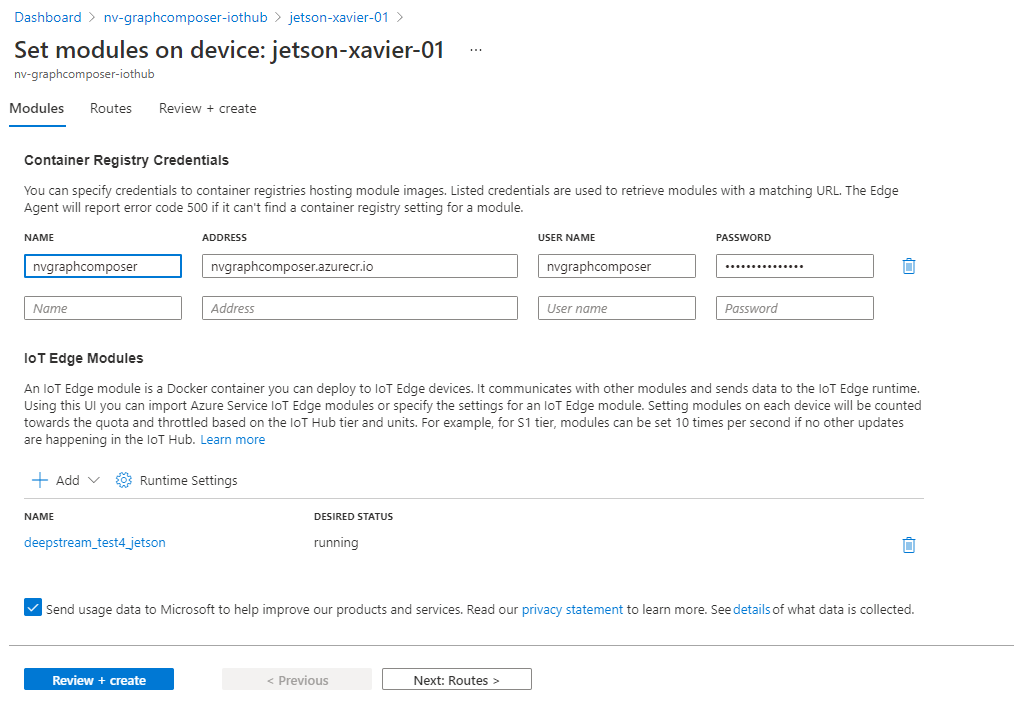

You must supply Container Registry credentials so that your NVIDIA embedded device can pull container workloads from your container registry.

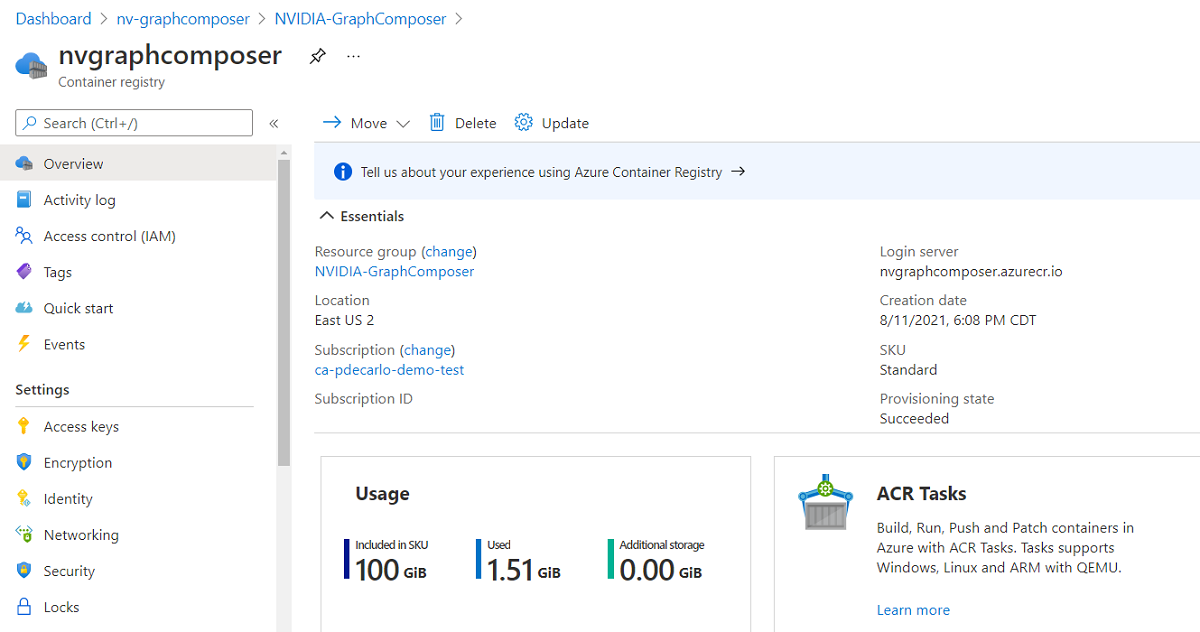

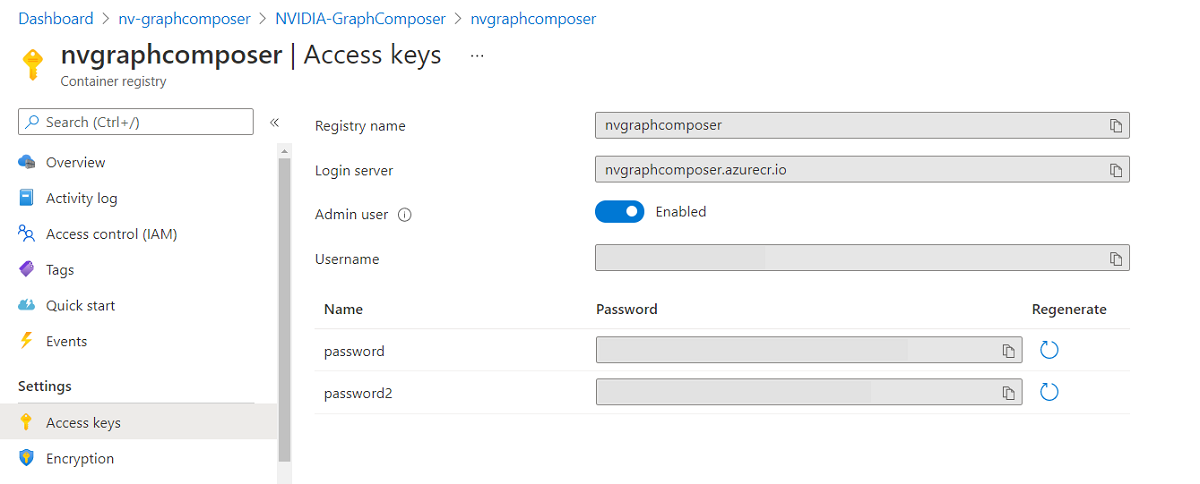

In a separate browser window, go to your container registry in the Azure portal.

In the left menu, under Settings, select Access keys. In Access keys, note the values for Login server, Username, and Password. You'll use these values in the next step.

Return to the browser window that's open to Set modules. In Container Registry Credentials, enter the values from the container registry Access keys. Using these credentials, any device that applies this module specification can securely pull container workloads from your container registry in Azure.

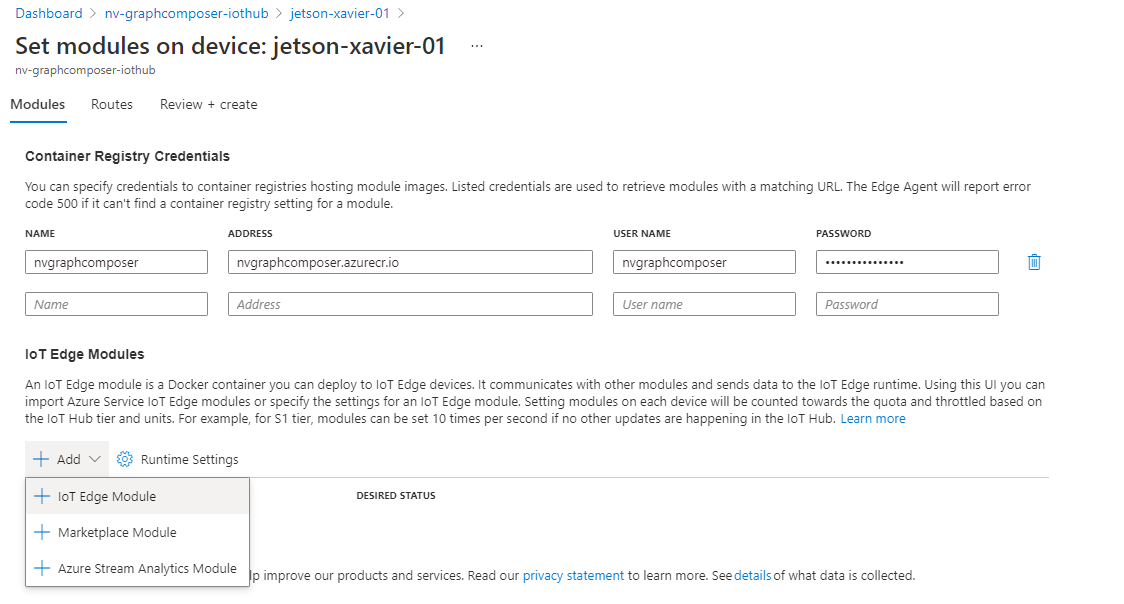

Next, you'll configure a custom IoT Edge module as part of your deployment specification. In the IoT Edge Modules section of the Modules pane, select Add > IoT Edge Module:

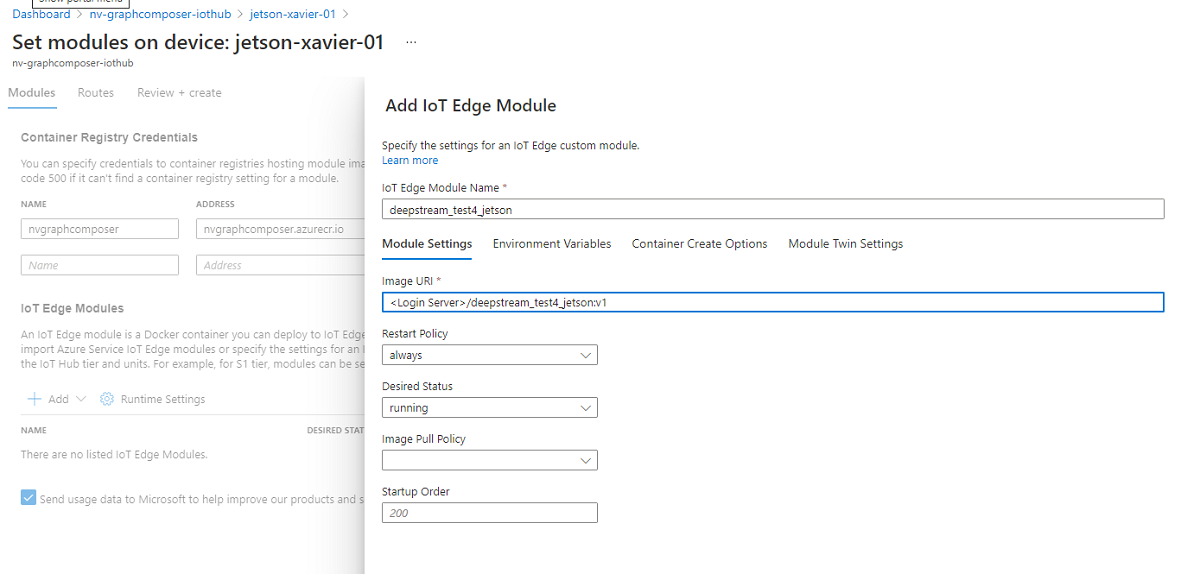

In Add IoT Edge Module, in IoT Edge Module Name, enter the module name deepstream_test4_jetson. In Image URI, enter <Login Server>/deepstream_test4_jetson:v1. For <Login Server>, use the URL of your container registry.

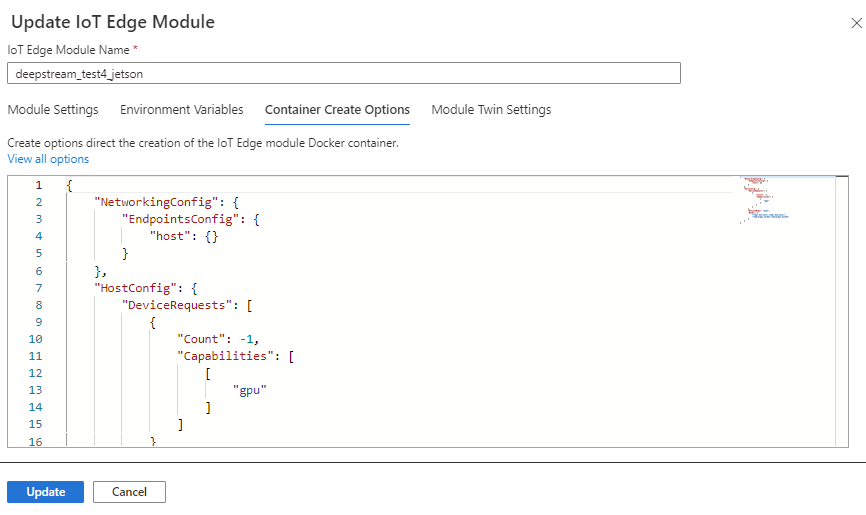
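The Image URI is the registry login server, followed by the repository name and tag. The following is a minimal Python sketch of that composition, assuming the login server value is copied from the Access keys page. The function name `build_image_uri` and the registry name `myregistry.azurecr.io` are hypothetical and used only for illustration.

```python
def build_image_uri(login_server: str, repository: str, tag: str) -> str:
    """Compose '<login server>/<repository>:<tag>' for the Image URI field."""
    host = login_server.strip()
    # Drop a URL scheme if the value was pasted as a full URL.
    for scheme in ("https://", "http://"):
        if host.lower().startswith(scheme):
            host = host[len(scheme):]
    host = host.rstrip("/")
    if not host or " " in host:
        raise ValueError(f"not a usable login server: {login_server!r}")
    return f"{host}/{repository}:{tag}"


# 'myregistry.azurecr.io' is a hypothetical login server.
print(build_image_uri("myregistry.azurecr.io", "deepstream_test4_jetson", "v1"))
```

The login server value already contains the full registry host name, so nothing else needs to be appended to it.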

Next, select the Container Create Options tab. To enable GPU acceleration and to give the container access to the X11 socket so that it can render video output, add the following:

    {
      "NetworkingConfig": {
        "EndpointsConfig": {
          "host": {}
        }
      },
      "HostConfig": {
        "DeviceRequests": [
          {
            "Count": -1,
            "Capabilities": [
              [
                "gpu"
              ]
            ]
          }
        ],
        "NetworkMode": "host",
        "Binds": [
          "/tmp/.X11-unix/:/tmp/.X11-unix/",
          "/tmp/argus_socket:/tmp/argus_socket"
        ]
      }
    }

When you're finished, select Update.

You return to the Set modules on device page. Select Review + create:

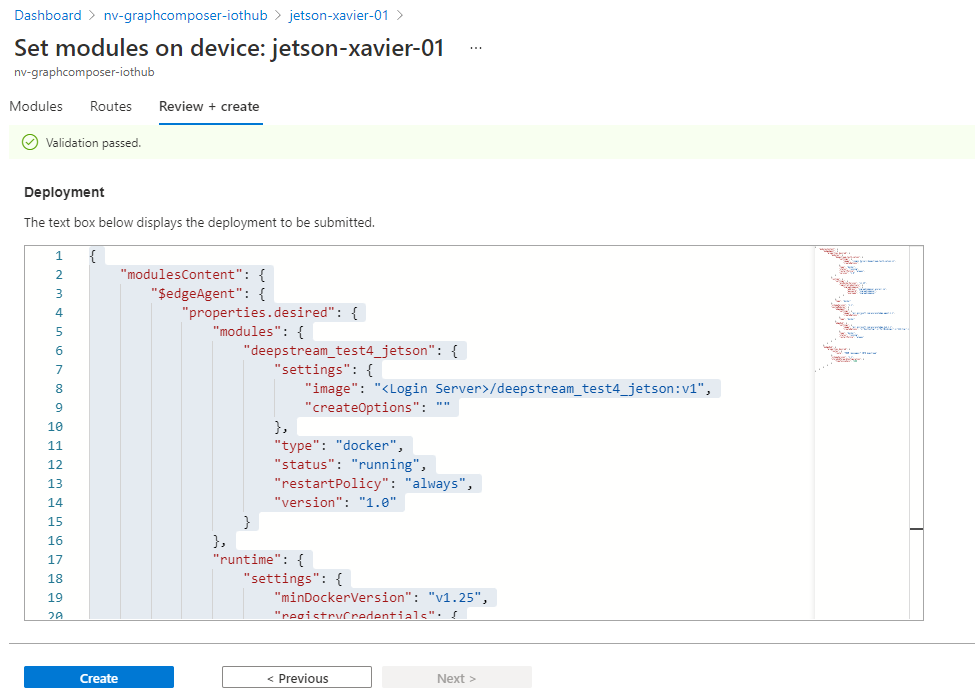

The Deployment text box displays the deployment specification you're about to submit to your device. Verify that the contents look similar to this example:

    {
      "modulesContent": {
        "$edgeAgent": {
          "properties.desired": {
            "modules": {
              "deepstream_test4_jetson": {
                "settings": {
                  "image": "<Login Server>.azurecr.io/deepstream_test4_jetson:v1",
                  "createOptions": "{\"NetworkingConfig\":{\"EndpointsConfig\":{\"host\":{}}},\"HostConfig\":{\"DeviceRequests\":[{\"Count\":-1,\"Capabilities\":[[\"gpu\"]]}],\"NetworkMode\":\"host\",\"Binds\":[\"/tmp/.X11-unix/:/tmp/.X11-unix/\",\"/tmp/argus_socket:/tmp/argus_socket\"]}}"
                },
                "type": "docker",
                "version": "1.0",
                "env": {
                  "DISPLAY": {
                    "value": ":0"
                  }
                },
                "status": "running",
                "restartPolicy": "always"
              }
            },
            "runtime": {
              "settings": {
                "minDockerVersion": "v1.25",
                "registryCredentials": {
                  "<Your Registry Name>": {
                    "address": "<Login Server>.azurecr.io",
                    "password": "<Your Password>",
                    "username": "<Your Username>"
                  }
                }
              },
              "type": "docker"
            },
            "schemaVersion": "1.1",
            "systemModules": {
              "edgeAgent": {
                "settings": {
                  "image": "mcr.microsoft.com/azureiotedge-agent:1.1",
                  "createOptions": ""
                },
                "type": "docker"
              },
              "edgeHub": {
                "settings": {
                  "image": "mcr.microsoft.com/azureiotedge-hub:1.1",
                  "createOptions": "{\"HostConfig\":{\"PortBindings\":{\"5671/tcp\":[{\"HostPort\":\"5671\"}],\"8883/tcp\":[{\"HostPort\":\"8883\"}],\"443/tcp\":[{\"HostPort\":\"443\"}]}}}"
                },
                "type": "docker",
                "status": "running",
                "restartPolicy": "always"
              }
            }
          }
        },
        "$edgeHub": {
          "properties.desired": {
            "routes": {
              "route": "FROM /messages/* INTO $upstream"
            },
            "schemaVersion": "1.1",
            "storeAndForwardConfiguration": {
              "timeToLiveSecs": 7200
            }
          }
        },
        "deepstream_test4_jetson": {
          "properties.desired": {}
        }
      }
    }

Verify that the deployment configuration is correct, and then select Create to start the deployment process.
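In the deployment specification, the container create options appear as an escaped JSON string inside `createOptions`, not as a nested object. The following Python sketch shows one way to produce that string from the create options entered earlier and to check that a module in a manifest requests GPU access. The function names are hypothetical, and the manifest is trimmed to the fields the check reads. It is an illustration of the structure, not part of the IoT Edge tooling.

```python
import json

# Container create options, as entered on the Container Create Options tab.
create_options = {
    "NetworkingConfig": {"EndpointsConfig": {"host": {}}},
    "HostConfig": {
        "DeviceRequests": [{"Count": -1, "Capabilities": [["gpu"]]}],
        "NetworkMode": "host",
        "Binds": [
            "/tmp/.X11-unix/:/tmp/.X11-unix/",
            "/tmp/argus_socket:/tmp/argus_socket",
        ],
    },
}


def to_create_options_string(options: dict) -> str:
    """Serialize create options compactly, the form stored in the manifest."""
    return json.dumps(options, separators=(",", ":"))


def module_requests_gpu(manifest: dict, module_name: str) -> bool:
    """Return True if the module's createOptions include a 'gpu' capability."""
    modules = manifest["modulesContent"]["$edgeAgent"]["properties.desired"]["modules"]
    raw = modules[module_name]["settings"]["createOptions"]
    host_config = json.loads(raw).get("HostConfig", {})
    return any(
        "gpu" in capabilities
        for request in host_config.get("DeviceRequests", [])
        for capabilities in request.get("Capabilities", [])
    )


# A trimmed manifest holding only the fields that the check above reads.
manifest = {
    "modulesContent": {
        "$edgeAgent": {
            "properties.desired": {
                "modules": {
                    "deepstream_test4_jetson": {
                        "settings": {
                            "image": "<Login Server>/deepstream_test4_jetson:v1",
                            "createOptions": to_create_options_string(create_options),
                        }
                    }
                }
            }
        }
    }
}

print(module_requests_gpu(manifest, "deepstream_test4_jetson"))
```

Because `createOptions` is a string, a manifest printed with `json.dumps` shows the inner quotation marks escaped with backslashes, which is why the value looks different from the object entered in the portal.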

To verify that the deployment was successful, run the following command in a terminal on the NVIDIA embedded device:

sudo iotedge listVerify that the output shows a status of

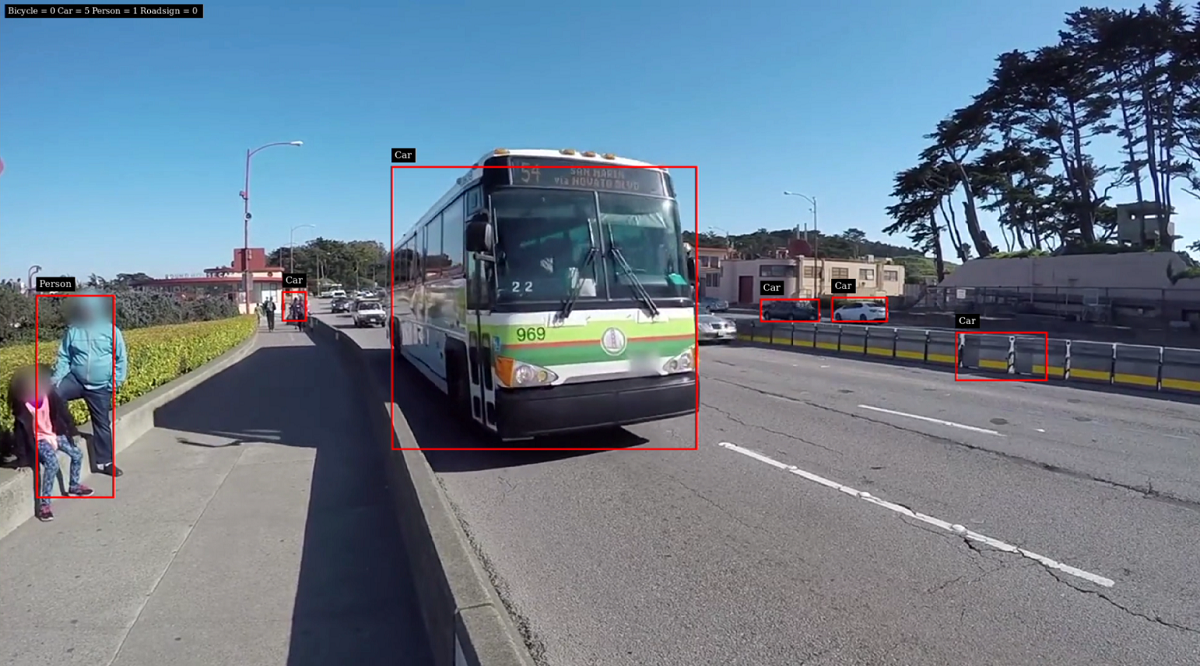

runningfor theedgeAgent,edgeHub, anddeepstream_test4_jetsonmodules.If your device is connected to a display, you should be able to see the visualized output of the DeepStream Graph Composer application, like in this example:

Monitor the output of the deepstream_test4_jetson module by running the following command in a terminal on the NVIDIA Jetson embedded device:

    sudo docker logs -f deepstream_test4_jetson

Every few seconds, your device sends telemetry to its registered hub in Azure IoT Hub. A message that looks like the following example appears:

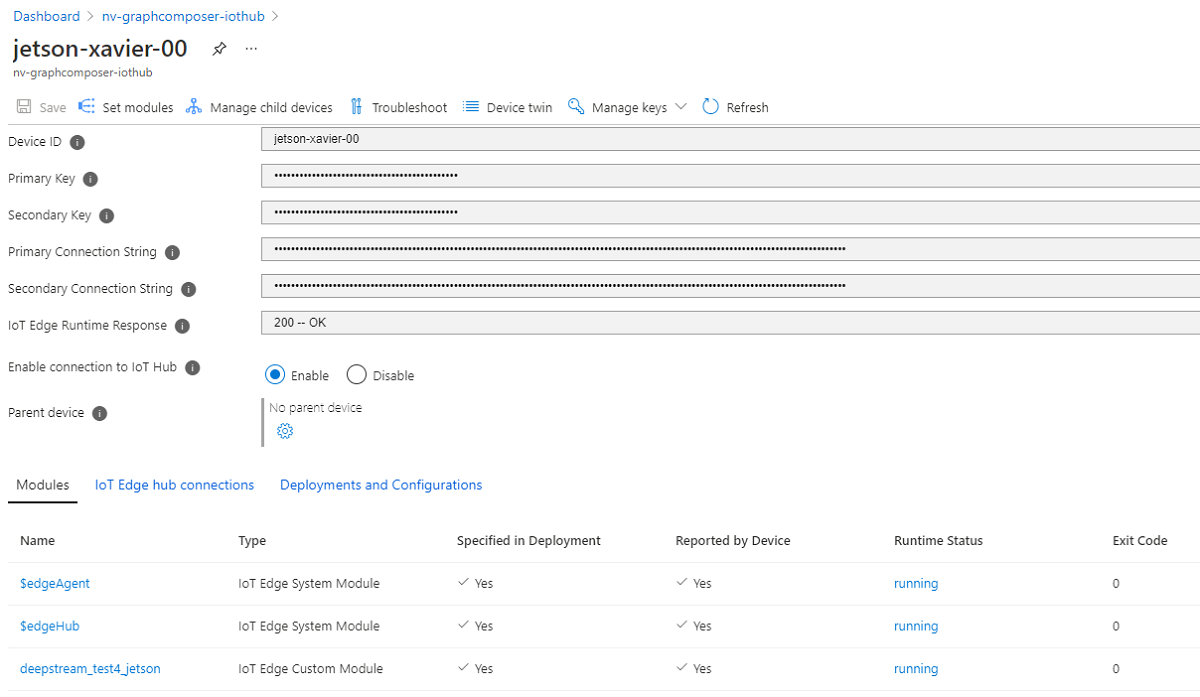

    Message sent : { "version" : "4.0", "id" : 1440, "@timestamp" : "2021-09-21T03:08:51.161Z", "sensorId" : "sensor-0", "objects" : [ "-1|570|478.37|609|507.717|Vehicle|#|sedan|Bugatti|M|blue|XX1234|CA|-0.1" ] }

You can confirm the status of the running modules in the Azure portal by returning to the device overview for your IoT Edge device. You should see the following modules and associated statuses listed for your device:

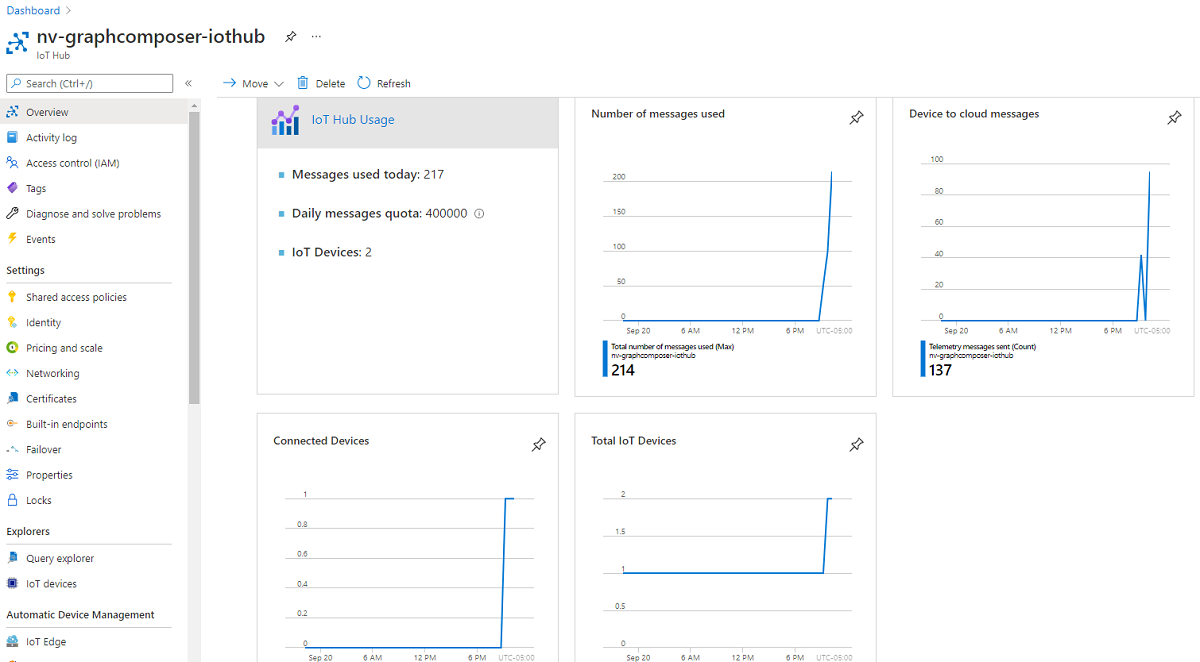
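The same status check can be scripted on the device by capturing the output of `sudo iotedge list`. The following Python sketch assumes the command prints a header row followed by one row per module, with columns separated by two or more spaces. The sample output, including the descriptions and uptimes, is hypothetical, and the function names are illustrative only.

```python
import re

# Hypothetical captured output of `sudo iotedge list`.
SAMPLE_OUTPUT = """\
NAME                     STATUS           DESCRIPTION      CONFIG
deepstream_test4_jetson  running          Up 5 minutes     <Login Server>/deepstream_test4_jetson:v1
edgeAgent                running          Up 6 minutes     mcr.microsoft.com/azureiotedge-agent:1.1
edgeHub                  running          Up 5 minutes     mcr.microsoft.com/azureiotedge-hub:1.1
"""


def module_statuses(listing: str) -> dict:
    """Map each module name to its status column."""
    statuses = {}
    for line in listing.splitlines():
        columns = re.split(r"\s{2,}", line.strip())
        # Skip blank lines, malformed rows, and the header row.
        if len(columns) < 2 or columns[0] == "NAME":
            continue
        statuses[columns[0]] = columns[1]
    return statuses


def modules_not_running(statuses: dict, expected: list) -> list:
    """Return the expected modules that are missing or not 'running'."""
    return [name for name in expected if statuses.get(name) != "running"]


expected_modules = ["edgeAgent", "edgeHub", "deepstream_test4_jetson"]
print(modules_not_running(module_statuses(SAMPLE_OUTPUT), expected_modules))
```

An empty list means that every expected module reports a status of running.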

You can also confirm in your IoT Hub overview pane that messages are arriving in your hub from your device. You should notice an increase in messages.
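To inspect an individual telemetry message, you can parse the `Message sent` line from the module log. The following Python sketch splits the sample message shown earlier into its JSON payload and the pipe-delimited object entry. The key names `tracking_id`, `box`, `label`, and `attributes` are assumptions inferred from the sample values, not names taken from DeepStream documentation.

```python
import json

# The sample log line shown earlier in this exercise.
SAMPLE_LOG_LINE = (
    'Message sent : { "version" : "4.0", "id" : 1440, '
    '"@timestamp" : "2021-09-21T03:08:51.161Z", "sensorId" : "sensor-0", '
    '"objects" : [ "-1|570|478.37|609|507.717|Vehicle|#|sedan|Bugatti|M|blue|XX1234|CA|-0.1" ] }'
)


def parse_message_line(line: str) -> dict:
    """Strip the 'Message sent :' prefix and decode the JSON payload."""
    prefix, _, payload = line.partition(":")
    if prefix.strip() != "Message sent":
        raise ValueError("not a 'Message sent' log line")
    return json.loads(payload)


def split_object(entry: str) -> dict:
    """Split one pipe-delimited object entry (key names are assumed)."""
    head, _, tail = entry.partition("|#|")
    fields = head.split("|")
    return {
        "tracking_id": int(fields[0]),
        "box": tuple(float(value) for value in fields[1:5]),
        "label": fields[5],
        # Everything after the '#' marker is kept as raw strings.
        "attributes": tail.split("|") if tail else [],
    }


message = parse_message_line(SAMPLE_LOG_LINE)
detected = split_object(message["objects"][0])
print(message["sensorId"], detected["label"], detected["box"])
```

Keeping the trailing attributes as raw strings avoids guessing at the meaning of fields that the sample alone doesn't make clear.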

Congratulations! You have successfully developed a production-grade edge deployment of a DeepStream Graph Composer workload and deployed it to a real device by using Azure IoT Edge!

Try this

Using strategies described in this module, how might you modify an existing DeepStream reference graph to support a wildlife conservation solution that counts unique instances of endangered species by using live camera feeds? Which components would you need to modify to support this solution? Would you need to make any modifications to the overall deployment strategy?