Test a data migration and validate output

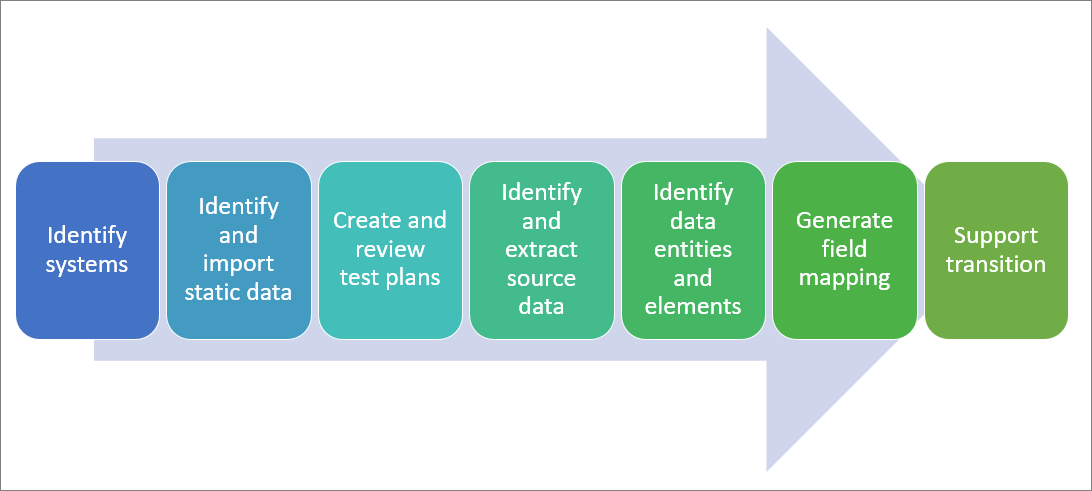

To prepare for data migration, the first step is to identify relevant (legacy) systems that the customer is currently using. Then, you need to identify and import static data that is common between industries.

Identify relevant (legacy) systems

A legacy system is a business solution that typically shares a few characteristics: it is based on outdated technology or design, it is incompatible with current systems or difficult and costly to integrate with them, and it might no longer be available for purchase because the vendor has discontinued it.

Each legacy system might have a different data export format option that must be considered as part of the planning for data migration, and you should be prepared for data extraction from legacy data sources.

Identify and import static data that is common between industries

Your customer might have different verticals, such as distribution and trade, manufacturing, and retail. Even if you manage a data migration that is focused on only one industry, such as manufacturing, you still need to investigate whether the customer's own customers and suppliers are in a different industry. For example, resellers are retailers that exchange data with your customer.

When you plan data migration, you need to identify common data that does not change frequently, such as country codes, zip or postal codes, states, or regions.

Identifying the static data that is common across verticals or industries helps reduce cost. It also lets you prepare the source data, clean it, and get customer consensus before you import the data into finance and operations apps.
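As a rough illustration of finding static data that is shared across verticals, the following Python sketch intersects the reference values used by each vertical. The vertical names and country codes are made-up sample data, and `common_static_values` is a hypothetical helper, not part of finance and operations apps.

```python
def common_static_values(values_by_vertical):
    """Return the values that appear in every vertical's reference list."""
    sets = [set(values) for values in values_by_vertical.values()]
    if not sets:
        return set()
    return set.intersection(*sets)


# Hypothetical country codes used by each vertical of one customer.
country_codes = {
    "manufacturing": ["US", "DE", "CN"],
    "retail": ["US", "DE", "FR"],
    "distribution": ["US", "DE", "CN", "FR"],
}

shared = common_static_values(country_codes)
print(sorted(shared))  # ['DE', 'US']
```

Values that appear in every vertical are candidates for a single, agreed import, while the remaining values might need a per-vertical review with the customer.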

Create and review test plans for data migration

Your plans for data migration need to be ready to guide you through the process. Make sure that the right system permissions are applied on resources to have a successful data migration.

Make sure that you back up the database and source files that you plan to migrate. If you encounter any issues during migration, such as corrupt, incomplete, or missing files, you need to correct the error and restore the database from the backup.

First, extract all data from the source system. Then, clean the data and transform it into the proper format for transfer to the target, which is finance and operations apps.

Finally, load your cleaned and deduplicated data into Data management in finance and operations apps, map the fields, and import the data.
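The cleaning and deduplication step can be sketched outside the application before the data is loaded. The following Python example is a minimal sketch that assumes a vendor extract with `account`, `name`, and `country` columns; the column names and sample rows are hypothetical, and real cleansing rules should come from your migration plan.

```python
def clean_record(record):
    """Trim whitespace and normalize the fields of one source row."""
    return {
        "account": record["account"].strip().upper(),
        "name": " ".join(record["name"].split()),
        "country": record["country"].strip().upper(),
    }


def deduplicate(records, key="account"):
    """Keep the first occurrence of each key, preserving source order."""
    seen = set()
    unique = []
    for record in records:
        if record[key] not in seen:
            seen.add(record[key])
            unique.append(record)
    return unique


# Hypothetical rows extracted from a legacy system.
legacy_rows = [
    {"account": " v-001", "name": "Contoso  Ltd ", "country": "us"},
    {"account": "V-001 ", "name": "Contoso Ltd", "country": "US"},
    {"account": "v-002", "name": "Fabrikam", "country": "de"},
]

cleaned = deduplicate([clean_record(row) for row in legacy_rows])
for row in cleaned:
    print(row)
```

Cleaning before deduplicating matters in this sketch: the first two rows only become recognizable duplicates after the account number is trimmed and uppercased.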

Monitor the data migration throughout the process so that you can detect and resolve issues as they arise.

After the migration is complete, ensure that there are no connectivity problems between the source system and finance and operations apps. Your goal is to make sure that all data was migrated correctly by performing unit, system, regression, and other applicable tests. Do not forget the simplest method: use the built-in reports and inquiries in finance and operations apps, and then have the customer’s key stakeholder verify the migrated data by comparing it with the legacy system.

For example, assume that you are migrating the chart of accounts, posted transactions, and so on. After you migrate the data for a specific financial period, you run the trial balance report, and then ask the CFO (or another of your customer’s financial leaders) to match the results against the financial report from the legacy system before you migrate the next period.
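A period-by-period comparison like this can be supported by a small script before the figures go to the stakeholder. The following Python sketch assumes that both trial balances have been exported as account-to-balance mappings; the account numbers, amounts, and the `reconcile_balances` helper are illustrative assumptions, not a built-in feature.

```python
from decimal import Decimal


def reconcile_balances(legacy, migrated, tolerance=Decimal("0.00")):
    """Return accounts whose balances differ or that exist on one side only."""
    differences = {}
    for account in sorted(set(legacy) | set(migrated)):
        old = legacy.get(account)
        new = migrated.get(account)
        if old is None or new is None or abs(old - new) > tolerance:
            differences[account] = (old, new)
    return differences


# Hypothetical trial balances for one financial period.
legacy_tb = {"110100": Decimal("1500.00"), "200100": Decimal("-1500.00")}
migrated_tb = {"110100": Decimal("1500.00"), "200100": Decimal("-1490.00")}

diff = reconcile_balances(legacy_tb, migrated_tb)
for account, (old, new) in diff.items():
    print(f"{account}: legacy={old} migrated={new}")
```

An empty result suggests that the period matches and the next period can be migrated. Any listed account should be investigated first. `Decimal` is used instead of floating-point numbers to avoid rounding noise in monetary amounts.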

Identify and extract source data

After you and your team have analyzed the data sources (such as a database) and identified the relevant information in a specific pattern, it is time to extract the relevant data. You might need to perform further data processing, such as adding metadata or integrating other data, which is another process in the data workflow.

Identify relevant data entities and elements

You need to know which sources of data are important for the analysis. If the information being analyzed is only loosely related to the scenario at hand, set it aside. For migration, use only data sources that are directly relevant to the scenario.

You should never bring all the data from multiple data sources to finance and operations apps in one occurrence; rather, you should have a proper plan to guide you according to the business scenario.

For example, if you are planning to bring all the purchase orders into finance and operations apps from a legacy system, there are many related entities that you should consider as well, such as vendor groups, vendors, tax codes, purchase order headers and lines, and many more.
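Because related entities depend on each other, it can help to write down the dependencies and derive an import order from them. The following Python sketch uses the standard library `graphlib` module (Python 3.9 or later). The entity names and the dependencies between them are assumptions made for this purchase order example, not the actual data entity names or sequencing rules in finance and operations apps.

```python
from graphlib import TopologicalSorter

# Each entity maps to the set of entities that must be imported before it.
# These dependencies are illustrative assumptions for this scenario.
dependencies = {
    "Vendor groups": set(),
    "Tax codes": set(),
    "Vendors": {"Vendor groups"},
    "Purchase order headers": {"Vendors"},
    "Purchase order lines": {"Purchase order headers", "Tax codes"},
}

# static_order() yields every entity after all of its prerequisites.
import_order = list(TopologicalSorter(dependencies).static_order())

for step, entity in enumerate(import_order, start=1):
    print(step, entity)
```

If the dependencies contain a cycle, `TopologicalSorter` raises `graphlib.CycleError`, which is a useful early signal that the plan needs to be revisited.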

Before you start the data migration process, identify what data you’re migrating, what format it’s currently in, where it lives, and what format it should be in after migration. Consider whether the data migration will interfere with normal business operations or contribute to downtime. If so, schedule the migration for off-hours or weekends, and communicate the potential downtime to your customer so that they can plan accordingly. Also, remember to back up the database before you start the data migration. Business continuity should always be your first priority.

Often, your source data must be changed and cleaned, or even split into more segments. This process is called data cleansing. It relies on data verification, which helps you check that the data is available, accessible, complete, and in the correct format. The first stage in data cleansing is to define which rules must be carried out manually and which can be automated.

Typically, manual cleansing will be done before migration starts, while automated cleansing might be done before or as part of the migration’s initial phase.
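The split between automated and manual cleansing rules can be sketched as two kinds of functions: one that safely fixes a row, and one that only reports problems for a person to resolve. The following Python example is a minimal sketch. The field names, the US postal code pattern, and the choice of which rule is automated are all assumptions for illustration.

```python
import re

# Assumed pattern: five digits, optionally followed by a four-digit extension.
POSTAL_CODE_US = re.compile(r"^\d{5}(-\d{4})?$")


def automated_fixes(row):
    """Apply fixes that are safe to run without human review."""
    fixed = dict(row)
    fixed["country"] = fixed["country"].strip().upper()
    fixed["postal_code"] = fixed["postal_code"].strip()
    return fixed


def manual_review_reasons(row):
    """List the problems that a person must resolve before migration."""
    reasons = []
    if not row["name"].strip():
        reasons.append("missing name")
    if row["country"] == "US" and not POSTAL_CODE_US.match(row["postal_code"]):
        reasons.append("invalid US postal code")
    return reasons


# Hypothetical source rows.
rows = [
    {"name": "Contoso", "country": " us", "postal_code": "98052 "},
    {"name": "", "country": "US", "postal_code": "9805"},
]

ready, needs_review = [], []
for row in rows:
    fixed = automated_fixes(row)
    reasons = manual_review_reasons(fixed)
    if reasons:
        needs_review.append((fixed, reasons))
    else:
        ready.append(fixed)

print(len(ready), "ready;", len(needs_review), "need manual review")
```

Rows in `needs_review` would go back to the customer for correction before migration starts, while the automated fixes can be rerun as part of the initial migration phase.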

Generate field mapping between source and target data structures

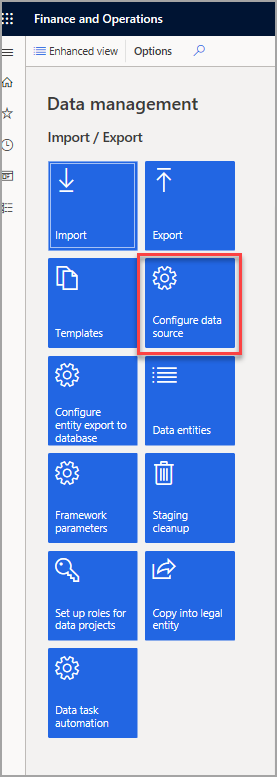

First, you need to identify the format of the data source. You can view the formats in Data management from the Configure data source tile.

In Configure data source, you can create and modify sources of data for migrations. After you have identified the data sources and their relevant entities, you can use different types of data sources, depending on the format that is exported from the external systems, some of which are legacy systems.

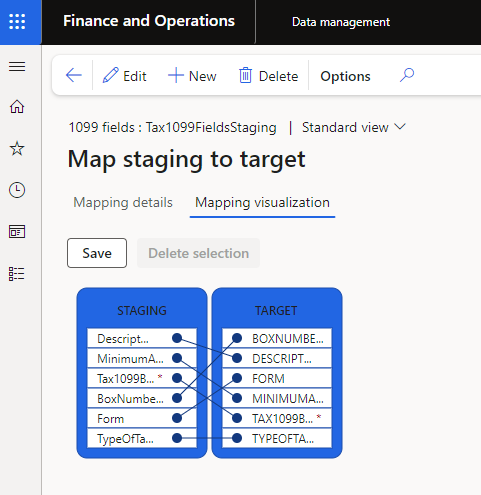

Upon adding an entity into the Data management project for either import or export, finance and operations apps creates field mapping between the source data and the target table for each data entity, which matches the schema of the table associated with the data entity.

The type of the field, and whether it is a required field, are examples of the metadata of the table that is being shown in the Mapping details tab. Here, you can choose to ignore blank values and select a text identifier to properly use the text values from the source data field.

Alternatively, for enumeration (enum) fields, you can use the labels instead of the underlying values.
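To make the mapping options concrete, the following Python sketch imitates the idea of a source-to-target field mapping that can ignore blank values and translate enum labels to values. It is a conceptual illustration only and does not show how Data management is implemented. The source field names, target field names, and enum values are all hypothetical.

```python
# Hypothetical mapping from legacy column names to target field names.
FIELD_MAP = {
    "VendAcct": "VendorAccountNumber",
    "VendName": "VendorName",
    "Blocked": "OnHoldStatus",
}

# Hypothetical enum: the source file carries labels, the target stores values.
ENUM_LABELS = {"OnHoldStatus": {"No": 0, "All": 1}}


def map_row(source_row, field_map, enum_labels=None, ignore_blank=True):
    """Build a target row from a source row by using the field mapping."""
    enum_labels = enum_labels or {}
    target = {}
    for source_field, target_field in field_map.items():
        value = source_row.get(source_field, "")
        if ignore_blank and value == "":
            continue  # Leave the target field untouched for blank source values.
        if target_field in enum_labels and value != "":
            value = enum_labels[target_field][value]  # Translate label to value.
        target[target_field] = value
    return target


source = {"VendAcct": "V-001", "VendName": "", "Blocked": "All"}
print(map_row(source, FIELD_MAP, ENUM_LABELS))
print(map_row(source, FIELD_MAP, ENUM_LABELS, ignore_blank=False))
```

With `ignore_blank=True`, the blank vendor name is skipped rather than written as an empty string, which mirrors the intent of ignoring blank values in a mapping.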

Support the transition between the existing and migrated systems

Analyzing the data between what is in the legacy system and what has been imported to finance and operations apps is an important part of data migration. This provides an overview of the source data and its corresponding target table that is represented by data entities in finance and operations apps.

It’s important to know how each system works and how the data within each system is structured. You also need to validate the information that you discovered during analysis in each phase of the data migration, and then ensure that all data is properly migrated by running reports or inquiries. By validating the data, your team can focus on structural manipulation, data movement, and data assurance.
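In addition to reports and inquiries, a simple key comparison between an export from the legacy system and an export from the target can highlight records that were lost or added unexpectedly. The following Python sketch assumes that both sides can be reduced to a list of record keys, such as vendor account numbers. The keys and the `compare_keys` helper are hypothetical.

```python
def compare_keys(legacy_keys, migrated_keys):
    """Summarize which record keys match, are missing, or are unexpected."""
    legacy, migrated = set(legacy_keys), set(migrated_keys)
    return {
        "missing_in_target": sorted(legacy - migrated),
        "unexpected_in_target": sorted(migrated - legacy),
        "matched": len(legacy & migrated),
    }


# Hypothetical vendor account numbers from each system.
legacy_vendors = ["V-001", "V-002", "V-003"]
migrated_vendors = ["V-001", "V-003", "V-004"]

summary = compare_keys(legacy_vendors, migrated_vendors)
print(summary)
```

A comparison like this checks only that records exist on both sides. Field-level correctness still needs the reports, inquiries, and stakeholder review described earlier.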