Best practices

To help you understand the best practices and recommendations for dual-write, live sync, and initial sync, the following sections take a step back to explain the overall process of a transaction and the flow of dual-write.

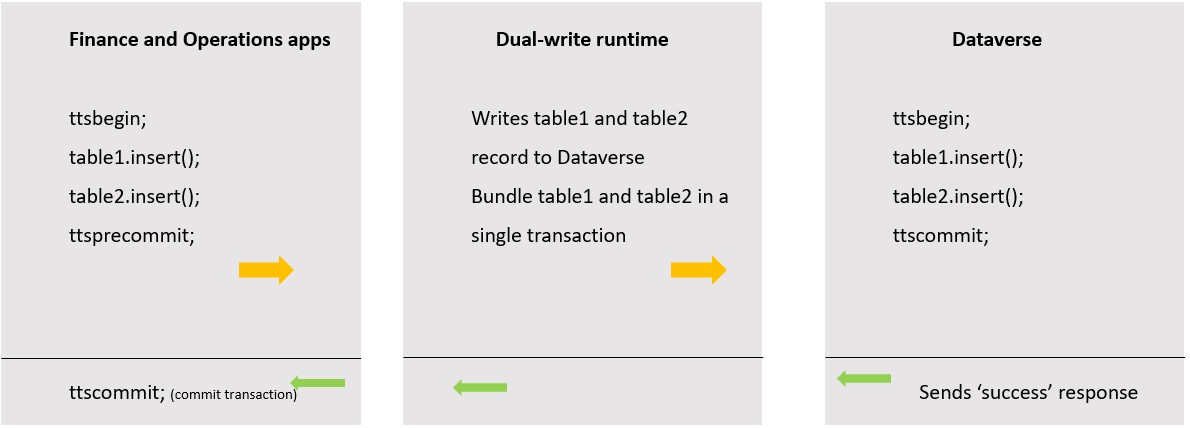

The preceding diagram shows an example of a live sync transaction and its success flow.

A transaction in the flow begins with an insert or an update, and the affected table record is written to Dataverse as part of a single transaction. The transaction is then started and committed in the Dataverse environment, which sends a success response. When the success response reaches the finance and operations apps environment, the transaction is committed there, and the process is complete. A single transaction can involve records from one table or from multiple tables. All table records for a single transaction are grouped into a single batch, and that batch must be committed successfully in every system for the transaction to succeed.

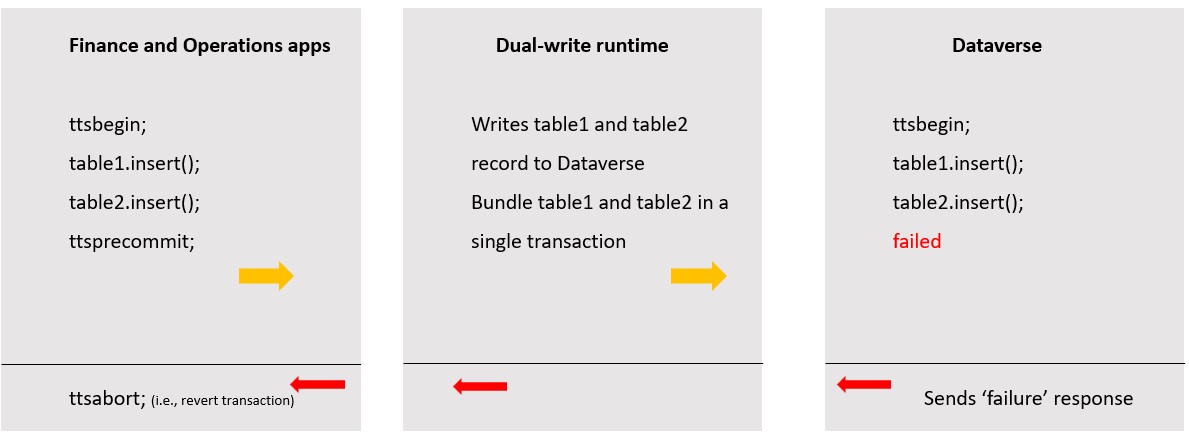

In the case of a failure, as shown in the preceding diagram, the transaction is aborted if it fails in the Dataverse environment, or anywhere else along the flow, and a failure response is returned. This failure triggers a rollback of the records in the finance and operations apps environment. When multiple records are inserted into one or more tables as part of one transaction during a process, this is referred to as a single transaction block. Multiple transaction blocks can also occur, where multiple records are inserted into one or more tables as part of several smaller transaction blocks during a dual-write process (such as a posting routine). Again, if any part of a single transaction block or of the multiple transaction blocks fails, the whole transaction fails. Transactions between the systems refer to the units of work that happen on either side of the dual-write process.
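The success and failure flows described above can be sketched as a small simulation. This is an illustrative model only, not the dual-write implementation: the `Store` class, the `live_sync` function, and the `fail_on` set are hypothetical names invented for this example, and the two in-memory stores merely stand in for the finance and operations apps environment and the Dataverse environment.

```python
from dataclasses import dataclass, field


class TargetCommitError(Exception):
    """Raised when a system rejects a batch (hypothetical error type)."""


@dataclass
class Store:
    """Toy in-memory stand-in for one system's committed records."""
    name: str
    committed: list = field(default_factory=list)
    fail_on: set = field(default_factory=set)  # records this store rejects

    def commit_batch(self, batch):
        # All-or-nothing: validate every record before committing any of them.
        for record in batch:
            if record in self.fail_on:
                raise TargetCommitError(f"{self.name} rejected {record!r}")
        self.committed.extend(batch)


def live_sync(source, target, batch):
    """Commit the batch in the target first; commit in the source only on success."""
    try:
        target.commit_batch(batch)
    except TargetCommitError:
        # Failure response: the source never commits, which models the rollback.
        return False
    source.commit_batch(batch)
    return True


fno = Store("finance and operations")
dataverse = Store("Dataverse", fail_on={"bad-record"})

print(live_sync(fno, dataverse, ["customer-1", "customer-2"]))  # batch succeeds
print(live_sync(fno, dataverse, ["customer-3", "bad-record"]))  # whole batch fails
print(fno.committed)
```

In the second call, one rejected record causes the entire batch to fail, so neither store keeps `customer-3`. This mirrors the rule that every record in the batch must commit in both systems.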

The number of transactions, the number of records for each transaction, the time-out limit for a transaction, platform limitations, and API limits or throttling can affect the performance and reliability for live sync in dual-write.

For live sync transactions from finance and operations apps to Dataverse, keep the number of transactions within any applicable API transaction limits. These limits are high, and dual-write typically doesn't generate as many transactions as most API workloads; however, it's beneficial to keep these limits in mind. You should limit a single transaction to 1000 or fewer records; dual-write rejects a transaction that contains more than 1000 records. The transaction timeout is 120 seconds (two minutes), which is the maximum allocated time. The timeout covers the business processes and any network delays in each system and isn't directly related to the number of records. Each of these factors adds to the total time that a transaction takes.
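As a rough illustration of the limits above, the following sketch checks a planned transaction against the 1000-record limit and the two-minute timeout, and shows one way to split a large record set into smaller blocks. The function names and the `estimated_seconds` input are assumptions made for this example; dual-write doesn't expose such an API. Note that splitting one transaction into several blocks changes its all-or-nothing behavior, so it's only appropriate when the business process tolerates partial completion.

```python
MAX_RECORDS_PER_TRANSACTION = 1000      # record limit stated in the guidance above
TRANSACTION_TIMEOUT_SECONDS = 2 * 60    # two-minute maximum allocated time


def check_transaction(record_count, estimated_seconds):
    """Return a list of problems for a planned transaction (empty if none)."""
    problems = []
    if record_count > MAX_RECORDS_PER_TRANSACTION:
        problems.append(
            f"{record_count} records exceeds the limit of "
            f"{MAX_RECORDS_PER_TRANSACTION}"
        )
    if estimated_seconds >= TRANSACTION_TIMEOUT_SECONDS:
        problems.append(
            f"estimated {estimated_seconds}s reaches the "
            f"{TRANSACTION_TIMEOUT_SECONDS}s timeout"
        )
    return problems


def split_into_blocks(records, size=MAX_RECORDS_PER_TRANSACTION):
    """Split records into consecutive blocks of at most `size` records."""
    return [records[i:i + size] for i in range(0, len(records), size)]


records = list(range(2500))
print(check_transaction(len(records), estimated_seconds=45))
print([len(block) for block in split_into_blocks(records)])
```

Because the timeout depends on business logic and network latency rather than record count alone, a block that passes the record-count check can still time out; the estimate passed to `check_transaction` has to come from measurements in your own environment.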

For live sync transactions from Dataverse to finance and operations apps, these concepts differ slightly. The number of transactions is determined by the priority-based throttling that's associated with the environment. Dual-write often doesn't reach these limits, but they're a useful point of reference if throttling issues arise. No limit is currently imposed on the number of records in a transaction, but the transaction must be completed within the two-minute timeout. The business processes behind entities in finance and operations apps are often complex, and their business logic runs as part of the transaction, which also counts toward the two-minute timeout window.

For more information, see Service protection API limits and Throttling prioritization.