Hands On with Dynamics CRM 2011's New ExecuteMultiple Request

Microsoft Dynamics CRM 2011 Update Rollup 12 is now available, and includes a brand new OrganizationRequest that allows developers to batch process other requests. In this article, we’ll take a look at the new request, and explore why this new functionality can introduce huge performance gains in certain scenarios.

The Problem

Over the last couple of months, I’ve been working with a customer making use of this new functionality to increase data load performance. Data load scenarios can be a huge challenge where there are performance requirements, especially when dealing with large data sets, and particularly when working with Dynamics CRM 2011 Online.

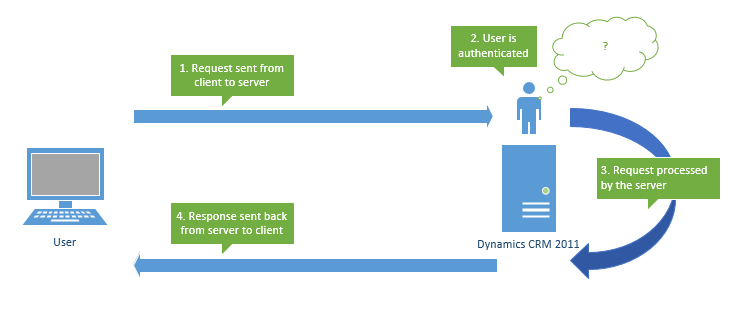

To understand why this can be a challenge, we need to understand how records are typically processed without ExecuteMultiple. Imagine we’re writing an application that loads contact records into Dynamics CRM. At some point in the code, for each row in our data source that represents a contact, we’ll send a single CreateRequest to the server. The journey that each request takes is illustrated below.

Each of the stages (1 – 4) takes a certain amount of time. The time taken for the request to travel from the client to the server (or the other way – stages 1 and 4) is known as the latency. You can view the latency you’re getting between your client and server at https://<server>:<port>/<org>/tools/diagnostics/diag.aspx. For example, typically I get around 15ms latency between my client and server. To put that into context, that means 30ms of every request sent to Dynamics CRM is spent travelling. That’s 8 hours 20 minutes on a very simple one million record import without even doing any processing on either the client or server (1,000,000 * 0.03 = 30,000 seconds, or 500 minutes). Furthermore, 15ms is a very low latency. Additionally, each request is authenticated by the server as it’s received. Again, this fixed overhead on each request adds time to our data import.

It’s also common to need to perform additional OrganizationRequests for each row. Imagine we’d written our routine to perform a RetrieveMultiple query prior to sending the CreateRequest, perhaps to look up a related value, or check if the record already exists. This doubles the number of web requests, thus doubling the effects of the latency and processing overhead on the server.
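The arithmetic in the two paragraphs above can be checked with a few lines of Python. This is only a sketch: the function names are made up for illustration, and it assumes every request pays the same 15ms latency in each direction and nothing else.

```python
def travel_seconds(rows: int, one_way_latency_ms: int, requests_per_row: int = 1) -> float:
    """Time spent purely on the wire; each request pays the latency out and back."""
    total_ms = rows * requests_per_row * 2 * one_way_latency_ms
    return total_ms / 1000


def as_hms(seconds: float) -> str:
    """Format a duration in seconds as hours, minutes and seconds."""
    hours, remainder = divmod(round(seconds), 3600)
    minutes, secs = divmod(remainder, 60)
    return f"{hours}h {minutes:02d}m {secs:02d}s"


# One CreateRequest per row.
create_only = travel_seconds(1_000_000, 15)

# One RetrieveMultiple plus one CreateRequest per row.
with_lookup = travel_seconds(1_000_000, 15, requests_per_row=2)

print(as_hms(create_only))   # 8h 20m 00s
print(as_hms(with_lookup))   # 16h 40m 00s
```

One million rows at 30ms per round trip is 30,000 seconds of pure travelling, and adding a lookup per row doubles it.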

ExecuteMultiple

ExecuteMultiple allows us to batch a number of other OrganizationRequests into a single request. These can be requests of different types, for example, a mixture of CreateRequest, UpdateRequest, and RetrieveMultipleRequests if desired. Each request is independent of other requests in the same batch, so you could not, for example, execute a CreateRequest followed by an UpdateRequest using the newly created ID within the same batch. Requests are executed in the order in which they are added to the request collection, and return results in the same order.
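To make those semantics concrete, here is a toy model in Python. It is not the SDK and does not talk to CRM; `ResponseItem`, `execute_batch` and the other names are hypothetical stand-ins. It also assumes the batch carries on after a failed request, which depends on how the real request is configured.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class ResponseItem:
    request_index: int        # position of the request within the batch
    result: Optional[object]  # value returned by the request, if it succeeded
    fault: Optional[str]      # error message, if it failed


def execute_batch(requests: List[Callable[[], object]]) -> List[ResponseItem]:
    """Run the requests in the order given and report one item per request."""
    responses = []
    for index, request in enumerate(requests):
        try:
            responses.append(ResponseItem(index, request(), None))
        except Exception as error:
            # A failed request is recorded; earlier requests are not undone.
            responses.append(ResponseItem(index, None, str(error)))
    return responses


contacts: Dict[int, str] = {}  # stand-in for the contact table


def create_contact(name: str) -> Callable[[], int]:
    """Build a request that creates a contact and returns its new id."""
    def run() -> int:
        new_id = len(contacts) + 1
        contacts[new_id] = name
        return new_id
    return run


def failing_request() -> int:
    """Build-time placeholder for a request that the server rejects."""
    raise ValueError("simulated fault")


responses = execute_batch([create_contact("Ann"), failing_request, create_contact("Bob")])
for item in responses:
    print(item.request_index, item.result, item.fault)
```

All three requests are built before the batch is sent, which is why a later request cannot use an ID generated by an earlier one in the same batch.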

The default batch size (which is configurable for On-Premise installations – more on that later) is 1000. So in the 1 million record import example above, instead of sending 1,000,000 CreateRequests, we can send 1,000 batches of 1,000. With a latency of 15ms, the travelling time is cut from 8 hours 20 minutes (30,000 seconds) to only 30 seconds (1,000 * 0.03). Additionally, the server only receives and authenticates 1,000 requests, saving additional processing time.
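The batching itself is simple to sketch. The snippet below is illustrative Python rather than SDK code: `batches` is a hypothetical helper, and `rows` is a stand-in for whatever data source is being loaded. It assumes the same 30ms round trip as the earlier example.

```python
from typing import Iterator, Sequence


def batches(rows: Sequence, batch_size: int = 1000) -> Iterator[Sequence]:
    """Yield consecutive slices of at most batch_size rows, preserving order."""
    for start in range(0, len(rows), batch_size):
        yield rows[start:start + batch_size]


rows = range(1_000_000)  # stand-in for the rows of the data source
batch_count = sum(1 for _ in batches(rows))

round_trip_ms = 30       # 15ms each way, as in the example above
batched_travel_seconds = batch_count * round_trip_ms / 1000

print(batch_count)             # 1000
print(batched_travel_seconds)  # 30.0
```

When the row count is not a multiple of the batch size, the final batch is simply shorter; 2,500 rows give batches of 1,000, 1,000 and 500.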

Obviously the impact of such a reduction in time needs to be looked at in context. If most of your 1 million record data import is spent on server-side processing rather than travelling, the overall saving will be proportionally smaller. Having said that, preliminary testing of the functionality I’ve been working on recently has shown the use of ExecuteMultiple to increase the speed of simple imports to un-customized On-Premise environments by around 35-40%, which is a significant improvement. I would expect this difference to be less in more typical environments, for example, where server side customizations are present.

The new SDK page “Use ExecuteMultiple to Improve Performance for Bulk Data Load” provides a great technical explanation of how to use the functionality, as well as a good code sample.

Batch Size

The maximum batch size allowed for an online deployment of Microsoft Dynamics CRM 2011 is 1,000, which is also the default value for an On-Premise installation. This value can be configured On-Premise if necessary, but it’s important to understand the implications of doing so.

So why not insert, say, 100,000 records in a single web request? There are a number of reasons why this is not a good idea. I decided to test this with another simple data import routine, creating 100,000 contact records, with first and last names, and an email address. I set ExecuteMultipleMaxBatchSize to 100,000 and ran my console application.

Request Size

Almost immediately, my application failed with a 404 error, which was odd as I had previously tested the application with a more sensible batch size (so I knew all URLs were correct) and the server was still working in the browser. On further investigation I noticed my single web request was 147MB, far exceeding the maxAllowedContentLength parameter set in the web.config on the server. I altered this accordingly and retried.
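As a rough sanity check on that figure, the per-record payload can be estimated from the total. This is a back-of-the-envelope sketch only: it assumes "MB" means 2**20 bytes and that the request size grows linearly with the number of records, ignoring any fixed envelope overhead.

```python
MB = 1024 * 1024                    # assumption: "MB" means 2**20 bytes

request_bytes = 147 * MB            # the single 100,000-record request
bytes_per_record = request_bytes / 100_000

# Estimated size of a request holding the default batch of 1,000 records.
default_batch_mb = bytes_per_record * 1000 / MB

print(round(bytes_per_record))      # 1541
print(round(default_batch_mb, 2))   # 1.47
```

Under those assumptions each simple contact costs around 1.5KB on the wire, so a default-sized batch of 1,000 would be roughly 1.5MB.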

Again the application failed, although this time it took a little more time to do so. This time I found the answer in the server trace files – the maximum request length had been exceeded. Again I altered the server’s web.config accordingly.
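For reference, the two limits involved look something like the fragment below. Treat it as an assumption-laden sketch rather than the exact change I made: the article names `maxAllowedContentLength` explicitly, while I am assuming the second limit is the ASP.NET `maxRequestLength` attribute on `httpRuntime`, and the 200MB values are purely illustrative. Note the different units. In a real CRM web.config these attributes would be merged into the existing elements rather than pasted in as new ones.

```xml
<configuration>
  <system.web>
    <!-- ASP.NET request limit, in kilobytes (204800 KB = 200 MB, illustrative) -->
    <httpRuntime maxRequestLength="204800" />
  </system.web>
  <system.webServer>
    <security>
      <requestFiltering>
        <!-- IIS request filtering limit, in bytes (209715200 = 200 MB, illustrative) -->
        <requestLimits maxAllowedContentLength="209715200" />
      </requestFiltering>
    </security>
  </system.webServer>
</configuration>
```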

Request Execution Time

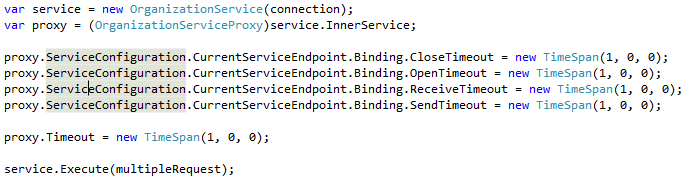

With the necessary web.config values in place, I retried my application. This time the application timed out after two minutes. My Dynamics CRM 2011 environment was a single, self-contained machine running both CRM and SQL Server with only 4GB of RAM. I find this is typically fine for development, but clearly the machine was struggling to create 100,000 records within the default timeout window. To accommodate this, I increased the timeout values on my OrganizationServiceProxy object, setting them to time out after 1 hour rather than the default two minutes.

I ran the application again, which ran for an hour and then timed out.

Risk of Failure

A common misconception that I’ve heard a few people mention with ExecuteMultiple is that it is transaction aware. This is absolutely not the case. Each child request is processed in isolation, and if your ExecuteMultiple request fails midway through, any requests executed prior to failure are not rolled back. For example, after the 1 hour timeout mentioned above, I checked my database and noted 93,000 records had been successfully created. Unfortunately, my client code was unable to capture this information because the request timed out before a response was sent back, which, if this was a real environment, would have left me with a potentially difficult data clean-up exercise.
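The numbers from this failed run also give a rough idea of what batch size the test machine could have handled. The sketch below assumes the server created records at a constant rate throughout the hour, which is a simplification, and it only describes my small development machine.

```python
created_records = 93_000        # records found in the database after the timeout
elapsed_seconds = 3600          # the 1 hour client timeout
default_timeout_seconds = 120   # the default two minute timeout

records_per_second = created_records / elapsed_seconds

# Largest batch that would finish inside the default timeout at that rate
# (integer arithmetic avoids floating point rounding).
largest_batch_in_default_timeout = (
    created_records * default_timeout_seconds // elapsed_seconds
)

# Time a default-sized batch of 1,000 would need at the same rate.
seconds_per_1000 = 1000 / records_per_second

print(round(records_per_second, 1))       # 25.8
print(largest_batch_in_default_timeout)   # 3100
print(round(seconds_per_1000, 1))         # 38.7
```

At roughly 26 records per second, a default batch of 1,000 would complete in about 39 seconds, comfortably inside the two minute window. Smaller batches also limit the damage of a timeout: at most one batch of 1,000 is left in an unknown state, rather than 100,000 records.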

Conclusion

So when considering a non-default batch size, it’s important to understand how this affects your ExecuteMultiple requests, to ensure the server will accept large requests and process them in a time that does not risk timeouts.

The ExecuteMultiple request is a great enhancement to the SDK that offers significant performance gains when dealing with bulk operations. Version 5.0.13 of the SDK is available now.

Dave Burman, Consultant, Microsoft Consulting Services UK

Comments

Anonymous

January 09, 2013

Great Article! cannot wait to try this ExecuteMultiple message.

Anonymous

January 09, 2013

Nice article Dave! Very informative.

Anonymous

January 09, 2013

Very useful, thanks!

Anonymous

January 10, 2013

I wonder how the OrganizationServiceContext.SaveChanges() works internally and if it's using this new request. Either way, great write-up.

Anonymous

July 10, 2013

This is cool we've been playing with this and our Simego Data Sync product and managed to achieve around 333 Contacts per sec or ~ 50,000 in 2.5 Minutes to CRM Online.