Designing and implementing a GenAI gateway solution

1. Purpose

This guide helps engineering teams design and implement a GenAI gateway solution for Azure OpenAI or similar hosted LLMs. It offers guidance and reference designs to achieve the following GenAI optimizations:

- Resource utilization

- Integrated workloads

- Enablement of monitoring and billing

Note: This document focuses on guidance rather than implementation details. The key gateway features it covers include:

- Authentication

- Observability

- Compliance

- Controls

2. Definition & Problem Statement

2.1. Problem Statement

Organizations using Large Language Models (LLMs) face challenges in federating and managing GenAI resources. As demand for diverse LLMs grows, they need a centralized solution to integrate, optimize, and distribute workloads across a federated network. Traditional gateway solutions often lack a unified approach to these resources, which leads to suboptimal resource utilization, increased latency, and management difficulties.

What is a GenAI Gateway?

A "GenAI gateway" is an intelligent middleware layer that dynamically balances incoming traffic across backend resources to optimize resource utilization. It can also help address billing and monitoring challenges.
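To make "dynamically balances" concrete, the sketch below routes each request to the backend with the most remaining token capacity for the current minute. It is a minimal illustration under stated assumptions: the `Backend` class, its fields, the deployment names, and the quota figures are all hypothetical, and a production gateway would also weigh health checks, latency, and throttling (HTTP 429) responses from backends.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Backend:
    """A hypothetical LLM deployment with a per-minute token budget."""

    name: str
    tpm_limit: int      # tokens allowed per minute (assumed quota)
    tpm_used: int = 0   # tokens already consumed in the current minute

    @property
    def remaining(self) -> int:
        return self.tpm_limit - self.tpm_used


def pick_backend(backends: List[Backend], estimated_tokens: int) -> Optional[Backend]:
    """Return the backend with the most remaining TPM that can fit the request."""
    candidates = [b for b in backends if b.remaining >= estimated_tokens]
    if not candidates:
        return None  # every backend is saturated: queue, retry later, or reject
    return max(candidates, key=lambda b: b.remaining)


if __name__ == "__main__":
    pool = [
        Backend("eastus-gpt", tpm_limit=30_000, tpm_used=25_000),
        Backend("westus-gpt", tpm_limit=20_000, tpm_used=5_000),
    ]
    chosen = pick_backend(pool, estimated_tokens=2_000)
    print(chosen.name if chosen else "no capacity")  # westus-gpt
```

Choosing by remaining capacity, rather than plain round-robin, keeps a nearly exhausted deployment from receiving requests it would throttle anyway.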

Figure 1 summarizes the key benefits that a GenAI gateway can provide.

|

|---|

| Figure 1: Key Benefits of GenAI Gateway |

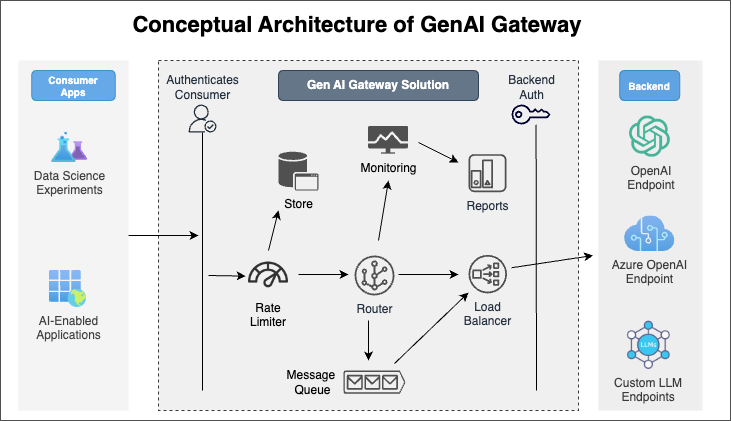

2.2. Conceptual architecture of a GenAI gateway

Figure 2 shows the conceptual architecture of a GenAI gateway and its high-level components.

Figure 2: Conceptual Architecture

3. Recommended Pre-Reading

Readers should first familiarize themselves with key Azure OpenAI concepts and terminology, which provide the foundation for the rest of this guide.

4. Complexity in Building a GenAI Gateway

Large Language Models (LLMs) are typically accessed through REST interfaces, which makes calling their endpoints straightforward. In large enterprises, these REST resources are usually placed behind a gateway that provides centralized control over access and usage. The gateway enables effective implementation of policies such as:

- Rate limiting

- Authentication

- Data privacy controls

Traditional API gateways handle rate limiting and load balancing by:

- Regulating the number of requests over a time window

- Using techniques such as throttling

- Distributing requests across multiple backends
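A traditional request-count limit of this kind can be sketched as a sliding window over request timestamps. This is a minimal illustration, not how any particular gateway product implements it; the class name, the injectable `clock` parameter, and the 60-second default window are assumptions made for the example.

```python
import time
from collections import deque
from typing import Callable, Deque


class RequestRateLimiter:
    """Sliding-window limiter that caps how many requests are admitted per window."""

    def __init__(self, max_requests: int, window_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the window can be tested deterministically
        self._timestamps: Deque[float] = deque()

    def allow(self) -> bool:
        """Return True and record the request if it fits in the current window."""
        now = self.clock()
        # Drop timestamps that have fallen out of the sliding window.
        while self._timestamps and now - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        if len(self._timestamps) < self.max_requests:
            self._timestamps.append(now)
            return True
        return False  # a gateway would typically answer HTTP 429 here
```

This limiter counts requests only: a single request carrying a very large prompt costs the same as a tiny one, which is exactly the gap a GenAI gateway has to close.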

LLM resources add a further constraint that must be managed: Tokens Per Minute (TPM). A GenAI gateway must regulate both the number of requests and the total number of tokens processed across those requests. This is crucial because LLM usage is often billed by the number of tokens processed, so managing TPM effectively is essential for controlling costs and using resources efficiently.
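The dual constraint can be sketched by extending a sliding window to track token counts alongside request timestamps. In this hypothetical `TokenAwareLimiter`, a request is admitted only if it fits within both the requests-per-minute and tokens-per-minute budgets. The fixed 60-second window and the in-memory state are simplifying assumptions; a gateway running as several instances would need shared state.

```python
import time
from collections import deque
from typing import Callable, Deque, Tuple

WINDOW_SECONDS = 60.0  # one-minute window, matching RPM/TPM semantics


class TokenAwareLimiter:
    """Sliding-window limiter enforcing both requests-per-minute and tokens-per-minute."""

    def __init__(self, rpm: int, tpm: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rpm = rpm
        self.tpm = tpm
        self.clock = clock
        self._events: Deque[Tuple[float, int]] = deque()  # (timestamp, tokens)
        self._tokens_in_window = 0

    def _evict(self, now: float) -> None:
        # Forget requests older than the window and give their tokens back.
        while self._events and now - self._events[0][0] >= WINDOW_SECONDS:
            _, tokens = self._events.popleft()
            self._tokens_in_window -= tokens

    def try_acquire(self, tokens: int) -> bool:
        """Admit a request only if it fits within both the RPM and TPM budgets."""
        now = self.clock()
        self._evict(now)
        if len(self._events) + 1 > self.rpm:
            return False  # request-count budget exhausted
        if self._tokens_in_window + tokens > self.tpm:
            return False  # token budget exhausted
        self._events.append((now, tokens))
        self._tokens_in_window += tokens
        return True
```

With `rpm=10, tpm=1_000`, a 600-token request followed by a 500-token request is rejected on the second call even though only one request has been made, which is the behavior a request-count limiter alone cannot provide.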

5. Key Considerations While Building a GenAI Gateway

Because of the Tokens Per Minute (TPM) constraint, traditional gateways must be adapted to the unique challenges posed by AI endpoints.
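One such challenge is that a request's full token cost is only known after the model responds, because completion length varies. One way to handle this, sketched here under stated assumptions, is to reserve an upper-bound estimate up front (estimated prompt tokens plus the request's `max_tokens`) and release the difference once the backend reports actual usage. The roughly-4-characters-per-token heuristic is only an approximation for English text; an exact count requires the model's tokenizer. The function names are hypothetical, and the `usage["total_tokens"]` field mirrors the usage object in chat completion responses.

```python
import math
from typing import Dict, List


def estimate_prompt_tokens(messages: List[Dict[str, str]]) -> int:
    """Roughly estimate prompt tokens with the ~4-characters-per-token heuristic.

    Approximation only: it ignores per-message formatting overhead, and an exact
    count requires the target model's tokenizer.
    """
    chars = sum(len(str(m.get("content") or "")) for m in messages)
    return math.ceil(chars / 4)


def tokens_to_reserve(messages: List[Dict[str, str]], max_tokens: int) -> int:
    """Upper-bound reservation: estimated prompt tokens plus the completion ceiling."""
    return estimate_prompt_tokens(messages) + max_tokens


def tokens_to_release(reserved: int, usage: Dict[str, int]) -> int:
    """Tokens to hand back to the TPM budget once the backend reports actual usage.

    A negative result means the estimate undershot and the shortfall should be
    charged to the budget instead.
    """
    return reserved - usage["total_tokens"]


if __name__ == "__main__":
    msgs = [{"role": "user", "content": "x" * 400}]
    reserved = tokens_to_reserve(msgs, max_tokens=256)
    print(reserved)  # 356
    print(tokens_to_release(reserved, {"total_tokens": 300}))  # 56
```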

These guidelines are based on Azure OpenAI but can also be applied to custom-hosted LLMs or other GenAI resources. Key considerations for building a GenAI gateway, in line with the Azure Well-Architected Framework, include:

- Scalability

- Performance Efficiency

- Security and Data Integrity

- Operational Excellence

- Cost Optimization
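Cost optimization and operational excellence both depend on knowing which consumer used which tokens. A minimal per-consumer metering sketch follows; the `UsageMeter` class, the consumer IDs, and the per-1,000-token prices are hypothetical placeholders, since real rates differ by model and deployment type.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class UsageMeter:
    """Accumulates token usage per consumer so costs can be charged back."""

    # Hypothetical prices per 1,000 tokens; real rates vary by model and offer.
    prompt_price_per_1k: float
    completion_price_per_1k: float
    # consumer_id -> {"prompt": tokens, "completion": tokens}
    totals: Dict[str, Dict[str, int]] = field(default_factory=dict)

    def record(self, consumer_id: str, prompt_tokens: int, completion_tokens: int) -> None:
        """Add one response's reported usage to the consumer's running totals."""
        entry = self.totals.setdefault(consumer_id, {"prompt": 0, "completion": 0})
        entry["prompt"] += prompt_tokens
        entry["completion"] += completion_tokens

    def cost(self, consumer_id: str) -> float:
        """Return the accumulated cost for a consumer (0.0 if never seen)."""
        entry = self.totals.get(consumer_id, {"prompt": 0, "completion": 0})
        return (entry["prompt"] / 1000 * self.prompt_price_per_1k
                + entry["completion"] / 1000 * self.completion_price_per_1k)
```

Prompt and completion tokens are kept separate because many LLM offerings price them differently.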

6. Summary

This document provides a foundational understanding of the key concepts and practical strategies for implementing a GenAI gateway. It addresses the challenge of efficiently federating and managing GenAI resources, which is essential for applications that use Azure OpenAI or custom Large Language Models (LLMs). It applies industry-standard architectural frameworks to categorize and address the complexities of building a GenAI gateway, and it offers reference designs that follow established best practices.