The following steps create and configure the components required for the Azure Monitor edge pipeline by using the Azure CLI.

Edge pipeline extension

The following command adds the edge pipeline extension to your Arc-enabled Kubernetes cluster.

az k8s-extension create --name <pipeline-extension-name> --extension-type microsoft.monitor.pipelinecontroller --scope cluster --cluster-name <cluster-name> --resource-group <resource-group> --cluster-type connectedClusters --release-train Preview

## Example

az k8s-extension create --name my-pipe --extension-type microsoft.monitor.pipelinecontroller --scope cluster --cluster-name my-cluster --resource-group my-resource-group --cluster-type connectedClusters --release-train Preview

Custom location

The following command creates a custom location for your Arc-enabled Kubernetes cluster.

az customlocation create --name <custom-location-name> --resource-group <resource-group-name> --namespace <name of namespace> --host-resource-id <connectedClusterId> --cluster-extension-ids <extensionId>

## Example

az customlocation create --name my-cluster-custom-location --resource-group my-resource-group --namespace my-cluster-custom-location --host-resource-id /subscriptions/aaaa0a0a-bb1b-cc2c-dd3d-eeeeee4e4e4e/resourceGroups/my-resource-group/providers/Microsoft.Kubernetes/connectedClusters/my-cluster --cluster-extension-ids /subscriptions/aaaa0a0a-bb1b-cc2c-dd3d-eeeeee4e4e4e/resourceGroups/my-resource-group/providers/Microsoft.Kubernetes/connectedClusters/my-cluster/providers/Microsoft.KubernetesConfiguration/extensions/my-cluster
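The two resource IDs passed to `--host-resource-id` and `--cluster-extension-ids` follow the pattern visible in the example above. The sketch below assembles them from their parts; the helper names `connected_cluster_id` and `cluster_extension_id` are hypothetical and exist only for illustration.

```python
def connected_cluster_id(subscription_id, resource_group, cluster_name):
    """Resource ID of an Arc-enabled Kubernetes cluster (value for --host-resource-id)."""
    return (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Kubernetes/connectedClusters/{cluster_name}"
    )


def cluster_extension_id(cluster_id, extension_name):
    """Resource ID of an extension on that cluster (value for --cluster-extension-ids)."""
    return (
        f"{cluster_id}/providers/Microsoft.KubernetesConfiguration"
        f"/extensions/{extension_name}"
    )


# Values taken from the example command above.
cluster_id = connected_cluster_id(
    "aaaa0a0a-bb1b-cc2c-dd3d-eeeeee4e4e4e", "my-resource-group", "my-cluster"
)
extension_id = cluster_extension_id(cluster_id, "my-cluster")
print(cluster_id)
print(extension_id)
```

The extension ID is the cluster ID with the extension path appended, so a mismatch between the two arguments is easy to spot.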

DCE

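The following command creates the data collection endpoint (DCE) that the edge pipeline needs to connect to the cloud pipeline. If you already have a DCE in the same region, you can use it instead. Replace the placeholder values before you run the command.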

az monitor data-collection endpoint create -g "myResourceGroup" -l "eastus2euap" --name "myCollectionEndpoint" --public-network-access "Enabled"

## Example

az monitor data-collection endpoint create --name strato-06-dce --resource-group strato --public-network-access "Enabled"

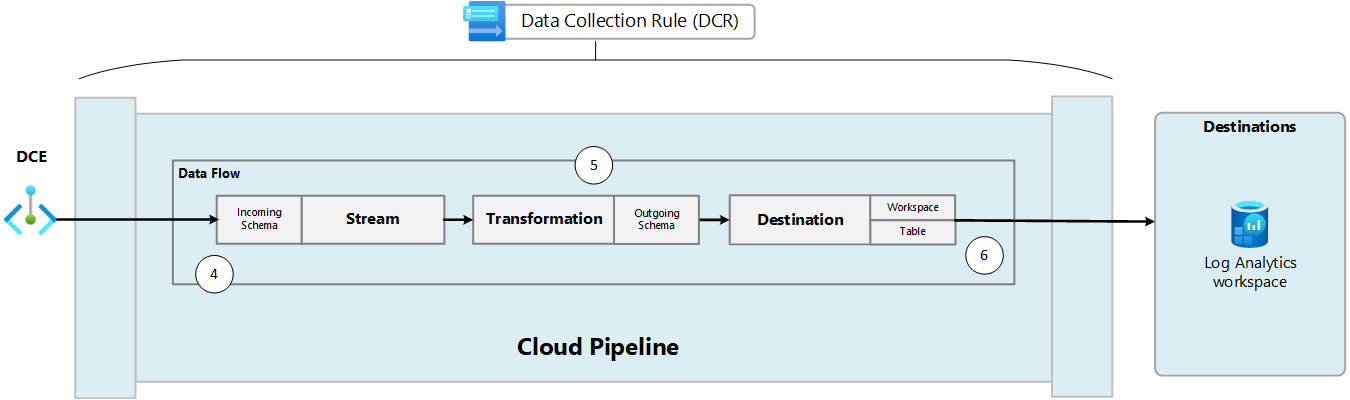

DCR

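The DCR is stored in Azure Monitor and defines how data is processed when it's received from the edge pipeline. The edge pipeline configuration specifies the immutable ID of the DCR and the stream in the DCR that processes the data. The immutable ID is generated automatically when the DCR is created.

Before you run the CLI command to create the DCR, replace the properties in the following template and save it to a JSON file. For details on the structure of a DCR, see "Structure of a data collection rule in Azure Monitor".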

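| Parameter | Description |
|:---|:---|
| `name` | Name of the DCR. Must be unique for the subscription. |
| `location` | Location of the DCR. Must match the location of the DCE. |
| `dataCollectionEndpointId` | Resource ID of the DCE. |
| `streamDeclarations` | Schema of the data being received. One stream is required for each data flow in the pipeline configuration. The name must be unique in the DCR and must begin with `Custom-`. Use the `columns` sections in the sample below for the OTLP and Syslog data flows. If the schema of the destination table is different, you can modify the data with a transformation defined in the `transformKql` parameter. |
| `destinations` | Add additional sections to send data to multiple workspaces. |
| - `name` | Name for the destination to reference in the `dataFlows` section. Must be unique for the DCR. |
| - `workspaceResourceId` | Resource ID of the Log Analytics workspace. |
| - `workspaceId` | Workspace ID of the Log Analytics workspace. |
| `dataFlows` | Matches streams and destinations. One entry for each combination of stream and destination. |
| - `streams` | One or more streams (as defined in `streamDeclarations`). You can include multiple streams if they're sent to the same destination. |
| - `destinations` | One or more destinations (as defined in `destinations`). You can include multiple destinations if the same data is sent to each of them. |
| - `transformKql` | Transformation to apply to the data before sending it to the destination. Use `source` to send the data without any changes. The output of the transformation must match the schema of the destination table. For details on transformations, see "Data collection transformations in Azure Monitor". |
| - `outputStream` | Specifies the destination table in the Log Analytics workspace. The table must already exist in the workspace. For custom tables, prefix the table name with `Custom-`. Built-in tables aren't currently supported with the edge pipeline. |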

{

"properties": {

"dataCollectionEndpointId": "/subscriptions/aaaa0a0a-bb1b-cc2c-dd3d-eeeeee4e4e4e/resourceGroups/my-resource-group/providers/Microsoft.Insights/dataCollectionEndpoints/my-dce",

"streamDeclarations": {

"Custom-OTLP": {

"columns": [

{

"name": "Body",

"type": "string"

},

{

"name": "TimeGenerated",

"type": "datetime"

},

{

"name": "SeverityText",

"type": "string"

}

]

},

"Custom-Syslog": {

"columns": [

{

"name": "Body",

"type": "string"

},

{

"name": "TimeGenerated",

"type": "datetime"

},

{

"name": "SeverityText",

"type": "string"

}

]

}

},

"dataSources": {},

"destinations": {

"logAnalytics": [

{

"name": "LogAnayticsWorkspace01",

"workspaceResourceId": "/subscriptions/aaaa0a0a-bb1b-cc2c-dd3d-eeeeee4e4e4e/resourceGroups/my-resource-group/providers/Microsoft.OperationalInsights/workspaces/my-workspace"

}

]

},

"dataFlows": [

{

"streams": [

"Custom-OTLP"

],

"destinations": [

"LogAnayticsWorkspace01"

],

"transformKql": "source",

"outputStream": "Custom-OTelLogs_CL"

},

{

"streams": [

"Custom-Syslog"

],

"destinations": [

"LogAnayticsWorkspace01"

],

"transformKql": "source",

"outputStream": "Custom-Syslog_CL"

}

]

}

}
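The parameter table above implies a few consistency rules: stream names begin with `Custom-`, and every entry in `dataFlows` refers to a declared stream and a defined destination. A minimal sketch of a pre-flight check for a DCR file follows. The helper `check_dcr` is hypothetical, not part of any Azure tooling, and it covers only these rules.

```python
def check_dcr(dcr):
    """Return a list of consistency problems found in a DCR definition."""
    props = dcr["properties"]
    problems = []
    declared_streams = set(props.get("streamDeclarations", {}))
    defined_destinations = {
        dest["name"]
        for dest in props.get("destinations", {}).get("logAnalytics", [])
    }
    # Stream names must begin with "Custom-".
    for stream in sorted(declared_streams):
        if not stream.startswith("Custom-"):
            problems.append(f"stream '{stream}' does not begin with 'Custom-'")
    # Each data flow may only use declared streams and defined destinations.
    for index, flow in enumerate(props.get("dataFlows", [])):
        for stream in flow.get("streams", []):
            if stream not in declared_streams:
                problems.append(
                    f"dataFlows[{index}] references undeclared stream '{stream}'"
                )
        for dest in flow.get("destinations", []):
            if dest not in defined_destinations:
                problems.append(
                    f"dataFlows[{index}] references undefined destination '{dest}'"
                )
    return problems


# Illustrative DCR fragment with one deliberate mistake in the second data flow.
sample_dcr = {
    "properties": {
        "streamDeclarations": {
            "Custom-OTLP": {"columns": []},
            "Custom-Syslog": {"columns": []},
        },
        "destinations": {"logAnalytics": [{"name": "workspace-01"}]},
        "dataFlows": [
            {"streams": ["Custom-OTLP"], "destinations": ["workspace-01"]},
            {"streams": ["Custom-Syslog"], "destinations": ["workspace-02"]},
        ],
    }
}
problems = check_dcr(sample_dcr)
print(problems)
```

To check a real file, load it with `json.load` and pass the result to `check_dcr` before running the create command. `json.load` also rejects syntax errors such as trailing commas.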

Install the DCR with the following command.

az monitor data-collection rule create --name 'myDCRName' --location <location> --resource-group <resource-group> --rule-file '<dcr-file-path.json>'

## Example

az monitor data-collection rule create --name my-pipeline-dcr --location westus2 --resource-group 'my-resource-group' --rule-file 'C:\MyDCR.json'

Access to the DCR

To send data to the cloud pipeline, the Arc-enabled Kubernetes cluster must have access to the DCR. Use the following command to retrieve the object ID of the system-assigned identity for your cluster.

az k8s-extension show --name <extension-name> --cluster-name <cluster-name> --resource-group <resource-group> --cluster-type connectedClusters --query "identity.principalId" -o tsv

## Example:

az k8s-extension show --name my-pipeline-extension --cluster-name my-cluster --resource-group my-resource-group --cluster-type connectedClusters --query "identity.principalId" -o tsv

Use the output of this command as input to the following command, which grants the Azure Monitor pipeline permission to send telemetry to the DCR.

az role assignment create --assignee "<extension principal ID>" --role "Monitoring Metrics Publisher" --scope "/subscriptions/<subscription-id>/resourceGroups/<resource-group>/providers/Microsoft.Insights/dataCollectionRules/<dcr-name>"

## Example:

az role assignment create --assignee "00000000-0000-0000-0000-000000000000" --role "Monitoring Metrics Publisher" --scope "/subscriptions/aaaa0a0a-bb1b-cc2c-dd3d-eeeeee4e4e4e/resourceGroups/my-resource-group/providers/Microsoft.Insights/dataCollectionRules/my-dcr"
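When scripting these two steps, the principal ID from the first command becomes the `--assignee` of the second. The sketch below only builds the argument list for the role assignment shown above and does not call Azure. The helper name `role_assignment_args` is hypothetical; the flags and role name are the ones used in the command above.

```python
import shlex


def role_assignment_args(principal_id, subscription_id, resource_group, dcr_name):
    """Build the argument list for the 'az role assignment create' call shown above."""
    scope = (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Insights/dataCollectionRules/{dcr_name}"
    )
    return [
        "az", "role", "assignment", "create",
        "--assignee", principal_id,
        "--role", "Monitoring Metrics Publisher",
        "--scope", scope,
    ]


# Values taken from the example above.
args = role_assignment_args(
    "00000000-0000-0000-0000-000000000000",
    "aaaa0a0a-bb1b-cc2c-dd3d-eeeeee4e4e4e",
    "my-resource-group",
    "my-dcr",
)
# shlex.join quotes the role name so the line can be pasted into a POSIX shell.
print(shlex.join(args))
```

Passing the list to `subprocess.run(args, check=True)` would execute it on a machine where the Azure CLI is installed and signed in.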

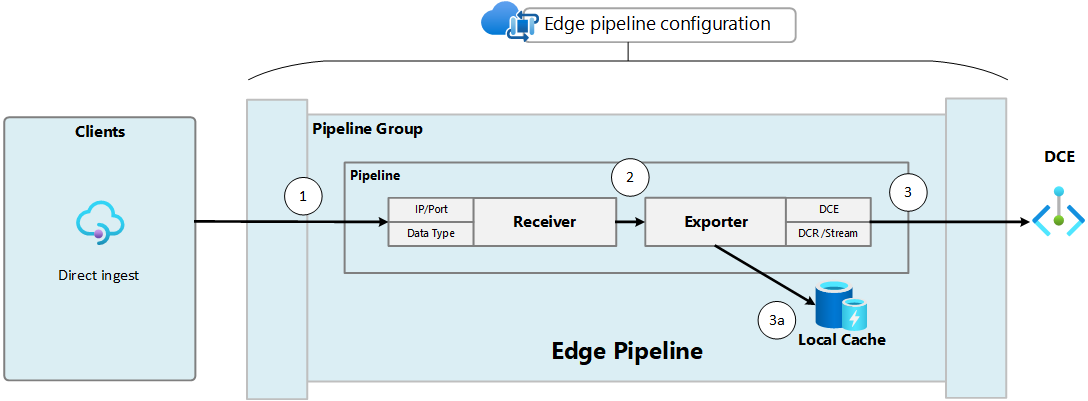

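Edge pipeline configuration

The edge pipeline configuration defines the details of the edge pipeline instance and deploys the data flows needed to receive telemetry and send it to the cloud.

Replace the properties in the following table before you deploy the template.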

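| Property | Description |
|:---|:---|
| **General** | |
| `name` | Name of the pipeline instance. Must be unique in the subscription. |
| `location` | Location of the pipeline instance. |
| `extendedLocation` | |
| **Receivers** | One entry for each receiver. Each entry specifies the type of data being received, the port to listen on, and a unique name that's used in the `pipelines` section of the configuration. |
| `type` | Type of data received. Current options are `OTLP` and `Syslog`. |
| `name` | Name for the receiver referenced in the `service` section. Must be unique for the pipeline instance. |
| `endpoint` | Address and port the receiver listens on. Use `0.0.0.0` for all addresses. |
| **Processors** | Reserved for future use. |
| **Exporters** | One entry for each destination. |
| `type` | The only type currently supported is `AzureMonitorWorkspaceLogs`. |
| `name` | Must be unique for the pipeline instance. The name is used in the `pipelines` section of the configuration. |
| `dataCollectionEndpointUrl` | URL of the DCE where the edge pipeline sends the data. To find it in the Azure portal, go to the DCE and copy the Logs Ingestion value. |
| `dataCollectionRule` | Immutable ID of the DCR that defines the data collection in the cloud pipeline. From the JSON view of your DCR in the Azure portal, copy the immutable ID value in the General section. |
| - `stream` | Name of the stream in the DCR that accepts the data. |
| - `maxStorageUsage` | Capacity of the cache. When 80% of this capacity is reached, the oldest data is evicted to make room for more data. |
| - `retentionPeriod` | Retention period in minutes. Data is evicted after this amount of time. |
| - `schema` | Schema of the data being sent to the cloud pipeline. It must match the schema defined in the stream in the DCR. The schema used in the example is valid for both Syslog and OTLP. |
| **Service** | One entry for each pipeline instance. Only one instance for each pipeline extension is recommended. |
| **Pipelines** | One entry for each data flow. Each entry matches a receiver with an exporter. |
| `name` | Unique name of the pipeline. |
| `receivers` | One or more receivers to listen for data to receive. |
| `processors` | Reserved for future use. |
| `exporters` | One or more exporters to send the data to the cloud pipeline. |
| `persistence` | Name of the persistent volume used for the cache. Remove this parameter if you don't want to enable the cache. |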

{

"$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"metadata": {

"description": "This template deploys an edge pipeline for azure monitor."

},

"resources": [

{

"type": "Microsoft.monitor/pipelineGroups",

"location": "eastus",

"apiVersion": "2023-10-01-preview",

"name": "my-pipeline-group-name",

"extendedLocation": {

"name": "/subscriptions/aaaa0a0a-bb1b-cc2c-dd3d-eeeeee4e4e4e/resourceGroups/my-resource-group/providers/Microsoft.ExtendedLocation/customLocations/my-custom-location",

"type": "CustomLocation"

},

"properties": {

"receivers": [

{

"type": "OTLP",

"name": "receiver-OTLP",

"otlp": {

"endpoint": "0.0.0.0:4317"

}

},

{

"type": "Syslog",

"name": "receiver-Syslog",

"syslog": {

"endpoint": "0.0.0.0:514"

}

}

],

"processors": [],

"exporters": [

{

"type": "AzureMonitorWorkspaceLogs",

"name": "exporter-log-analytics-workspace",

"azureMonitorWorkspaceLogs": {

"api": {

"dataCollectionEndpointUrl": "https://my-dce-4agr.eastus-1.ingest.monitor.azure.com",

"dataCollectionRule": "dcr-00000000000000000000000000000000",

"stream": "Custom-OTLP",

"schema": {

"recordMap": [

{

"from": "body",

"to": "Body"

},

{

"from": "severity_text",

"to": "SeverityText"

},

{

"from": "time_unix_nano",

"to": "TimeGenerated"

}

]

}

},

"cache": {

"maxStorageUsage": 10000,

"retentionPeriod": 60

}

}

}

],

"service": {

"pipelines": [

{

"name": "DefaultOTLPLogs",

"receivers": [

"receiver-OTLP"

],

"processors": [],

"exporters": [

"exporter-log-analytics-workspace"

],

"type": "logs"

},

{

"name": "DefaultSyslogs",

"receivers": [

"receiver-Syslog"

],

"processors": [],

"exporters": [

"exporter-log-analytics-workspace"

],

"type": "logs"

}

],

"persistence": {

"persistentVolumeName": "my-persistent-volume"

}

},

"networkingConfigurations": [

{

"externalNetworkingMode": "LoadBalancerOnly",

"routes": [

{

"receiver": "receiver-OTLP"

},

{

"receiver": "receiver-Syslog"

}

]

}

]

}

}

]

}
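In the template above, each pipeline under `service` refers to receivers and exporters by name, and each networking route refers to a receiver by name. A mistyped name breaks that link, so a check before deployment can help. The sketch below is a hypothetical helper (`check_pipeline_group`) that inspects only those name references in the `properties` object of the pipeline group resource.

```python
def check_pipeline_group(properties):
    """Return name references that do not resolve to a defined receiver or exporter."""
    receivers = {r["name"] for r in properties.get("receivers", [])}
    exporters = {e["name"] for e in properties.get("exporters", [])}
    problems = []
    for pipeline in properties.get("service", {}).get("pipelines", []):
        label = pipeline.get("name", "<unnamed>")
        for name in pipeline.get("receivers", []):
            if name not in receivers:
                problems.append(f"pipeline '{label}': unknown receiver '{name}'")
        for name in pipeline.get("exporters", []):
            if name not in exporters:
                problems.append(f"pipeline '{label}': unknown exporter '{name}'")
    for config in properties.get("networkingConfigurations", []):
        for route in config.get("routes", []):
            if route.get("receiver") not in receivers:
                problems.append(f"route: unknown receiver '{route.get('receiver')}'")
    return problems


# Illustrative fragment: the pipeline uses a receiver name that was never defined.
sample_properties = {
    "receivers": [{"type": "OTLP", "name": "receiver-OTLP-4317"}],
    "exporters": [{"type": "AzureMonitorWorkspaceLogs", "name": "exporter-01"}],
    "service": {
        "pipelines": [
            {
                "name": "DefaultOTLPLogs",
                "receivers": ["receiver-OTLP"],
                "exporters": ["exporter-01"],
            }
        ]
    },
    "networkingConfigurations": [{"routes": [{"receiver": "receiver-OTLP-4317"}]}],
}
pipeline_problems = check_pipeline_group(sample_properties)
print(pipeline_problems)
```

For a template file, load it with `json.load` and pass `template["resources"][0]["properties"]`, assuming the pipeline group is the first resource as in the template above.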

Install the template with the following command.

az deployment group create --resource-group <resource-group-name> --template-file <path-to-template>

## Example

az deployment group create --resource-group my-resource-group --template-file C:\MyPipelineConfig.json

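You can deploy all of the components required for the Azure Monitor edge pipeline with the single ARM template shown below. Edit the parameter file with the specific values for your environment. Each section of the template is described below, including the sections that you must modify before you use it.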

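| Component | Type | Description |
|:---|:---|:---|
| Log Analytics workspace | `Microsoft.OperationalInsights/workspaces` | Remove this section if you're using an existing Log Analytics workspace. The only parameter required is the workspace name. The immutable ID of the workspace, which other components need, is created automatically. |
| Data collection endpoint (DCE) | `Microsoft.Insights/dataCollectionEndpoints` | Remove this section if you're using an existing DCE. The only parameter required is the DCE name. The logs ingestion URL of the DCE, which other components need, is created automatically. |
| Edge pipeline extension | `Microsoft.KubernetesConfiguration/extensions` | The only parameter required is the pipeline extension name. |
| Custom location | `Microsoft.ExtendedLocation/customLocations` | Custom location for your Azure Arc-enabled Kubernetes cluster. |
| Edge pipeline instance | `Microsoft.monitor/pipelineGroups` | Edge pipeline instance that includes the configuration of listeners, exporters, and data flows. You must modify the properties of the pipeline instance before you deploy the template. |
| Data collection rule (DCR) | `Microsoft.Insights/dataCollectionRules` | The only parameter required is the DCR name, but you must modify the properties of the DCR before you deploy the template. |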

Template file

{

"$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"location": {

"type": "string"

},

"clusterId": {

"type": "string"

},

"clusterExtensionIds": {

"type": "array"

},

"customLocationName": {

"type": "string"

},

"cachePersistentVolume": {

"type": "string"

},

"cacheMaxStorageUsage": {

"type": "int"

},

"cacheRetentionPeriod": {

"type": "int"

},

"dceName": {

"type": "string"

},

"dcrName": {

"type": "string"

},

"logAnalyticsWorkspaceName": {

"type": "string"

},

"pipelineExtensionName": {

"type": "string"

},

"pipelineGroupName": {

"type": "string"

},

"tagsByResource": {

"type": "object",

"defaultValue": {}

}

},

"resources": [

{

"type": "Microsoft.OperationalInsights/workspaces",

"name": "[parameters('logAnalyticsWorkspaceName')]",

"location": "[parameters('location')]",

"apiVersion": "2017-03-15-preview",

"tags": "[ if(contains(parameters('tagsByResource'), 'Microsoft.OperationalInsights/workspaces'), parameters('tagsByResource')['Microsoft.OperationalInsights/workspaces'], json('{}')) ]",

"properties": {

"sku": {

"name": "pergb2018"

}

}

},

{

"type": "Microsoft.Insights/dataCollectionEndpoints",

"name": "[parameters('dceName')]",

"location": "[parameters('location')]",

"apiVersion": "2021-04-01",

"tags": "[ if(contains(parameters('tagsByResource'), 'Microsoft.Insights/dataCollectionEndpoints'), parameters('tagsByResource')['Microsoft.Insights/dataCollectionEndpoints'], json('{}')) ]",

"properties": {

"configurationAccess": {},

"logsIngestion": {},

"networkAcls": {

"publicNetworkAccess": "Enabled"

}

}

},

{

"type": "Microsoft.Insights/dataCollectionRules",

"name": "[parameters('dcrName')]",

"location": "[parameters('location')]",

"apiVersion": "2021-09-01-preview",

"dependsOn": [

"[resourceId('Microsoft.OperationalInsights/workspaces', parameters('logAnalyticsWorkspaceName'))]",

"[resourceId('Microsoft.Insights/dataCollectionEndpoints', parameters('dceName'))]"

],

"tags": "[ if(contains(parameters('tagsByResource'), 'Microsoft.Insights/dataCollectionRules'), parameters('tagsByResource')['Microsoft.Insights/dataCollectionRules'], json('{}')) ]",

"properties": {

"dataCollectionEndpointId": "[resourceId('Microsoft.Insights/dataCollectionEndpoints', parameters('dceName'))]",

"streamDeclarations": {

"Custom-OTLP": {

"columns": [

{

"name": "Body",

"type": "string"

},

{

"name": "TimeGenerated",

"type": "datetime"

},

{

"name": "SeverityText",

"type": "string"

}

]

},

"Custom-Syslog": {

"columns": [

{

"name": "Body",

"type": "string"

},

{

"name": "TimeGenerated",

"type": "datetime"

},

{

"name": "SeverityText",

"type": "string"

}

]

}

},

"dataSources": {},

"destinations": {

"logAnalytics": [

{

"name": "DefaultWorkspace-westus2",

"workspaceResourceId": "[resourceId('Microsoft.OperationalInsights/workspaces', parameters('logAnalyticsWorkspaceName'))]",

"workspaceId": "[reference(resourceId('Microsoft.OperationalInsights/workspaces', parameters('logAnalyticsWorkspaceName'))).customerId]"

}

]

},

"dataFlows": [

{

"streams": [

"Custom-OTLP"

],

"destinations": [

"DefaultWorkspace-westus2"

],

"transformKql": "source",

"outputStream": "Custom-OTelLogs_CL"

},

{

"streams": [

"Custom-Syslog"

],

"destinations": [

"DefaultWorkspace-westus2"

],

"transformKql": "source",

"outputStream": "Custom-Syslog_CL"

}

]

}

},

{

"type": "Microsoft.KubernetesConfiguration/extensions",

"apiVersion": "2022-11-01",

"name": "[parameters('pipelineExtensionName')]",

"scope": "[parameters('clusterId')]",

"tags": "[ if(contains(parameters('tagsByResource'), 'Microsoft.KubernetesConfiguration/extensions'), parameters('tagsByResource')['Microsoft.KubernetesConfiguration/extensions'], json('{}')) ]",

"identity": {

"type": "SystemAssigned"

},

"properties": {

"aksAssignedIdentity": {

"type": "SystemAssigned"

},

"autoUpgradeMinorVersion": false,

"extensionType": "microsoft.monitor.pipelinecontroller",

"releaseTrain": "preview",

"scope": {

"cluster": {

"releaseNamespace": "my-strato-ns"

}

},

"version": "0.37.3-privatepreview"

}

},

{

"type": "Microsoft.ExtendedLocation/customLocations",

"apiVersion": "2021-08-15",

"name": "[parameters('customLocationName')]",

"location": "[parameters('location')]",

"tags": "[ if(contains(parameters('tagsByResource'), 'Microsoft.ExtendedLocation/customLocations'), parameters('tagsByResource')['Microsoft.ExtendedLocation/customLocations'], json('{}')) ]",

"dependsOn": [

"[parameters('pipelineExtensionName')]"

],

"properties": {

"hostResourceId": "[parameters('clusterId')]",

"namespace": "[toLower(parameters('customLocationName'))]",

"clusterExtensionIds": "[parameters('clusterExtensionIds')]",

"hostType": "Kubernetes"

}

},

{

"type": "Microsoft.monitor/pipelineGroups",

"location": "[parameters('location')]",

"apiVersion": "2023-10-01-preview",

"name": "[parameters('pipelineGroupName')]",

"tags": "[ if(contains(parameters('tagsByResource'), 'Microsoft.monitor/pipelineGroups'), parameters('tagsByResource')['Microsoft.monitor/pipelineGroups'], json('{}')) ]",

"dependsOn": [

"[parameters('customLocationName')]",

"[resourceId('Microsoft.Insights/dataCollectionRules', parameters('dcrName'))]"

],

"extendedLocation": {

"name": "[resourceId('Microsoft.ExtendedLocation/customLocations', parameters('customLocationName'))]",

"type": "CustomLocation"

},

"properties": {

"receivers": [

{

"type": "OTLP",

"name": "receiver-OTLP-4317",

"otlp": {

"endpoint": "0.0.0.0:4317"

}

},

{

"type": "Syslog",

"name": "receiver-Syslog-514",

"syslog": {

"endpoint": "0.0.0.0:514"

}

}

],

"processors": [],

"exporters": [

{

"type": "AzureMonitorWorkspaceLogs",

"name": "exporter-lu7mbr90",

"azureMonitorWorkspaceLogs": {

"api": {

"dataCollectionEndpointUrl": "[reference(resourceId('Microsoft.Insights/dataCollectionEndpoints', parameters('dceName'))).logsIngestion.endpoint]",

"stream": "Custom-OTLP",

"dataCollectionRule": "[reference(resourceId('Microsoft.Insights/dataCollectionRules', parameters('dcrName'))).immutableId]",

"cache": {

"maxStorageUsage": "[parameters('cacheMaxStorageUsage')]",

"retentionPeriod": "[parameters('cacheRetentionPeriod')]"

},

"schema": {

"recordMap": [

{

"from": "body",

"to": "Body"

},

{

"from": "severity_text",

"to": "SeverityText"

},

{

"from": "time_unix_nano",

"to": "TimeGenerated"

}

]

}

}

}

}

],

"service": {

"pipelines": [

{

"name": "DefaultOTLPLogs",

"receivers": [

"receiver-OTLP-4317"

],

"processors": [],

"exporters": [

"exporter-lu7mbr90"

]

}

],

"persistence": {

"persistentVolume": "[parameters('cachePersistentVolume')]"

}

}

}

}

],

"outputs": {}

}

Sample parameter file

{

"$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"location": {

"value": "eastus"

},

"clusterId": {

"value": "/subscriptions/aaaa0a0a-bb1b-cc2c-dd3d-eeeeee4e4e4e/resourceGroups/my-resource-group/providers/Microsoft.Kubernetes/connectedClusters/my-arc-cluster"

},

"clusterExtensionIds": {

"value": ["/subscriptions/aaaa0a0a-bb1b-cc2c-dd3d-eeeeee4e4e4e/resourceGroups/my-resource-group/providers/Microsoft.Kubernetes/connectedClusters/my-arc-cluster/providers/Microsoft.KubernetesConfiguration/extensions/my-pipeline-extension"]

},

"customLocationName": {

"value": "my-custom-location"

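},

"cachePersistentVolume": {

"value": "my-persistent-volume"

},

"cacheMaxStorageUsage": {

"value": 10000

},

"cacheRetentionPeriod": {

"value": 60

},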

"dceName": {

"value": "my-dce"

},

"dcrName": {

"value": "my-dcr"

},

"logAnalyticsWorkspaceName": {

"value": "my-workspace"

},

"pipelineExtensionName": {

"value": "my-pipeline-extension"

},

"pipelineGroupName": {

"value": "my-pipeline-group"

},

"tagsByResource": {

"value": {}

}

}

}
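An ARM deployment fails when a template parameter has no `defaultValue` and the parameter file doesn't supply a value for it. The sketch below compares the two files before deployment. The helper name `missing_parameters` is hypothetical, and the inline dictionaries are trimmed stand-ins for the template and parameter file.

```python
def missing_parameters(template, parameter_file):
    """List template parameters that have no default and no supplied value."""
    supplied = set(parameter_file.get("parameters", {}))
    return sorted(
        name
        for name, definition in template.get("parameters", {}).items()
        if "defaultValue" not in definition and name not in supplied
    )


# Trimmed stand-ins for the template and parameter file.
sample_template = {
    "parameters": {
        "location": {"type": "string"},
        "cacheRetentionPeriod": {"type": "int"},
        "tagsByResource": {"type": "object", "defaultValue": {}},
    }
}
sample_parameter_file = {"parameters": {"location": {"value": "eastus"}}}

missing = missing_parameters(sample_template, sample_parameter_file)
print(missing)
```

With real files, load both with `json.load` and pass the resulting dictionaries. An empty list means every required parameter has a value.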

![Screenshot of the Create Azure Monitor pipeline screen.](media/edge-pipeline/create-pipeline.png)

![Screenshot of the Create and add dataflow screen.](media/edge-pipeline/create-dataflow.png)