Table variables and row estimations – Part 1

Hello all,

The gap between estimated rows and actual rows in an execution plan has a big impact on query performance, and the sources of that skew can be quite diverse: outdated statistics, incorrect sampling, or the inability of the query processor to know the value of certain variables at compile time, just to name a few.

Skewed statistics are a very common reason I find for poor query plans, and that usually leads me to discussions about query recompilation thresholds vs. table cardinality, and the need to act on statistics proactively (even more so with filtered stats), notwithstanding the rule of thumb to keep auto-create and auto-update statistics on. For proactive stats updates and more, you can leverage AdaptiveIndexDefrag, which can be part of our recommendations for maintenance plans.

Cardinality changes are very important for the topic of recompilation. Since SQL Server 2005, these modifications are tracked by the Column Modification Counter (colmodctr), whose values, unlike the rowmodctr values exposed by the sys.sysindexes compatibility view, are not visible to the user.

A statistics-related recompilation is triggered once the number of modifications reaches the following thresholds:

- For permanent tables, if the table cardinality was:

  - 500 or less at the time statistics were evaluated, recompile after every 500 modifications.

  - Above 500 at the time statistics were evaluated, recompile after every 500 + 20% of the table cardinality modifications.

- For temporary tables, if the table cardinality was:

  - 6 or less at the time statistics were evaluated, recompile after every 6 modifications.

  - Between 6 and 500 at the time statistics were evaluated, recompile after every 500 modifications.

  - Above 500 at the time statistics were evaluated, recompile after every 500 + 20% of the table cardinality modifications.
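The thresholds above can be sketched as a pair of CASE expressions. This is only an illustration of the arithmetic, not something SQL Server exposes: the variable @n (the table cardinality when statistics were last evaluated) and the column aliases are assumptions made for this example.

```sql
-- Sketch only: @n is a hypothetical input, the cardinality at the time
-- statistics were last evaluated.
DECLARE @n bigint = 290;

SELECT
    @n AS Cardinality,
    -- Permanent tables: 500, or 500 + 20% of the cardinality above 500 rows.
    CASE WHEN @n <= 500 THEN 500
         ELSE 500 + 0.20 * @n
    END AS PermanentTableThreshold,
    -- Temporary tables: 6 for very small tables, then the same rules.
    CASE WHEN @n <= 6   THEN 6
         WHEN @n <= 500 THEN 500
         ELSE 500 + 0.20 * @n
    END AS TemporaryTableThreshold;
```

Changing @n to a value such as 10000 shows how the threshold grows with the table once the cardinality exceeds 500.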

What about table variables? A recompilation threshold does not exist for these because there are no statistics to track.

So how does SQL Server handle row estimation with table variables? I will demonstrate that in the following examples using the AdventureWorks2012 database. A huge THANK YOU to my colleague Nacho Portillo (Blog) for his assistance in gathering the internals data for this post.

In the first example I will use the HumanResources.Employee table, and load it into a table variable:

SET NOCOUNT ON;

DECLARE @tblEmployee TABLE (
    [NationalIDNumber] [nvarchar](15) NOT NULL,
    [LoginID] [nvarchar](256) NOT NULL,
    [OrganizationNode] [hierarchyid] NULL,
    [JobTitle] [nvarchar](50) NOT NULL,
    [BirthDate] [date] NOT NULL,
    [MaritalStatus] [nchar](1) NOT NULL,
    [Gender] [nchar](1) NOT NULL,
    [HireDate] [date] NOT NULL,
    [SalariedFlag] [bit] NOT NULL,
    [VacationHours] [smallint] NOT NULL,
    [SickLeaveHours] [smallint] NOT NULL);

INSERT INTO @tblEmployee
SELECT [NationalIDNumber]
    ,[LoginID]
    ,[OrganizationNode]
    ,[JobTitle]
    ,[BirthDate]
    ,[MaritalStatus]
    ,[Gender]
    ,[HireDate]
    ,[SalariedFlag]
    ,[VacationHours]
    ,[SickLeaveHours]
FROM [AdventureWorks2012].[HumanResources].[Employee];

And then, in the same batch (a table variable goes out of scope when its batch ends), select from the table variable:

SET STATISTICS PROFILE ON;
SELECT * FROM @tblEmployee;
SET STATISTICS PROFILE OFF;
GO

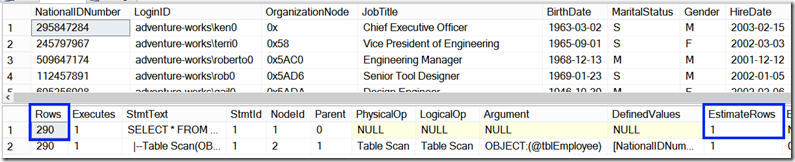

As I said before, SQL Server does not hold statistics for table variables (so no histograms). Absent a recompile, it assumes an Estimated Rowcount of 1, while the Actual Rowcount will be as many rows as you put in your table variable.

Note: When comparing the estimated vs. actual number of rows, it is important to remember that the actual counts need to be divided by the actual number of executions to get a value that is comparable to the estimated number of rows returned. The estimated rows information is the per-invocation estimate.
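As a small worked example of that normalization, the arithmetic can be sketched as follows. The numbers are hypothetical (they do not come from the plans in this post), and the variable names are made up for this illustration.

```sql
-- Sketch only: hypothetical values for an operator that ran 10 times
-- and returned 870 rows in total across all executions.
DECLARE @ActualRows bigint = 870,
        @ActualExecutions bigint = 10;

-- Divide the total by the number of executions to get a figure that is
-- comparable to the per-invocation estimate (870 / 10 = 87).
-- NULLIF avoids a divide-by-zero error for operators that never executed.
SELECT @ActualRows * 1.0 / NULLIF(@ActualExecutions, 0) AS ActualRowsPerExecution;
```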

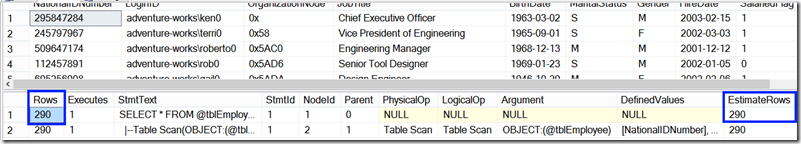

To get an accurate estimate, we can force a statement-level recompile by adding OPTION(RECOMPILE) to the query that uses the table variable:

SET STATISTICS PROFILE ON;
SELECT * FROM @tblEmployee OPTION(RECOMPILE);
SET STATISTICS PROFILE OFF;
GO

Now the Estimated Rowcount and Actual Rowcount match at 290 rows, at the cost of a recompile. This is a fairly well-known behavior. What if we add search arguments?

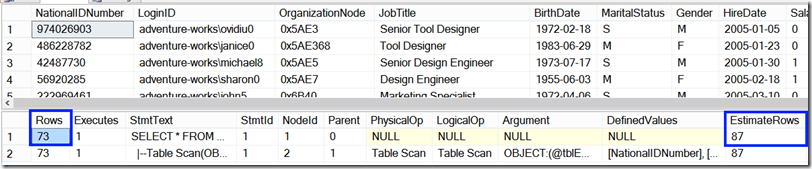

SET STATISTICS PROFILE ON;
SELECT * FROM @tblEmployee WHERE [HireDate] > '2003-06-04'
OPTION(RECOMPILE);
SET STATISTICS PROFILE OFF;
GO

Now the Estimated Rowcount is 87 while the Actual Rowcount is 73.

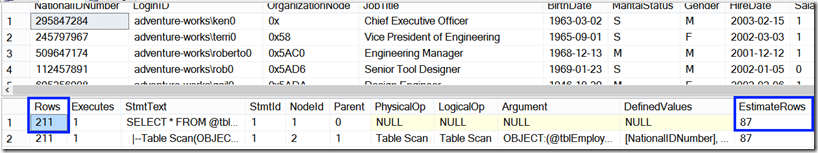

So what if I change the search argument to one that will yield a larger result set?

SET STATISTICS PROFILE ON;
SELECT * FROM @tblEmployee WHERE [BirthDate] < '1980-01-01'
OPTION(RECOMPILE);
SET STATISTICS PROFILE OFF;
GO

The Estimated Rowcount is still 87 while the Actual Rowcount is 211.

Why was the Estimated Rowcount the same 87 rows? First, let’s look at what 87 rows means. The table variable has a cardinality of 290, so 87 rows is exactly 30 percent of that (87 * 100 / 290 = 30).

That is by design: when a relational operator such as greater than (>), less than (<), greater than or equal to (>=) or less than or equal to (<=) is used and there are no statistics to consult, the cardinality of the filter is equal to the cardinality of its left child multiplied by the probability of the comparison being true, which is assumed to be a fixed 30 percent (290 * 30 percent = 87).
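If you do not have AdventureWorks2012 at hand, the behavior described above can be reproduced with a sketch along these lines. The table variable @demo, its columns, and the use of sys.all_objects as a convenient row source are assumptions made for this illustration. Based on the explanation above, I would expect both queries to show the same estimate regardless of how many rows actually qualify, but treat that as something to verify on your own build.

```sql
-- Sketch only: @demo and its columns are hypothetical; sys.all_objects is
-- used here just as a row source with more than 290 rows.
SET NOCOUNT ON;

DECLARE @demo TABLE (
    id      int  NOT NULL,
    some_dt date NOT NULL
);

-- Load 290 rows to match the cardinality used in the post.
INSERT INTO @demo (id, some_dt)
SELECT TOP (290)
       n,
       DATEADD(DAY, n, CAST('2000-01-01' AS date))
FROM (SELECT CAST(ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) AS int) AS n
      FROM sys.all_objects) AS numbers
ORDER BY n;

-- The arithmetic behind the estimate: 290 * 0.30 = 87.
SELECT COUNT(*) * 0.30 AS ExpectedInequalityEstimate
FROM @demo;

-- Two inequality predicates with very different selectivity;
-- compare the EstimateRows column against the actual Rows column.
SET STATISTICS PROFILE ON;
SELECT * FROM @demo WHERE some_dt > '2000-03-01' OPTION (RECOMPILE);
SELECT * FROM @demo WHERE id < 250 OPTION (RECOMPILE);
SET STATISTICS PROFILE OFF;
GO
```

Everything runs in a single batch because the table variable is no longer available after GO.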

So what about other relational operators? I’ll leave that for the next post. Check out the second part of this topic.

See you next time!

Disclaimer: I hope that the information on these pages is valuable to you. Your use of the information contained in these pages, however, is at your sole risk. All information on these pages is provided "as-is", without any warranty, whether express or implied, of its accuracy, completeness, fitness for a particular purpose, title or non-infringement, and none of the third-party products or information mentioned in the work are authored, recommended, supported or guaranteed by Ezequiel. Further, Ezequiel shall not be liable for any damages you may sustain by using this information, whether direct, indirect, special, incidental or consequential, even if it has been advised of the possibility of such damages.