Troubleshoot Manufacturing data solutions (preview)

Important

Some or all of this functionality is available as part of a preview release. The content and the functionality are subject to change.

This troubleshooting guide helps you identify and resolve common issues that can arise during and after the deployment of the Manufacturing data solutions service.

Debug failed deployment

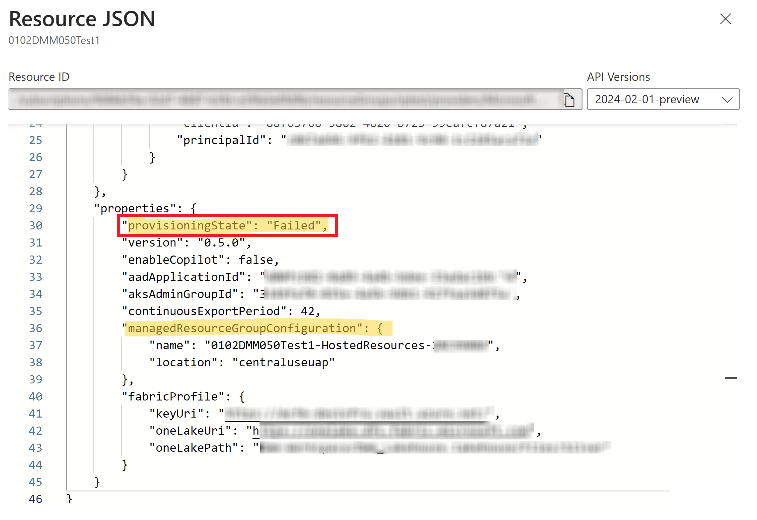

To check whether your deployment succeeded, look at the status of the Manufacturing data solutions resource.

If the status of your Manufacturing data solutions resource is Failed, go to the Managed Resource group for that resource. Its name follows the naming convention MDS-{your-deployment-name}-MRG-{UniqueID}. You can also look up the Managed Resource group in the resource's JSON details.
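If your subscription contains many resource groups, you can filter them by this naming convention. The following Python snippet is a minimal sketch, not part of the product: `find_managed_resource_groups` is a hypothetical helper, and it assumes that `{UniqueID}` contains only letters and digits and that names compare case-insensitively (Azure resource group names are case-insensitive).

```python
import re


def find_managed_resource_groups(deployment_name: str, group_names: list[str]) -> list[str]:
    """Return names matching MDS-{deployment-name}-MRG-{UniqueID}.

    Assumption: {UniqueID} consists of letters and digits only.
    """
    pattern = re.compile(
        rf"MDS-{re.escape(deployment_name)}-MRG-[A-Za-z0-9]+",
        re.IGNORECASE,  # resource group names are case-insensitive in Azure
    )
    return [name for name in group_names if pattern.fullmatch(name)]


groups = ["MDS-contoso-MRG-a1b2c3", "rg-networking", "MDS-contoso-dev-MRG-x9y8"]
print(find_managed_resource_groups("contoso", groups))  # ['MDS-contoso-MRG-a1b2c3']
```

You can get the list of names, for example, from `az group list --query "[].name" -o tsv`. Using `re.escape` keeps characters such as `.` in the deployment name from acting as regex wildcards.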

Note

The minimum access required is the Reader role for the specified Managed Resource group.

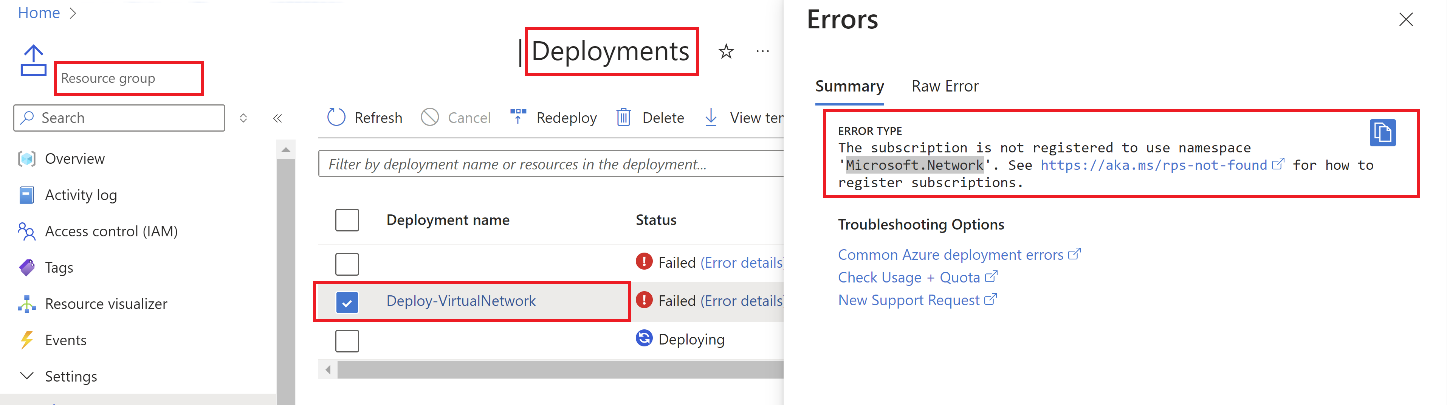

Go to the specified resource group, select Deployments from the left-hand menu, and review any deployment errors that occurred.

If you receive a BadRequest error code, the deployment values you sent don't match the values that Resource Manager expects. Check the inner message for more details.

Deployments can fail for various reasons, as listed in the following table:

| Error Code | Meaning | Mitigation |
|---|---|---|
| Failed to assign role to UMI: `<your-UMI>`. Check if UMI has the required roles to assign roles. | Missing permissions for the User Managed Identity used | If the User Assigned Managed Identity doesn't have the Owner role assigned at the subscription scope, the role assignments don't work and the deployment fails. The User Managed Identity's service principal also needs to have the Owner role assigned to itself. |
| DeploymentQuotaExceeded | Insufficient quotas and limits for Azure services | Azure resources have quotas and limits for services such as Azure Data Explorer and Azure OpenAI. Make sure you have enough quota for all the resources before starting the Manufacturing data solutions deployment. |
| NoRegisteredProviderFound / SubscriptionNotRegistered / MissingSubscriptionRegistration | Required resource providers aren't registered | One of the deployment prerequisites is registering the resource providers listed in the deployment guide. Ensure that all the resource providers are registered before starting the deployment. |
| Only one User Assigned Managed Identity is supported | You can't add multiple User Managed Identities in Manufacturing data solutions | Use a single User Assigned Managed Identity for the deployment. |

For more information, see common Azure Resource Manager (ARM) deployment errors.

Note

For any other intermittent issues, try redeploying the solution once. If the problem persists, contact the Microsoft Support Team.

Debug custom entity CRUD

To check the status of the create entity API, perform the following steps:

1. Call the POST API. For example, the following request registers a `Device` entity:

```bash
curl -X POST "https://{serviceUrl}/mds/service/entities" \
  -H "Authorization: Bearer {BEARER_TOKEN}" \
  -H "Content-Type: application/json" \
  -d '{
  "name": "Device",
  "columns": [
    { "name": "id", "description": "A unique id", "type": "String", "mandatory": true, "semanticRelevantFlag": true, "isProperNoun": false, "groupBy": true, "primaryKey": true },
    { "name": "description", "description": "Additional information about the equipment", "type": "String", "mandatory": false, "semanticRelevantFlag": true, "isProperNoun": false, "groupBy": false, "primaryKey": false },
    { "name": "hierarchyScope", "description": "Identifies where the exchanged information", "type": "String", "mandatory": false, "semanticRelevantFlag": true, "isProperNoun": false, "groupBy": false, "primaryKey": false },
    { "name": "equipmentLevel", "description": "An identification of the level in the role-based equipment hierarchy.", "type": "Enum", "mandatory": false, "semanticRelevantFlag": true, "isProperNoun": false, "groupBy": false, "primaryKey": false,
      "enumValues": [ "enterprise", "site", "area", "workCenter", "workUnit", "processCell", "unit", "productionLine", "productionUnit", "workCell", "storageZone", "Storage Unit" ] },
    { "name": "operationalLocation", "description": "Identifies the operational location of the equipment.", "type": "String", "mandatory": false, "semanticRelevantFlag": true, "isProperNoun": false, "groupBy": false, "primaryKey": false },
    { "name": "operationalLocationType", "description": "Indicates whether the operational", "type": "Enum", "mandatory": false, "semanticRelevantFlag": true, "isProperNoun": false, "groupBy": false, "primaryKey": false,
      "enumValues": [ "Description", "Operational Location" ] },
    { "name": "assetsystemrefid", "description": "Asset/ERP System of Record Identifier for Equipment (AssetID)", "type": "Alphanumeric", "mandatory": false, "semanticRelevantFlag": false, "isProperNoun": false, "groupBy": false, "primaryKey": false },
    { "name": "messystemrefid", "description": "Manufacturing system Ref ID", "type": "Alphanumeric", "mandatory": false, "semanticRelevantFlag": false, "isProperNoun": false, "groupBy": false, "primaryKey": false },
    { "name": "temperature", "description": "temperature of device", "type": "Double", "mandatory": false, "semanticRelevantFlag": true, "isProperNoun": false, "groupBy": false, "primaryKey": false },
    { "name": "pressure", "description": "pressure of device", "type": "Double", "mandatory": false, "semanticRelevantFlag": true, "isProperNoun": false, "groupBy": false, "primaryKey": false }
  ],
  "tags": { "ingestionFormat": "Batch", "ingestionRate": "Hourly", "storage": "Hot" },
  "dtdlSchemaUrl": "https%3A%2F%2Fl2storage.blob.core.windows.net%2Fcustomentity%2FDevice.json",
  "semanticRelevantFlag": true
}'
```

2. Ensure you receive a 202 response. The response includes an `Operation-Location` header containing the URL for checking the status of the request.

3. Decode the URL provided in the `Operation-Location` header from the previous call, and send a GET request to it to check the status:

```bash
curl -X GET "https://{serviceUrl}/mds/service/entities/status/RegisterEntity/efd66e795001492fb67def8a94336384" \
  -H "Authorization: Bearer {BEARER_TOKEN}"
```

4. Verify that the `status` in the response payload indicates success:

```json
{
  "createdDateTime": "2024-05-27T03:22:50.1367676Z",
  "resourceLocationUrl": "https://{serviceUrl}/mds/service/entities/Device",
  "status": "Succeeded"
}
```

API path: `https://{serviceUrl}/mds/service/entities`

To debug an error, check the response generated by the API. For more instructions, see Entity registration Updation.
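The decode-then-poll steps above can be scripted. The following Python function is a minimal sketch under stated assumptions: `wait_for_operation` is a hypothetical helper, `fetch_json` is a callable you supply (for example, a wrapper around your HTTP client that sends the bearer token and returns the parsed JSON body), the terminal statuses are the three this article mentions (Succeeded, Failed, Faulted), and the interval and timeout defaults are arbitrary.

```python
import time
from typing import Callable
from urllib.parse import unquote

# Statuses this article describes for the job status API.
TERMINAL_STATUSES = {"Succeeded", "Failed", "Faulted"}


def wait_for_operation(
    operation_location: str,
    fetch_json: Callable[[str], dict],
    interval_s: float = 5.0,
    timeout_s: float = 300.0,
) -> dict:
    """Poll the Operation-Location URL until the job reaches a terminal status."""
    # The header value may be percent-encoded; decode it before requesting it.
    status_url = unquote(operation_location)
    deadline = time.monotonic() + timeout_s
    while True:
        body = fetch_json(status_url)
        if body.get("status") in TERMINAL_STATUSES:
            return body
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Operation still {body.get('status')!r} after {timeout_s}s")
        time.sleep(interval_s)
```

Passing the HTTP call in as `fetch_json` keeps the polling logic independent of any particular HTTP library and lets you test it with a stub that returns canned status payloads.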

Troubleshoot via logs

Note

The instructions are written for a Technical/IT professional with familiarity with Azure services. If you are not familiar with these technologies, you may need to work with your IT professional to complete the steps.

Important

Make sure that you at a minimum have Log Analytics Reader role to the deployed Log Analytics Workspace instance

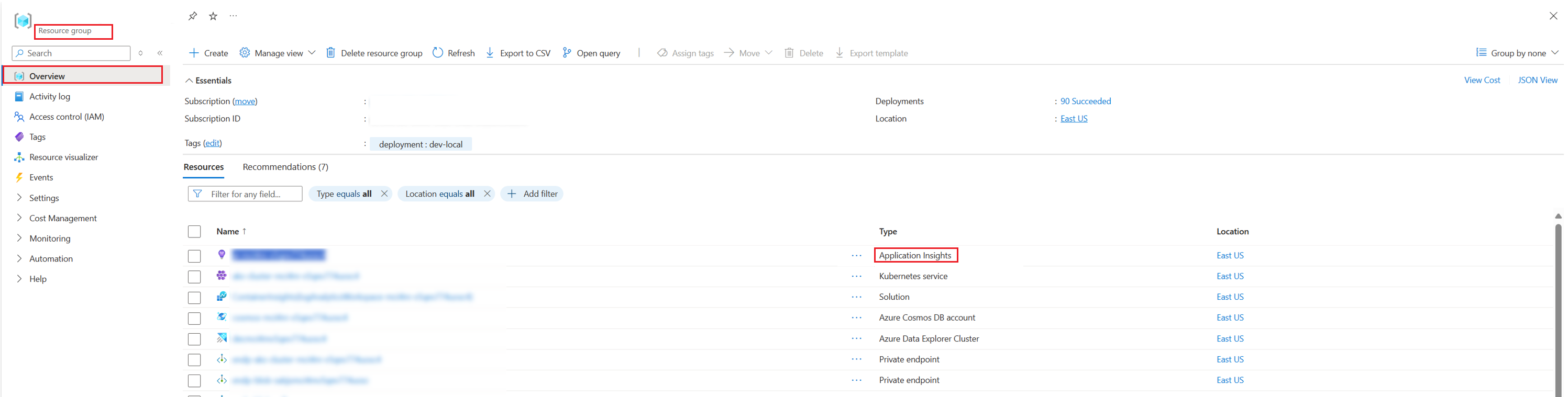

Go to Application Insights Resource in the Managed Resource Group.

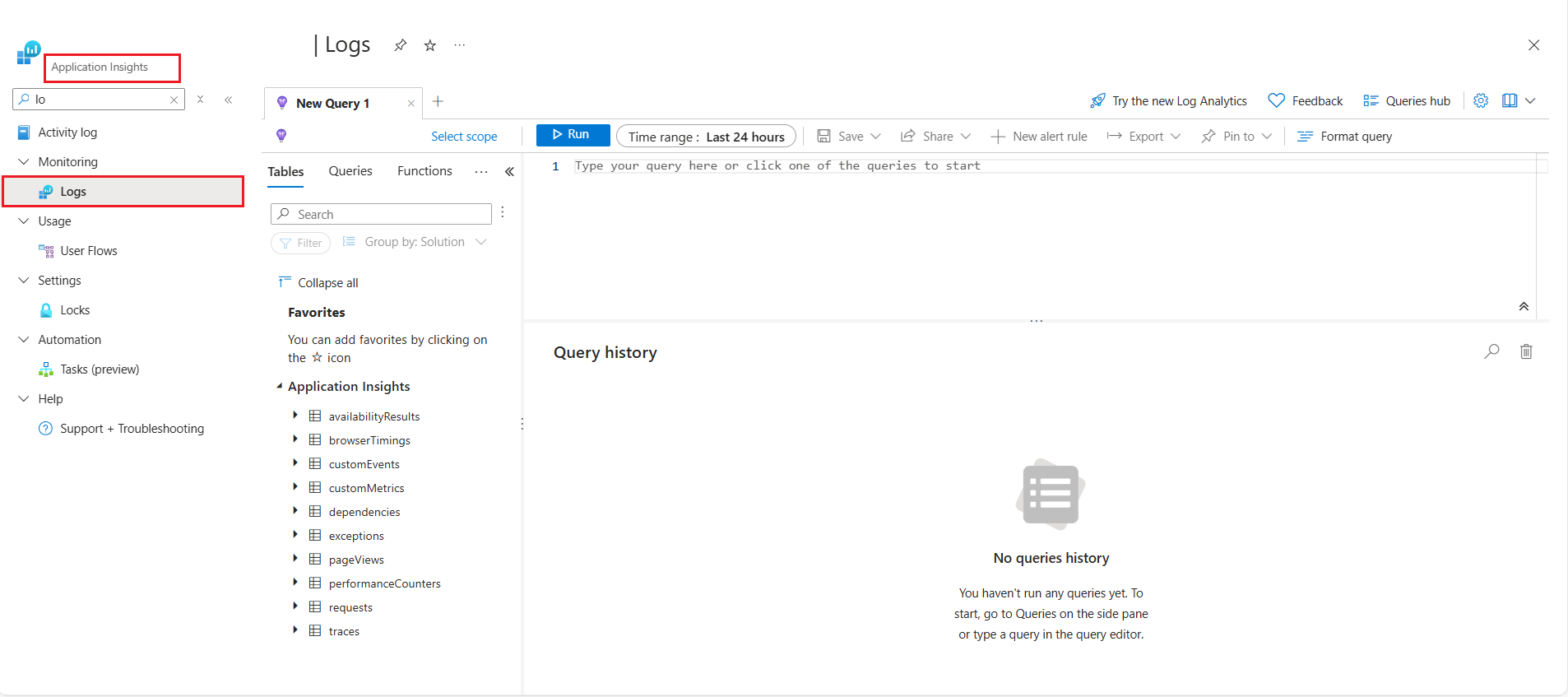

Select Logs under Monitoring navigation section on left hand side.

Run the KQL in Application Insights provided in Table:

For cases where the CRUD API returned a Failed status

Check the action API response: ensure you receive a 202 response from the API, and then check the status by using the `Operation-Location` header. Perform a GET request on the URL provided in the header. If the status is Failed (API path: `https://{serviceUrl}/mds/service/entities`), run the following checks in Application Insights.

To check whether it's a Redis issue, run the following query:

```kusto
traces
| where message contains "Error while creating entry in Redis"
| sort by timestamp
```

Expected result: a single entry if an entity was registered within the specified time. If it's a Redis issue, check the actual exception and verify that the Redis resource is up and running.

To check whether it's a Cosmos DB issue, run the following query:

```kusto
traces
| where message contains "Error occurred while updating the status in Cosmos"
| sort by timestamp
```

Expected result: a single entry if an entity was registered within the specified time. If it's a Cosmos DB issue, check the inner exception and verify that Cosmos DB is up and running.

For cases where the CRUD API returned a Faulted status

Check the action API response: ensure you receive a 202 response from the API, and then check the status by using the `Operation-Location` header. Perform a GET request on the URL provided in the header. If the status is Faulted (API path: `https://{serviceUrl}/mds/service/entities`), run the following query in Application Insights. This status occurs when another job is already processing this entity.

```kusto
traces
| where message contains "Another job is already processing this entity"
| sort by timestamp
```
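To keep triage consistent, you can map each terminal job status to the Application Insights checks described in this section. This is a minimal sketch: the query strings repeat the checks above, and `checks_for_status` is a hypothetical helper.

```python
# Application Insights checks from this section, keyed by the job status
# returned by the Operation-Location URL.
STATUS_CHECKS = {
    "Failed": [
        'traces | where message contains "Error while creating entry in Redis" | sort by timestamp',
        'traces | where message contains "Error occurred while updating the status in Cosmos" | sort by timestamp',
    ],
    "Faulted": [
        'traces | where message contains "Another job is already processing this entity" | sort by timestamp',
    ],
}


def checks_for_status(status: str) -> list[str]:
    """Return the KQL queries worth running for a given job status."""
    # Succeeded, or any status not listed here, needs no log investigation.
    return STATUS_CHECKS.get(status, [])


for query in checks_for_status("Failed"):
    print(query)
```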

Commonly encountered errors

| Scenario | Condition | Response Code | Response Body Detail |
|---|---|---|---|
| Entity already exists | Attempting to register an entity that already exists | 400 | `{"Detail": "An entity already exists with the name Device. Please retry with a different name"}` |
| Invalid DTDL schema location | Providing a location where the DTDL schema isn't present | 400 | `{"Detail": "Failed to get the DTDL schema from URL. Error: Response status code does not indicate success: 404 (The specified blob does not exist.)."}` |
| Invalid DTDL schema URL | Providing an invalid `dtdlSchemaUrl` | 400 | `{"Detail": "Failed to get the DTDL schema from URL. Error: Response status code does not indicate success: 404 (The specified resource does not exist.)."}` |
| Response of GET job status API is Faulted | Another job is already processing this entity | 202 | `{"createdDateTime": "2024-05-27T03:22:50.1367676Z", "resourceLocationUrl": "https://{serviceUrl}/mds/service/entities/Device", "status": "Faulted"}` |
| Response of GET job status API is Failed | Case 1: Redis error: Error while creating entry in Redis. Case 2: Cosmos error: Error occurred while updating cosmos | 202 | `{"createdDateTime": "2024-05-27T03:22:50.1367676Z", "resourceLocationUrl": "https://{serviceUrl}/mds/service/entities/Device", "status": "Failed"}` |
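In the entity registration example, `dtdlSchemaUrl` is percent-encoded. If you hit one of the DTDL errors above, first confirm that the decoded URL points to an existing blob. The following sketch produces the encoded form from a plain blob URL with Python's standard library; `encode_dtdl_schema_url` is a hypothetical helper, and it only reproduces the format shown in the example (this article doesn't say whether unencoded URLs are also accepted).

```python
from urllib.parse import quote, unquote


def encode_dtdl_schema_url(blob_url: str) -> str:
    """Percent-encode a DTDL schema URL in the format used by the registration example."""
    # safe="" makes quote() encode ':' and '/' too (as %3A and %2F).
    return quote(blob_url, safe="")


plain = "https://l2storage.blob.core.windows.net/customentity/Device.json"
encoded = encode_dtdl_schema_url(plain)
print(encoded)  # https%3A%2F%2Fl2storage.blob.core.windows.net%2Fcustomentity%2FDevice.json
print(unquote(encoded) == plain)  # True: decoding returns the blob URL to check
```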

Troubleshoot data mapping

Initial checks

For assistance in verifying that the mapping is done correctly, contact the Microsoft Support Team at mdssupport@microsoft.com. Additionally, ensure you understand the mapping by reviewing the examples provided on the mapping page.

Troubleshoot data ingestion

Initial checks

Use the following API to check that the actual twin and relationship counts match the expected counts:

```bash
curl -X GET "https://{serviceUrl}/mds/service/query/ingestionStatus?operator=BETWEEN&endDate=2024-05-20&startDate=2024-05-01" \
  -H "Authorization: Bearer {BEARER_TOKEN}"
```

Check the following link for more detailed information:
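If you query ingestion status for different date ranges, building the URL in code avoids typos in the query string. This is a minimal sketch: `ingestion_status_url` is a hypothetical helper, `service_url` is assumed to be the host name without a scheme (as in the curl examples), and dates are assumed to use the `YYYY-MM-DD` format shown above.

```python
from datetime import date
from urllib.parse import urlencode


def ingestion_status_url(service_url: str, start_date: str, end_date: str) -> str:
    """Build the ingestionStatus query URL for a BETWEEN date range."""
    # Validate the YYYY-MM-DD format and the order of the range before sending anything.
    if date.fromisoformat(start_date) > date.fromisoformat(end_date):
        raise ValueError("start_date must not be after end_date")
    params = {"operator": "BETWEEN", "startDate": start_date, "endDate": end_date}
    return f"https://{service_url}/mds/service/query/ingestionStatus?{urlencode(params)}"


print(ingestion_status_url("contoso.example", "2024-05-01", "2024-05-20"))
```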

Check that you get a healthy response from the Manufacturing data solutions health check API:

```bash
curl -H "Authorization: Bearer {BEARER_TOKEN}" "https://{serviceUrl}/mds/service/health"
```

Output:

```json
{
  "message": "Manufacturing data solutions is ready to serve the requests.",
  "setupStatus": "Succeeded",
  "setupInfo": { "registerEntityStatus": "Succeeded" },
  "errorMessage": [],
  "id": "f26dcdeb-6453-4521-b28e-610171db3997",
  "version": 1
}
```
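In scripts, you can check the parsed health response programmatically. This is a minimal sketch: `is_ready` is a hypothetical helper, and its readiness rule (overall and per-step setup statuses are Succeeded, and `errorMessage` is empty) is a heuristic inferred from the sample output above, not a documented contract.

```python
import json


def is_ready(health: dict) -> bool:
    """Return True if a health API response looks ready (heuristic based on the sample)."""
    if health.get("setupStatus") != "Succeeded" or health.get("errorMessage"):
        return False
    # Every step reported under setupInfo must also have succeeded.
    return all(status == "Succeeded" for status in health.get("setupInfo", {}).values())


sample = json.loads(
    '{"message": "Manufacturing data solutions is ready to serve the requests.",'
    ' "setupStatus": "Succeeded", "setupInfo": {"registerEntityStatus": "Succeeded"},'
    ' "errorMessage": [], "id": "f26dcdeb-6453-4521-b28e-610171db3997", "version": 1}'
)
print(is_ready(sample))  # True
```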

Debug ingestion validation errors

Note

The minimum access required to view the validation errors is the Member role in the Fabric workspace.

Batch ingestion

When files are uploaded to the lakehouse, they undergo validation checks.

For batch ingestion, the first 200 validation failures per entity are recorded. These errors are stored in a subfolder named ValidationErrors within the lake path that you specify. A CSV file is created in this folder, and the errors are recorded in the format shown in the sample CSV file.

During reingestion, a new file is created with a new timestamp, and any validation errors encountered are recorded in this new file.

Files in the ValidationErrors folder aren't cleaned up automatically. Because each reingestion creates a new file with the latest timestamp, you're responsible for deleting older files that you no longer need.

Sample CSV file

```csv
"File Name","Entity Name","File Type" , "Error Message", "Record"
"Operations Request_20240501134049.csv", "Operations Request", "CSV","DataTypeMisMatch: Cell Value(s) do(es) not adhere to the agreed upon data contract",""ulidoperationsrequest3762901per","YrbidoperationsRequest3762901", "descriptionoperationsRequest732483", "Production", "hierarchyscopeoperationsRequest577992", "2024-02-13T06:00:04.12", "2019-06-13T00:00:00","priorityoperationsRequest646820", "aborted""
```

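When a ValidationErrors file is large, counting errors per entity and error type shows where to start. This is a minimal sketch: `summarize_validation_errors` is a hypothetical helper, it assumes the error type is the text before the first colon in Error Message (as in `DataTypeMisMatch: ...`), and it reads only the Entity Name and Error Message columns because the Record column in the sample contains unescaped quotes.

```python
import csv
import io
from collections import Counter


def summarize_validation_errors(csv_text: str) -> Counter:
    """Count validation errors per (entity, error type) in a ValidationErrors CSV file."""
    # skipinitialspace handles the ", " separators used in the sample file.
    reader = csv.DictReader(io.StringIO(csv_text), skipinitialspace=True)
    counts: Counter = Counter()
    for row in reader:
        # Header names can carry stray spaces (for example "File Type" ,), so strip keys.
        # Extra, unparsed record cells land under the None key and are skipped.
        clean = {k.strip(): (v or "").strip() for k, v in row.items() if k is not None}
        error_type = clean.get("Error Message", "").split(":", 1)[0]
        counts[(clean.get("Entity Name", ""), error_type)] += 1
    return counts
```

For example, `summarize_validation_errors(open(path, encoding="utf-8").read()).most_common(5)` lists the five most frequent entity and error combinations in a downloaded file.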
Stream ingestion

When streaming data is sent to Manufacturing data solutions, errors are captured in a single file per day at the validation layer.

If you reingest data multiple times, errors are appended to the same file.

The file size limit is 10 MB; once a day's file reaches this size, no more errors are written to it for that day.

Sample file

```csv
"TimeStamp", "Entity Name", "DataFormat" , "ValidationErrorDataCategory" ,"Error Message", "Properties","Relationships"
"20240501130605", "Operations Request", "JSON", "Property", "Invalid property value as there is mismatch with its type ", "{"id":"0128341001001","description":"EXTRUDE","hierarchyScope":"BLOWNLINE06","startTime":"2023-09-24T02:43","endTime":"2023-09-24T19:23:48","requestState":"completed"}", "[]"
"20240501130623", "Equipment Property", "JSON", "Property", "Mandatory Property Cannot be null or empty", "{"id":"","description":"Address of the hierarchy scope object","value":"Contoso Furniture HQ Hyderabad India","valueUnitOfMeasure":"NA","externalreferenceid":"externalreferenceid7ET2VF39EH","externalreferencedictionary":"externalreferencedictionaryVfMZahPNcL","externalreferenceuri":"https://www.bing.com/"}", "[]"
"20240501130624", "Operations Request", "JSON", "Property", "Invalid property value as there is mismatch with its type ", "{"id":"0128341001001","description":"EXTRUDE","hierarchyScope":"BLOWNLINE06","startTime":"2023-09-24T02:43","endTime":"2023-09-24T19:23:48","requestState":"completed"}", "[]"
"20240501130625", "", "JSON", "Relationship", "Provide a valid entity name in the relationship", "{}", "[{"targetEntityName":"","keys":{"id":"FHnAddress1","value":"NA","valueUnitOfMeasure":"NA"},"relationshipType":null}]"
"20240501130627", "", "JSON", "Relationship", "Provide a valid entity name in the relationship", "{}", "[{"targetEntityName":"","keys":{"id":"FHnAddress1","value":"NA","valueUnitOfMeasure":"NA"},"relationshipType":null}]"
"20240501130628", "Equipment Property", "JSON", "Property", "Mandatory Property Cannot be null or empty", "{"id":"","description":"Ownership Type of the estabslishment","value":"Private Limited Company","valueUnitOfMeasure":"NA","externalreferenceid":"externalreferenceid6U5CFU5OVT","externalreferencedictionary":"externalreferencedictionarylxzpGxVYEN","externalreferenceuri":"https://www.bing.com/"}", "[]"
"20240501130630", "", "JSON", "Relationship", "Provide a valid entity name in the relationship", "{}", "[{"targetEntityName":"","keys":{"id":"EZWProducts Handled1","value":"NA","valueUnitOfMeasure":"NA"},"relationshipType":null}]"
"20240501134049", "Operations Request", "JSON", "Property", "Invalid property value as there is mismatch with its type ", "{"id":"0128341001001","description":"EXTRUDE","hierarchyScope":"BLOWNLINE06","startTime":"2023-09-24T02:43","endTime":"2023-09-24T19:23:48","requestState":"completed"}", "[]"
```

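In the sample above, the Properties and Relationships columns embed JSON whose inner quotes aren't escaped, so a standard CSV reader can split those columns unpredictably. The following sketch sidesteps that by extracting only the five leading columns with a regular expression. It assumes, based on the sample, that those columns never contain double quotes and that TimeStamp uses the `YYYYMMDDhhmmss` format; `parse_stream_error_line` is a hypothetical helper.

```python
import re
from datetime import datetime
from typing import Optional

# Matches the five leading quoted columns; the JSON columns that follow are ignored.
LEADING_FIELDS = re.compile(
    r'^"(?P<timestamp>\d{14})"\s*,\s*"(?P<entity>[^"]*)"\s*,\s*"(?P<format>[^"]*)"'
    r'\s*,\s*"(?P<category>[^"]*)"\s*,\s*"(?P<error>[^"]*)"'
)


def parse_stream_error_line(line: str) -> Optional[dict]:
    """Extract timestamp, entity, format, category, and message from one error line."""
    match = LEADING_FIELDS.match(line)
    if match is None:
        return None  # header row, or a line in an unexpected shape
    fields = match.groupdict()
    fields["timestamp"] = datetime.strptime(fields["timestamp"], "%Y%m%d%H%M%S")
    fields["error"] = fields["error"].strip()  # the sample messages carry trailing spaces
    return fields
```

Grouping the parsed lines by `category` and `error` quickly separates property problems from relationship problems.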
OPC UA stream ingestion

When streaming OPC UA data or metadata is sent to Manufacturing data solutions, errors are captured in a single file per day at the validation layer.

If you reingest data multiple times, errors are appended to the same file. The ValidationErrorDataCategory field indicates whether the issue is with the metadata or the data.

The file size limit is 10 MB; once a day's file reaches this size, no more errors are written to it for that day.

```csv
"TimeStamp", "DataFormat" , "ValidationErrorDataCategory" ,"Error Message", "DataMessage"
"20240513112048", "OPCUA", "OpcuaData", "[MDS_Ingestion_Response_Code : MDS_IG_OPCUA_MappingDataFieldsNotMatching] : Ingestion failed as Field key not matching with field in metadata with namespaceUri", "{"DataSetWriterId":200,"Payload":{"new key":{"Value":"Contoso Furniture HQ Hyderabad Indianew"}},"Timestamp":"2023-10-10T09:27:39.2743175Z"}"
"20240508115927", "OPCUA", "OpcuaMetadata", "[MDS_Ingestion_Response_Code : MDS_IG_OPCUAMetadataPublisherIdMissing]: Validation failed as publisherId is missing", "{"MessageType":"ua-metadata","PublisherId":"","MessageId":"600","DataSetWriterId":200,"MetaData":{"Name":"urn:assembly.seattle;nsu=http://opcfoundation.org/UA/Station/;i=403","Fields":[{"Name":"Address Hyderabad","FieldFlags":0,"BuiltInType":6,"DataType":null,"ValueRank":-1,"MaxStringLength":0,"DataSetFieldId":"b6738567-05eb-4b48-8cd4-f38c9b120129"}],"ConfigurationVersion":null}}"
"20240508120004", "OPCUA", "OpcuaMetadata", "[MDS_Ingestion_Response_Code : MDS_IG_OPCUAMetadataPublisherIdMissing]: Validation failed as publisherId is missing", "{"MessageType":"ua-metadata","PublisherId":"","MessageId":"600","DataSetWriterId":200,"MetaData":{"Name":"urn:assembly.seattle;nsu=http://opcfoundation.org/UA/Station/;i=403","Fields":[{"Name":"Address Hyderabad","FieldFlags":0,"BuiltInType":6,"DataType":null,"ValueRank":-1,"MaxStringLength":0,"DataSetFieldId":"b6738567-05eb-4b48-8cd4-f38c9b120129"}],"ConfigurationVersion":null}}"
"20240508120329", "OPCUA", "OpcuaMetadata", "[MDS_Ingestion_Response_Code : MDS_IG_OPCUAMetadataPayloadDeserializationFailed] : OPCUAMetadataIngestionFailed as JsonDeserialization to MetadataMessagePayload payload failed", "{"MessageType":"ua-metadata","PublisherId":"publisher.seattle","MessageId":"602","MetaData":{"Name":"urn:assembly.seattle;nsu=http://opcfoundation.org/UA/Station/;i=405","Fields":[{"Name":"Address Peeniya","FieldFlags":0,"BuiltInType":6,"DataType":null,"ValueRank":-1,"MaxStringLength":0,"DataSetFieldId":"b6738567-05eb-4b48-8cd4-f38c9b120129"}],"ConfigurationVersion":null}}"
"20240508121318", "OPCUA", "OpcuaMetadata", "[MDS_Ingestion_Response_Code : MDS_IG_OPCUAMetadataPayloadDeserializationFailed] : OPCUAMetadataIngestionFailed as JsonDeserialization to MetadataMessagePayload payload failed", "{"MessageType":"ua-metadata","PublisherId":"publisher.seattle","MessageId":"602","MetaData":{"Name":"urn:assembly.seattle;nsu=http://opcfoundation.org/UA/Station/;i=405","Fields":[{"Name":"Address Peeniya","FieldFlags":0,"BuiltInType":6,"DataType":null,"ValueRank":-1,"MaxStringLength":0,"DataSetFieldId":"b6738567-05eb-4b48-8cd4-f38c9b120129"}],"ConfigurationVersion":null}}"
```

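Each OPC UA error message starts with an `[MDS_Ingestion_Response_Code : ...]` prefix, so you can tally the codes in a day's file and look them up in the OPC UA error table. This is a minimal sketch: `extract_response_code` and `count_response_codes` are hypothetical helpers, and the pattern tolerates the spacing differences seen around the colon in the samples.

```python
import re
from collections import Counter
from typing import Iterable, Optional

# Matches "[MDS_Ingestion_Response_Code : <code>]" with or without spaces around ':'.
RESPONSE_CODE = re.compile(r"\[MDS_Ingestion_Response_Code\s*:\s*(\w+)\s*\]")


def extract_response_code(text: str) -> Optional[str]:
    """Return the MDS_IG_* code embedded in an error line, if any."""
    match = RESPONSE_CODE.search(text)
    return match.group(1) if match else None


def count_response_codes(lines: Iterable[str]) -> Counter:
    """Tally response codes across the lines of an OPC UA validation error file."""
    codes = (extract_response_code(line) for line in lines)
    return Counter(code for code in codes if code)  # skips the header and unmatched lines
```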
Debug other ingestion errors

Troubleshoot via logs

Note

The instructions are written for a technical/IT professional with familiarity with Azure services. If you are not familiar with these technologies, you may need to work with your IT professional to complete the steps.

Make sure that you have, at a minimum, the Log Analytics Reader role on the deployed Log Analytics workspace instance.

1. Go to the Application Insights resource in the Managed Resource Group.
2. Select Logs under the Monitoring section in the left-hand menu.
3. Run the following KQL in Application Insights to get more information:

```kusto
traces
| where message contains "ingestion failed"
```

Examples of batch ingestion CSV row failures in Application Insights:

For twins:

```text
[05:46:50 ERR] Ingestion failed for row: [rbsawmill1,KupSawMill1,Production Line for wood sawing operations,Large Wood Saw Medium Wood Saw,workUnit,ProductionLine,Description,assetsystemrefidYKUQX8MDDD,messystemrefidBA3XM6UOAL,dkjsfhdks] at 04/29/2024 05:46:50 for entity Equipment with filename : sampleLakehouse.Lakehouse/Files/dpfolder/Equipment_202404291105102034.csv due to There is a mismatch in total number of columns defined.
```

For relationships:

```text
[12:09:41 ERR] Ingestion failed for row: [Person,dhyardhandler11Test,,bbhandlinglocationyard3511,hasValuesOf] at 04/30/2024 12:09:41 with filename : sampleLakehouse.Lakehouse/Files/dpfolder/Person_Mapping_202403190947077573.csv due to Source Type and Target Type cannot be empty for the record.
```
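If you export these traces, you can pull the failing row, entity, file, and reason out of each message. This is a minimal sketch based only on the two sample messages above: `parse_ingestion_failure` is a hypothetical helper, it assumes the date is month-first (as `04/29/2024` implies), and it splits the row on commas naively, so quoted cells containing commas aren't handled.

```python
import re
from datetime import datetime
from typing import Optional

FAILURE = re.compile(
    r"Ingestion failed for row: \[(?P<row>.*?)\] at "
    r"(?P<at>\d{2}/\d{2}/\d{4} \d{2}:\d{2}:\d{2})"
    r"(?: for entity (?P<entity>.+?))? with filename : (?P<file>.+?) due to "
    r"(?P<reason>.+?)\.?$"
)


def parse_ingestion_failure(message: str) -> Optional[dict]:
    """Split an 'Ingestion failed for row' trace message into its parts."""
    match = FAILURE.search(message)
    if match is None:
        return None
    return {
        "cells": match["row"].split(","),  # naive split; quoted commas aren't handled
        "failed_at": datetime.strptime(match["at"], "%m/%d/%Y %H:%M:%S"),
        "entity": match["entity"],  # None for relationship (mapping) rows
        "file": match["file"],
        "reason": match["reason"],
    }
```

Comparing `len(result["cells"])` with the number of registered columns is a quick way to confirm a column-count mismatch like the one in the twin example.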

Commonly encountered errors

| Error Code | Meaning | Mitigation |
|---|---|---|
| MDS_IG_FileOnlyHasHeaderInformation | The file only has header | Ensure that the file contains data in addition to the header. |
| MDS_IG_InvalidHeader | Column Names in the header don't match with registered columns | Check and correct the column names in the file header. |
| MDS_IG_NotAllMandatoryColumnsArePresent | Header Validation Error: Mandatory Column is Missing | Include all mandatory columns defined during entity registration in the file. |
| MDS_IG_PrimaryKeyColumnNotPresent | Header Validation Error: PrimaryKey is missing | Ensure that the PrimaryKey, which is a mandatory column, is present in the entity file. |
| MDS_IG_ColumnCountRowCountMismatch | There's a mismatch in total number of columns defined | Ensure that the number of columns in the file matches the expected count. |
| MDS_IG_MandatoryColumnNotNullNotEmpty | Cell Value(s) at Mandatory Column(s) are either null or empty | Provide non-null and nonempty values for mandatory columns. |
| MDS_IG_DataTypeMisMatch | DataTypeMisMatch: Cell Value(s) doesn't adhere to the agreed upon data contract | Ensure that cell values conform to the specified data types. |
| MDS_IG_PrimaryKeyValueNotPresent (487) | PrimaryKey value is missing | Ensure that the PrimaryKey value, which is mandatory, is present and not null or empty. |
| MDS_IG_MandatoryPropertiesOfEntityAreMissingInRelationships (489) | Mandatory Properties of entity are missing in relationships | Include all mandatory properties in the entity relationships. |
| MDS_IG_InvalidPropertyValue (490) | Invalid property value as there's mismatch with its type | Ensure that property values match their specified types. |
| MDS_IG_ProvideAValidTargetEntityNameInTheRelationship (493) | Provide a valid entity name in the relationship | Specify a valid target entity name in the relationship. |
| MDS_IG_TargetEntityNameIsNotRegisteredInDMM (494) | Target entity name isn't registered in Manufacturing data solutions | Register the target entity in Manufacturing data solutions before defining relationships. |
| MDS_IG_PrimaryKeyPropertiesOfTargetEntityAreMissingInRelationship (495) | Properties marked as primary in target entity are missing in relationship | Include all primary key properties in the relationship. |
| MDS_IG_MandatoryPropertyCannotBeNullOrEmpty (496) | Mandatory Property can't be null or empty | Provide non-null and nonempty values for mandatory properties. |
| MDS_IG_CSVMappingFileSourceAndTargetShouldNotBeEmpty (499) | Source Type and Target Type can't be empty for the record | Provide valid source and target types in the mapping file. |
| MDS_IG_CSVMappingNumberOfCellsDoNotMatchNumberOfColumns (501) | Row data count isn't matching with the header count of the mapping file | Ensure that the number of cells in each row matches the number of columns in the mapping file header. |
| MDS_IG_EntityNameIsNotRegisteredInDMM (502) | Entity isn't registered in Manufacturing data solutions | Register the entity in Manufacturing data solutions before ingestion. |
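Several of these errors can be caught before you upload a batch file. The following Python function is a minimal client-side sketch that mirrors a subset of the table; it isn't the service's validator, so results can differ. `precheck_batch_file` is a hypothetical helper, and it assumes exact, case-sensitive column-name matching and that the registered, mandatory, and primary key column names come from your entity registration.

```python
import csv
import io


def precheck_batch_file(
    csv_text: str,
    registered_columns: list[str],
    mandatory_columns: list[str],
    primary_key: str,
) -> list[str]:
    """Return the MDS_IG_* codes a batch CSV file would probably trigger."""
    rows = [row for row in csv.reader(io.StringIO(csv_text)) if row]  # skip blank lines
    header = [name.strip() for name in rows[0]] if rows else []
    codes = set()

    # Header-level checks.
    if len(rows) <= 1:
        codes.add("MDS_IG_FileOnlyHasHeaderInformation")
    if any(name not in registered_columns for name in header):
        codes.add("MDS_IG_InvalidHeader")
    if any(name not in header for name in mandatory_columns):
        codes.add("MDS_IG_NotAllMandatoryColumnsArePresent")
    if primary_key not in header:
        codes.add("MDS_IG_PrimaryKeyColumnNotPresent")

    # Row-level checks.
    for row in rows[1:]:
        if len(row) != len(header):
            codes.add("MDS_IG_ColumnCountRowCountMismatch")
            continue
        values = dict(zip(header, (cell.strip() for cell in row)))
        if any(values.get(col) == "" for col in mandatory_columns if col in values):
            codes.add("MDS_IG_MandatoryColumnNotNullNotEmpty")
        if values.get(primary_key) == "":
            codes.add("MDS_IG_PrimaryKeyValueNotPresent")
    return sorted(codes)
```

Data type checks (`MDS_IG_DataTypeMisMatch`) are left out because they depend on how the service interprets each registered type.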

Common errors related to OPC UA

| Error Code | Meaning | Mitigation |
|---|---|---|
| MDS_IG_OPCUAInvalidDataNoRelatedTargetTwinExist | [MDS_Ingestion_Response_Code :MDS_IG_OPCUAInvalidDataNoRelatedTargetTwinExist] Unable to update OPCUA telemetry data as no related twin or target twin exist | Ensure that a related twin or target twin exists before attempting to update OPC UA telemetry data. |
| MDS_IG_OPCUAMetadataPublisherIdMissing | [MDS_Ingestion_Response_Code : MDS_IG_OPCUAMetadataPublisherIdMissing]: Validation failed as publisherId is missing | Include a valid publisherId in the metadata before reingesting the data. |
| MDS_IG_OPCUAMetadataDataSetWriterIdIdMissing | [MDS_Ingestion_Response_Code : MDS_IG_OPCUAMetadataDataSetWriterIdIdMissing]: Validation failed as DatasetWriterId is missing | Provide a valid DatasetWriterId in the metadata before reingesting the data. |
| MDS_IG_OPCUAMetadataUnsupportedMessageType | [MDS_Ingestion_Response_Code : MDS_IG_OPCUAMetadataUnsupportedMessageType]: Validation failed as provided MessageType isn't supported. The supported MessageTypes are ua-data or ua-metadata. | Ensure the MessageType is either ua-data or ua-metadata before reingesting the data. |
| MDS_IG_OPCUAMetadataNameMissing | [MDS_Ingestion_Response_Code : MDS_IG_OPCUAMetadataNameMissing]: Validation failed as Metadata Name is null or empty | Provide a non-null and nonempty Metadata Name before reingesting the data. |
| MDS_IG_OPCUAMetadataFieldsMissing | [MDS_Ingestion_Response_Code : MDS_IG_OPCUAMetadataFieldsMissing]: Validation failed as Metadata fields can't be empty | Ensure that Metadata fields are populated with valid entries before reingesting the data. |
| MDS_IG_OPCUA_MappingMissing | [MDS_Ingestion_Response_Code : MDS_IG_OPCUA_MappingMissing]: Ingestion failed due to missing mapping | Ensure that the mapping is uploaded before sending telemetry events. |
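Before publishing OPC UA metadata, you can check a message against the metadata rules in this table. This is a minimal client-side sketch: `precheck_opcua_metadata` is a hypothetical helper, the field names (`MessageType`, `PublisherId`, `DataSetWriterId`, `MetaData.Name`, `MetaData.Fields`) are taken from the sample error file, and the service's own checks may differ. For example, the sample file also shows metadata rejected with MDS_IG_OPCUAMetadataPayloadDeserializationFailed, which this sketch doesn't model.

```python
SUPPORTED_MESSAGE_TYPES = {"ua-data", "ua-metadata"}


def precheck_opcua_metadata(message: dict) -> list[str]:
    """Return the MDS_IG_* codes an OPC UA metadata message would probably trigger."""
    codes = []
    if message.get("MessageType") not in SUPPORTED_MESSAGE_TYPES:
        codes.append("MDS_IG_OPCUAMetadataUnsupportedMessageType")
    if not message.get("PublisherId"):  # missing or empty string
        codes.append("MDS_IG_OPCUAMetadataPublisherIdMissing")
    if message.get("DataSetWriterId") is None:  # 0 is still a present value
        codes.append("MDS_IG_OPCUAMetadataDataSetWriterIdIdMissing")
    metadata = message.get("MetaData") or {}
    if not metadata.get("Name"):
        codes.append("MDS_IG_OPCUAMetadataNameMissing")
    if not metadata.get("Fields"):
        codes.append("MDS_IG_OPCUAMetadataFieldsMissing")
    return codes
```

An empty result means the message passes these particular checks, not that ingestion is guaranteed to succeed; a mapping must also be uploaded before telemetry is sent.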

Start fresh

If you need to start Manufacturing data solutions with a clean slate, you can delete all the ingested data by using the Cleanup API. You can then reingest the data without interference from previously ingested datasets.

Debug response of health API

This section describes issues that you might encounter with the health API.

Unable to access health API

After the deployment completes, you can call the health API to check whether the system is in a ready state. You might encounter an authentication or authorization issue when invoking the health API. Ensure that you created the app registration as described in the prerequisites and that it has the necessary roles assigned.

To invoke any of the Manufacturing data solutions APIs, you must fetch the access token for the App ID specified during deployment and use it as the Bearer token.
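The following sketch shows the token step in Python. Credentials from the `azure-identity` package, such as `DefaultAzureCredential`, expose `get_token(*scopes)` returning an object with a `token` attribute, so any of them fits the `TokenCredentialLike` shape below. `bearer_headers` and `TokenCredentialLike` are hypothetical names, and the `{app_id}/.default` scope is an assumption; use the scope that your app registration actually exposes.

```python
from typing import Protocol


class AccessTokenLike(Protocol):
    token: str


class TokenCredentialLike(Protocol):
    def get_token(self, *scopes: str) -> AccessTokenLike: ...


def bearer_headers(credential: TokenCredentialLike, app_id: str) -> dict:
    """Fetch a token for the app registration and return the request headers."""
    # Assumption: the API accepts tokens issued for the "<app-id>/.default" scope.
    access_token = credential.get_token(f"{app_id}/.default")
    return {"Authorization": f"Bearer {access_token.token}"}
```

Typical usage, assuming `azure-identity` is installed: `bearer_headers(DefaultAzureCredential(), "<your-app-id>")`. Accepting the credential as a parameter keeps the helper usable with any credential type and easy to test with a stub.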

Failed status in health API response

The Manufacturing data solutions health API can sometimes return a response with the status Failed.

In that case, the response also contains the actual error message.

Depending on the error message, you might need to contact the Microsoft Support Team.

Troubleshoot control plane issues

Initial checks

Check metrics emitted by Azure resources using Azure Monitor

Diagnostic settings are enabled by default to capture extra metrics for the infrastructure resources. Metrics are enabled for the following resources and sent to the Log Analytics workspace that's provisioned as part of the deployment. These settings are enabled for all Manufacturing data solutions SKUs.

- Azure Data Explorer
- Azure Event Hubs
- Function App
- Azure Kubernetes Service
- Cosmos DB
- Azure OpenAI
- App Service Plan
- Azure Cache for Redis

Note

The retention period for these metrics in the Log Analytics workspace is 30 days.
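If you query these metrics directly in the Log Analytics workspace, there's no point looking back further than the retention period. The following sketch builds such a query. It assumes the diagnostic settings route platform metrics to the `AzureMetrics` table (the usual destination for metrics sent through diagnostic settings); `metrics_query` is a hypothetical helper, and the hourly aggregation is an arbitrary choice.

```python
import re

RETENTION_DAYS = 30  # metrics retention stated in this article


def metrics_query(resource_provider: str, lookback_days: int) -> str:
    """Build a KQL query over AzureMetrics, clamped to the retention window."""
    # Accept only provider-style names such as "Microsoft.DocumentDB" so the literal stays well-formed.
    if not re.fullmatch(r"[A-Za-z0-9.]+", resource_provider):
        raise ValueError(f"unexpected resource provider name: {resource_provider!r}")
    days = max(1, min(lookback_days, RETENTION_DAYS))  # older data is no longer retained
    return (
        "AzureMetrics\n"
        f"| where TimeGenerated > ago({days}d)\n"
        f"| where ResourceProvider =~ '{resource_provider}'\n"  # =~ compares case-insensitively
        "| summarize avg(Average), max(Maximum) by Resource, MetricName, bin(TimeGenerated, 1h)\n"
        "| order by TimeGenerated desc"
    )


print(metrics_query("Microsoft.DocumentDB", 90))
```

Paste the printed query into Logs for the Log Analytics workspace; for example, `Microsoft.Kusto` selects the Azure Data Explorer cluster.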

How to view metrics in Azure Monitor

Follow the Azure Monitor guidance on Microsoft Learn.

Debugging control plane issues

Commonly encountered scenarios

How to change network settings

- Ensure you have at least the Contributor role on the resource. Go to the Networking tab for the required resource and add your IP address.

How to grant database access

Prerequisite: Your IP address is added to the resource's networking settings.

Ensure you have the role required to access the resource. For example, to access a storage account, you need the Storage Blob Data Reader or Storage Blob Data Contributor role on the storage account. The same applies to Cosmos DB.

For Azure Data Explorer (ADX), you need Database Reader permissions to query the data. For detailed instructions, see Manage Cluster Permissions.

Troubleshoot copilot

Initial checks

Try to fetch information from a table by calling the Copilot Query API:

```http
POST https://{serviceUrl}/mds/copilot/v3/query?api-version=2024-06-30-preview

{
  "ask": "string"
}
```

Here, `ask` is the natural language question.
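The following sketch builds the same request with Python's standard library without sending it, which makes it easy to inspect before you call the API. `build_copilot_request` is a hypothetical helper; sending the bearer token and a JSON `Content-Type` header are assumptions based on how the other APIs in this article are called, and the response format isn't described here.

```python
import json
import urllib.request

API_VERSION = "2024-06-30-preview"


def build_copilot_request(service_url: str, token: str, ask: str) -> urllib.request.Request:
    """Build (but don't send) a POST request to the Copilot query API."""
    url = f"https://{service_url}/mds/copilot/v3/query?api-version={API_VERSION}"
    body = json.dumps({"ask": ask}).encode("utf-8")  # "ask" holds the natural language question
    return urllib.request.Request(
        url,
        data=body,
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
```

To send it, pass the request to `urllib.request.urlopen(req)` and read the response body; if the call fails, the Application Insights query in the next section helps trace it by `operation_Id`.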

Debug Copilot issues

Troubleshoot via logs

Note

The instructions are written for a technical/IT professional with familiarity with Azure services. If you are not familiar with these technologies, you may need to work with your IT professional to complete the steps.

Make sure that you at a minimum have Log Analytics Reader role to the deployed Log Analytics Workspaceinstance.

- Run the following KQL in Application Insights with updated start and end time to get more information.

KQL Query

let startTime = datetime('2024-07-04T00:00:00');

let endTime = datetime('2024-07-05T00:00:00');

let reqs = requests

| where timestamp between (startTime .. endTime)

| where cloud_RoleInstance startswith "aks-dmmcopilot-"

| where url has 'copilot/v3/query'

| project operation_Id, timestamp, resultCode, duration;

let exc = exceptions

| where timestamp between (startTime .. endTime)

| where cloud_RoleInstance startswith "aks-dmmcopilot-"

| distinct operation_Id, outerMessage

| summarize exception_list = make_list(outerMessage, 10) by operation_Id;

let copilot_traces = traces

| where timestamp between (startTime .. endTime)

| where cloud_RoleInstance startswith "aks-dmmcopilot-";

let instructions = copilot_traces

| where message has 'The instruction id used in prompt is '

| extend instructions = parse_json(customDimensions)['Instructionid']

| project operation_Id, instructions;

let alias = copilot_traces

| where message has 'AliasDictionary -'

| extend alias = tostring(parse_json(customDimensions)['aliasDictionary'])

| where isnotempty(alias)

| summarize aliases = take_any(alias) by operation_Id
| project operation_Id, aliases;
let retries = copilot_traces
| where message has 'Retry attempt {autoCorrectCount} out of'
| summarize arg_max(timestamp, customDimensions) by operation_Id
| project retries = toint(parse_json(customDimensions)['autoCorrectCount']), operation_Id;
let total_vector_search_ms = copilot_traces
| where message has 'Time taken to do similarity search on collection'
| summarize total_vector_search_ms = sum(todouble(parse_json(customDimensions)['timeTaken'])) by operation_Id
| project total_vector_search_ms, operation_Id;
let query = copilot_traces
| where message startswith 'User input -'
| extend query = tostring(parse_json(customDimensions)['input'])
| extend intent = iff(message contains 'invalid', 'invalid', 'valid')
| distinct operation_Id, query, intent;
let tokens = copilot_traces
| where message has 'Prompt tokens:' and message has 'Total tokens:'
| extend prompt_tokens = toint(parse_json(customDimensions)['PromptTokens'])
| extend completion_tokens = toint(parse_json(customDimensions)['CompletionTokens'])
| summarize make_list(prompt_tokens), make_list(completion_tokens) by operation_Id;
let total_tokens = copilot_traces
| where message has 'Prompt tokens:' and message has 'Total tokens:'
| extend total_tokens = toint(parse_json(customDimensions)['TotalTokens'])
| summarize total_token = sum(total_tokens) by operation_Id;
let kql = copilot_traces
| where message has 'Generated Graph KQL Query -'
| extend KQLquery = parse_json(customDimensions)['kqlQuery']
| summarize kqls = make_list(KQLquery) by operation_Id
| project operation_Id, kqls;
let kql_sanitized = copilot_traces
| where message has 'Sanitized Graph KQL Query -'
| extend KQLquery = parse_json(customDimensions)['kqlQuery']
| summarize s_kqls = make_list(KQLquery) by operation_Id
| project operation_Id, s_kqls;
reqs
| extend operation_id_req = operation_Id
| join kind=leftouter exc on $left.operation_id_req == $right.operation_Id
| join kind=leftouter query on $left.operation_id_req == $right.operation_Id
| join kind=leftouter instructions on $left.operation_id_req == $right.operation_Id
| join kind=leftouter alias on $left.operation_id_req == $right.operation_Id
| join kind=leftouter tokens on $left.operation_id_req == $right.operation_Id
| join kind=leftouter total_tokens on $left.operation_id_req == $right.operation_Id
| join kind=leftouter kql on $left.operation_id_req == $right.operation_Id
| join kind=leftouter kql_sanitized on $left.operation_id_req == $right.operation_Id
| join kind=leftouter retries on $left.operation_id_req == $right.operation_Id
| join kind=leftouter total_vector_search_ms on $left.operation_id_req == $right.operation_Id
| project
    timestamp,
    operation_Id,
    resultCode,
    query,
    duration,
    intent,
    exception_list,
    instructions,
    aliases,
    list_prompt_tokens,
    list_completion_tokens,
    total_token,
    kqls,
    s_kqls,
    retries,
    total_vector_search_ms
| order by timestamp desc

Go to the Application Insights resource in the Managed Resource Group.

Select "Logs" under the Monitoring section in the left-hand navigation.
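After the preceding query surfaces a failing request, it can help to see every telemetry record for that single operation in time order. The following is a minimal sketch. It assumes the standard Application Insights tables (`requests`, `dependencies`, `traces`, `exceptions`). The `<operation-id>` placeholder stands for an `operation_Id` value copied from the query results, and the 7-day window is an arbitrary choice.

```kusto
// Placeholder: paste an operation_Id value from the results of the preceding query
let target_operation = "<operation-id>";
union requests, dependencies, traces, exceptions
| where timestamp > ago(7d)
| where operation_Id == target_operation
// itemType identifies the source table (request, dependency, trace, or exception)
| project timestamp, itemType, name, message, outerMessage, resultCode, duration
| order by timestamp asc
```

Because `union` defaults to an outer union, columns that exist only in some tables (for example, `message` in `traces` or `outerMessage` in `exceptions`) are empty for rows from the other tables.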

Commonly encountered issues

Alias dictionary

| Error Code | Error Message | Description |
|---|---|---|
| MDSCP5001 | Alias info not found related to the ID: {id} | The system was unable to find any alias information for the provided ID. This error can occur if the ID is incorrect or if the alias hasn't been created yet. |
| MDSCP5002 | Error saving custom alias with the key {key} | An error occurred while saving the custom alias with the specified key. This error could be due to a conflict with an existing alias or a temporary issue with the database. |
| MDSCP5003 | Custom Alias Dictionary with the {id} is Internal. Hence, can't be deleted. | The alias dictionary identified by the given ID is marked as internal and can't be deleted. Internal dictionaries are protected to ensure system integrity. |
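If MDSCP5001 recurs, a query like the following can list which IDs triggered it. This is a sketch under an assumption: it expects the error message text to be written to the `traces` or `exceptions` table of the same Application Insights resource. Adjust the table names if the service logs it elsewhere.

```kusto
union traces, exceptions
| where timestamp > ago(7d)
// Use the trace message or, for exception rows, the outer exception message
| extend msg = coalesce(message, outerMessage)
| where msg has "MDSCP5001" or msg has "Alias info not found"
// Capture the ID that follows "related to the ID:" in the error message
| extend missing_id = extract(@"related to the ID:\s*(\S+)", 1, msg)
| summarize occurrences = count(), last_seen = max(timestamp) by missing_id
| order by occurrences desc
```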

Example Query

| Error Code | Error Message | Description |
|---|---|---|
| MDSCP4001 | Example query doc with exampleId {exampleId} not found | The system couldn't find any example query document associated with the provided exampleId. This error can occur if the ID is incorrect or if the example hasn't been created yet. |
| MDSCP4002 | The given query has admin command {Admin Command} at index {Index} | The provided query contains an admin command at the specified index, which isn't allowed. Remove the admin command and try again. |
| MDSCP4003 | {ExampleId} is already in use | The provided example ID is already in use by another query. Use a unique example ID. |
| MDSCP4004 | Example query contains Reserved Keyword Internal | The example query contains the reserved keyword 'Internal', which isn't allowed. Remove or replace the reserved keyword. |
| MDSCP4005 | An example with the same {UserQuestion}, {SampleQuery}, {LinkedInstructions}, and {exampleId} already exists | An example query with the same user question, sample query, linked instructions, and example ID already exists in the system. |
| MDSCP4006 | Example query or queries contains duplicate IDs | The provided payload contains duplicate example IDs. Each example ID must be unique. |
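MDSCP4002 refers to admin commands. In KQL, management (control) commands begin with a dot, such as `.show` or `.drop`, whereas queries begin with a tabular expression. The following sketch contrasts the two shapes. The table and column names are hypothetical, and the exact set of commands the service rejects is an assumption.

```kusto
// Likely rejected in an example query (MDSCP4002): a management command, which begins with a dot
.show tables

// Acceptable shape: a read-only tabular query (hypothetical table and column names)
Equipment
| where Status == "Running"
| project EquipmentId, Status
| take 10
```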

Instruction

| Error Code | Error Message | Description |
|---|---|---|
| MDSCP3001 | An example with ID {exampleId} has the same linked instructions | An existing example with the provided example ID has the same linked instructions. Make sure the linked instructions are unique, or modify the existing example. |
| MDSCP3002 | Can't find the following linked instruction ID registered: {Instruction Ids} | The system couldn't find the provided instruction IDs. Verify the IDs and try again. |
| MDSCP3003 | Validation failed for Instruction request. \nError: {Message} | The instruction request failed validation. For more information, see the provided error message and correct the request accordingly. |
| MDSCP3004 | No Instruction with InstructionId: {instructionId} and Version: {version} is present | The system couldn't find any instruction with the provided instruction ID and version. Verify the ID and version and try again. |
| MDSCP3005 | No Instruction with InstructionId : {0} and Version : {1} is present. | The system couldn't find the instruction with the provided instruction ID and version. Verify the ID and version and try again. |
| MDSCP3006 | Internal Instruction with the {instructionId} can't be deleted | The instruction identified by the given ID is marked as internal and can't be deleted. Internal instructions are protected to ensure system integrity. |
| MDSCP3007 | Instruction can't be deleted as it's referenced by others | The instruction can't be deleted because other entities currently reference it. Remove the references before you try to delete the instruction. |
| MDSCP3008 | Can't delete bulk delete instruction custom versions, make sure deleteAll flag is true. Exercise caution. | Bulk deletion of an instruction's custom versions requires the deleteAll flag to be set to true. Exercise caution when you set this flag. |
| MDSCP3010 | This instruction has only one version. Deleting it removes the instruction entirely, but it's referenced by other instructions. To completely delete this instruction, use the deleteAll endpoint and set forceDelete to true. | The instruction has a single version, so deleting that version would remove the instruction entirely, which isn't allowed while other instructions reference it. To delete the instruction completely, call the deleteAll endpoint with forceDelete set to true. |

Operation

| Error Code | Error Message | Description |
|---|---|---|
| MDSCP1001 | Operation ID doesn’t exists | The system couldn't find any operation associated with the provided operation ID. Verify the ID and try again. |

Query

| Error Code | Error Message | Description |
|---|---|---|
| MDSCP2001 | Query has Invalid Intent | The user query doesn't relate to the Manufacturing Operations Management domain. Make sure the query is relevant to the domain. |
| MDSCP2002 | Expected KQL isn't valid | The expected KQL (Kusto Query Language) is invalid. Correct the KQL syntax and try again. |
| MDSCP2003 | Validate Test details not found | The system couldn't find any validation test details corresponding to the provided test ID. Verify the ID and try again. |
| MDSCP2004 | Unable to generate valid KQL for the user Ask | The system was unable to generate valid KQL for the user's query. Review the query and try again. |
| MDSCP2005 | Include Summary isn't specified when working with Conversation | The 'Include Summary' flag is mandatory when working with the Conversation API. Specify the flag and try again. |
| MDSCP2007 | Test cases Validation failure | The provided test cases are empty. Provide valid test cases and try again. |
| MDSCP2008 | Test Query “Aks” is empty | The query for the test case is empty. Provide a valid query and try again. |
| MDSCP2009 | Query KQL is empty | The expected KQL for the test case isn't specified. Provide valid KQL and try again. |
| MDSCP2010 | Test summary request invalid | The test summary request is invalid. Review the request and try again. |
| MDSCP2011 | Conversation ID isn't present in the header | The conversation ID is missing from the request header. Include the conversation ID and try again. |
| MDSCP2012 | Query has cast exception | The resulting KQL raised an invalid cast exception. Review the query and correct any type mismatches. |
| MDSCP2013 | API has throttled | The API encountered throttling from OpenAI. Try again later or reduce the frequency of requests. |
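To check whether MDSCP2013 throttling errors cluster at particular times, which would suggest spreading requests out, the following sketch bins occurrences over time. It assumes the error code appears in exception messages in the same Application Insights resource. The 1-day window and 15-minute bucket size are arbitrary choices.

```kusto
exceptions
| where timestamp > ago(1d)
| where outerMessage has "MDSCP2013" or innermostMessage has "MDSCP2013"
// Count throttling errors in 15-minute buckets
| summarize throttled = count() by bin(timestamp, 15m)
| order by timestamp asc
| render timechart
```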

Feedback

| Error Code | Error Message | Description |
|---|---|---|
| MDSCP6001 | Feedback Request is Invalid | The feedback request is invalid. Review the request and ensure all required fields are correctly filled. |
| MDSCP6002 | Operation ID is invalid | The provided operation ID is invalid. Verify the ID and try again. |
| MDSCP2003 | Invalid value for the Feedback | The feedback contains an invalid value. Review the feedback and provide a valid value. |
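The codes in the preceding tables share the MDSCP prefix. In most cases, the first digit after the prefix follows the area: 1 Operation, 2 Query, 3 Instruction, 4 Example query, 5 Alias dictionary, and 6 Feedback. The following sketch uses that observed pattern, which isn't a documented contract, to count recent errors per area. It also assumes the codes appear in exception messages in the same Application Insights resource.

```kusto
exceptions
| where timestamp > ago(7d)
// Pull the first MDSCP code out of the exception message, if any
| extend error_code = extract(@"(MDSCP\d{4})", 1, outerMessage)
| where isnotempty(error_code)
// Map the digit after the prefix to an area (observed pattern, not a documented contract)
| extend area = case(
    error_code startswith "MDSCP1", "Operation",
    error_code startswith "MDSCP2", "Query",
    error_code startswith "MDSCP3", "Instruction",
    error_code startswith "MDSCP4", "Example query",
    error_code startswith "MDSCP5", "Alias dictionary",
    error_code startswith "MDSCP6", "Feedback",
    "Unknown")
| summarize occurrences = count() by area, error_code
| order by occurrences desc
```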

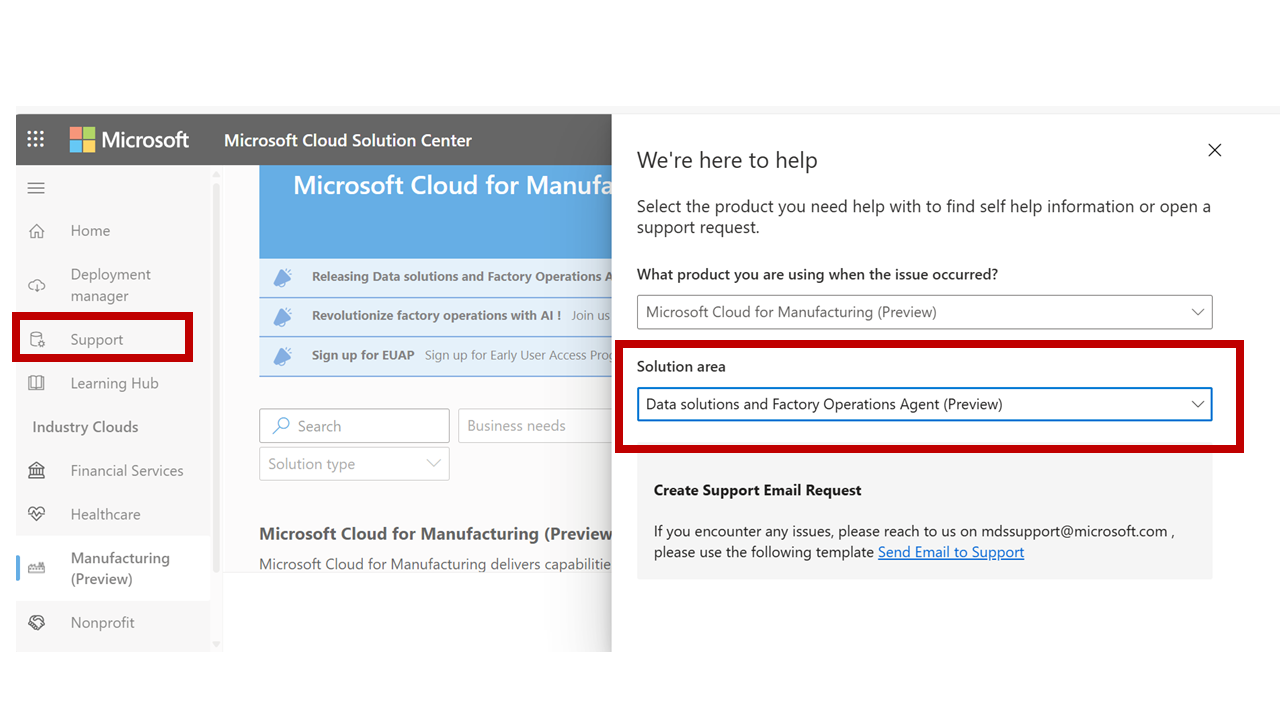

Create a support email ticket

In Solution Center, select Support in the left-hand pane. Under Solution Area, select Microsoft Cloud for Manufacturing (Preview), and then select Data Solutions and Factory Operations Agent (Preview). Select the support email template to send a support request to the Manufacturing data solutions team.

You can also create a support ticket from the announcements in Solution Center on the Microsoft Cloud for Manufacturing home page. Select Send Email to send a support request to the Manufacturing data solutions team.