Step 4. Evaluate the POC’s quality

See the GitHub repository for the sample code in this section.

Expected time: 5 to 60 minutes. Time varies with the number of questions in your evaluation set; for 100 questions, evaluation takes approximately 5 minutes.
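To budget time before clicking Run all, you can make a rough estimate from the single figure quoted above (about 5 minutes per 100 questions). The sketch below assumes, as a simplification, that run time scales linearly with the number of questions. The constant and the function name `estimate_eval_minutes` are hypothetical, and the result is only an order-of-magnitude guide, not a guarantee.

```python
# Assumption: evaluation time scales roughly linearly with the number of
# questions, calibrated on the quoted figure of ~5 minutes per 100 questions.
MINUTES_PER_100_QUESTIONS = 5.0


def estimate_eval_minutes(num_questions: int) -> float:
    """Hypothetical back-of-envelope estimate of evaluation run time in minutes."""
    if num_questions < 0:
        raise ValueError("num_questions must be non-negative")
    return num_questions * MINUTES_PER_100_QUESTIONS / 100


for n in (100, 250, 1200):
    print(f"{n} questions -> ~{estimate_eval_minutes(n):.1f} min")
```

Under this linear assumption, the 60-minute upper end of the quoted range corresponds to an evaluation set of roughly 1,200 questions.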

Overview and expected outcome

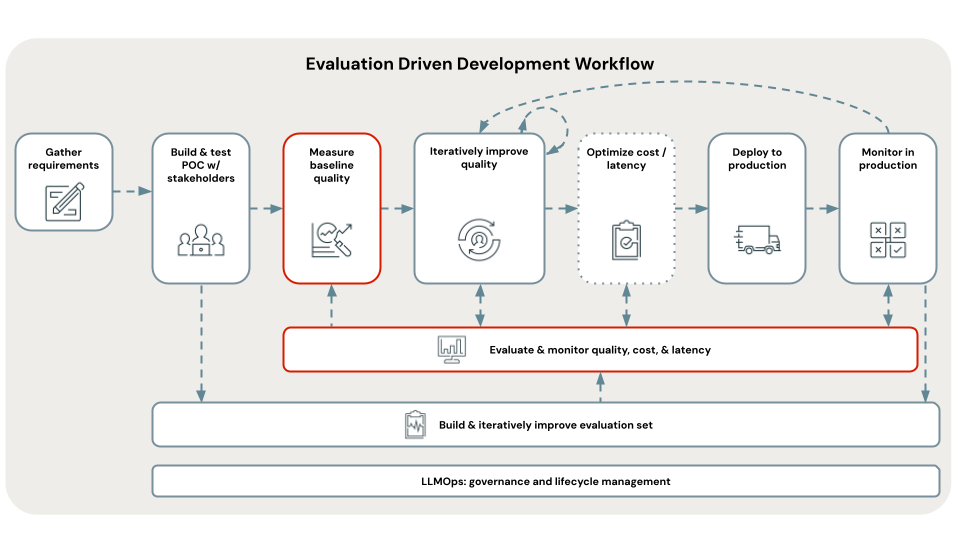

This step uses the evaluation set you just curated to evaluate your POC app and establish its baseline quality, cost, and latency. The next step uses these evaluation results to identify the root cause of any quality issues.

Evaluation uses Mosaic AI Agent Evaluation, which assesses every aspect of quality, cost, and latency outlined in the metrics section of this tutorial.

The aggregated metrics and evaluation of each question in the evaluation set are logged to MLflow. For details, see Evaluation outputs.
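Agent Evaluation computes and logs both levels of output for you. To make the relationship between them concrete, the sketch below shows how per-question rows roll up into run-level summary numbers. The `QuestionResult` fields (`correct`, `latency_seconds`, `total_tokens`) and the metric names are hypothetical illustrations, not Agent Evaluation's actual output schema; token count stands in as a rough proxy for cost.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class QuestionResult:
    """One row of hypothetical per-question evaluation output."""
    request: str
    correct: bool           # hypothetical judge verdict for this question
    latency_seconds: float  # end-to-end response time for this question
    total_tokens: int       # rough proxy for cost


def aggregate(results: list[QuestionResult]) -> dict[str, float]:
    """Roll per-question rows up into run-level summary metrics."""
    if not results:
        raise ValueError("need at least one result")
    return {
        # Fraction of questions the judge marked correct.
        "correct_rate": sum(r.correct for r in results) / len(results),
        "mean_latency_seconds": mean(r.latency_seconds for r in results),
        "mean_total_tokens": mean(r.total_tokens for r in results),
    }


rows = [
    QuestionResult("What is Delta Lake?", True, 2.0, 800),
    QuestionResult("How do I create a cluster?", False, 3.0, 1200),
    QuestionResult("What is Unity Catalog?", True, 4.0, 1000),
]
print(aggregate(rows))
```

Keeping the per-question rows alongside the aggregates is what lets the next step trace a low summary score back to the specific questions that caused it.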

Requirements

- An evaluation set is available.

- All requirements from previous steps.

Instructions

- Open the 05_evaluate_poc_quality notebook in your chosen POC directory and click Run all.

- Inspect the results of evaluation in the notebook or using MLflow. If the results meet your requirements for quality, you can skip directly to Deploy and monitor. Because the POC application is built on Databricks, it is ready to be deployed to a scalable, production-ready REST API.
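Deciding whether "the results meet your requirements" amounts to comparing each summary metric against a target. The sketch below is one way to make that check explicit, assuming hypothetical metric names and threshold values; substitute the metrics and targets you defined for your own application. Quality metrics use a minimum target, while latency and cost use a maximum.

```python
# Hypothetical quality bar; metric names and limits are illustrative only.
THRESHOLDS: dict[str, tuple[str, float]] = {
    "correct_rate": ("min", 0.80),          # higher is better
    "mean_latency_seconds": ("max", 5.0),   # lower is better
    "mean_total_tokens": ("max", 2000),     # lower is better (cost proxy)
}


def failing_metrics(
    metrics: dict[str, float],
    thresholds: dict[str, tuple[str, float]] = THRESHOLDS,
) -> list[str]:
    """Return the names of metrics that miss their target (missing counts as failing)."""
    failures = []
    for name, (direction, limit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(name)
        elif direction == "min" and value < limit:
            failures.append(name)
        elif direction == "max" and value > limit:
            failures.append(name)
    return failures


baseline = {"correct_rate": 0.72, "mean_latency_seconds": 3.1, "mean_total_tokens": 1400}
failures = failing_metrics(baseline)
if failures:
    print("Below target; investigate root causes for:", failures)
else:
    print("Meets requirements; proceed to deployment.")
```

With these illustrative numbers, only `correct_rate` misses its target, so the POC would continue to root-cause analysis rather than going straight to deployment.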

Next step

Using this baseline evaluation of the POC’s quality, identify the root causes of any quality issues and iteratively fix those issues to improve the app. See Step 5. Identify the root cause of quality issues.